Sora 2 expands across creator apps – 5‑day free tiers, 4/8/12s chaining unlocked


Executive Summary

Sora 2 stopped being a demo and became a button in your toolchain today. ComfyUI shipped a desktop Sora 2 API node with 4/8/12‑second runs and tall/wide presets (720×1280, 1280×720), while Sora 2 Pro adds 1024×1792 and 1792×1024 — and outputs are watermark‑free. HeyGen turned Sora on inside the app, so teams can test looks and audio‑sync without export hops. This is the distribution moment: Sora shows up where creators already work.

Access got aggressive, too. Lovart is offering Oct 7–12 as a free window with 5 basic and 1 pro gen per day, no watermark, and paid tiers dangling a month of “unlimited.” Higgsfield’s “UNLIMITED Sora 2/Pro” went viral, ImagineArt flipped both tiers live, and OpenArt enabled Fast and Pro with dialogue‑sync so you can A/B Pro’s physics against quicker passes in one hub. Editors just got runway for real tests, not toy prompts.

Workflow-wise, Sora Extend open‑sourced “infinite length” chaining, stitching 12‑second segments with last‑frame context so long scenes don’t die at the cap. The catch: creators report near‑constant Sora 2 API failures, so plan retries, queues, or fallbacks until stability lands. Platform‑wide availability is here; now OpenAI has to keep it standing under load.

Feature Spotlight

Sora 2 goes platform‑wide for creators

Sora 2/Pro spreads across creator platforms with free windows, in‑app integrations, and API nodes—plus an open‑source “infinite length” chain—putting high‑fidelity, audio‑synced video into everyday workflows.

Cross‑account spike: third‑party apps and tools light up Sora 2/Pro with promos, integrations, and new utilities. Today’s tweets emphasize access windows, in‑app builds, API nodes, and long‑form chaining.

🎬 Sora 2 goes platform‑wide for creators

Sora Extend open‑sources “infinite length” chaining to bypass 12s limit

Sora Extend is now open source, stitching Sora 2 segments into continuous long‑form clips via prompt enhancement and last‑frame context Open source tool. This follows infinite chaining, which demoed segment stitching before code release, and gives editors a practical path to narrative scenes beyond 12 seconds.
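The chaining idea can be sketched in a few lines. This is a minimal illustration, not Sora Extend's actual code: `plan_segments`, `chain_clips`, and the `generate` callable are hypothetical names, the prompt-enhancement step is omitted, and the only facts taken from this issue are the 4/8/12-second lengths and the use of the previous segment's last frame as context.

```python
from typing import Callable, List, Optional, Tuple

ALLOWED_SECONDS = (12, 8, 4)  # clip lengths named in this issue, longest first


def plan_segments(total_seconds: int) -> List[int]:
    """Split a target runtime into 4/8/12-second segments (greedy, longest first)."""
    if total_seconds <= 0 or total_seconds % 4 != 0:
        raise ValueError("total_seconds must be a positive multiple of 4")
    plan: List[int] = []
    remaining = total_seconds
    while remaining > 0:
        length = next(s for s in ALLOWED_SECONDS if s <= remaining)
        plan.append(length)
        remaining -= length
    return plan


# Hypothetical generator: (prompt, seconds, previous_last_frame) -> (clip, last_frame)
Generator = Callable[[str, int, Optional[bytes]], Tuple[bytes, bytes]]


def chain_clips(prompt: str, total_seconds: int, generate: Generator) -> List[bytes]:
    """Generate segments in order, passing each one the previous segment's last frame."""
    clips: List[bytes] = []
    last_frame: Optional[bytes] = None  # the first segment has no prior context
    for seconds in plan_segments(total_seconds):
        clip, last_frame = generate(prompt, seconds, last_frame)
        clips.append(clip)
    return clips
```

Passing the generator in as a callable keeps the planning logic testable without any API access; a real run would wrap whichever Sora 2 host you use and then concatenate the returned clips in an editor or with a video tool.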

ComfyUI ships Sora 2 API Node with Pro tall/wide presets and no watermark

ComfyUI added a Sora 2 API Node with 4s/8s/12s lengths and watermark‑free output. Sora‑2 supports 720×1280 and 1280×720; Sora‑2 Pro adds 1024×1792 and 1792×1024 Node specs. Comfy also notes desktop availability, making node‑based Sora workflows easier to adopt for hobbyists and studios alike Desktop note.
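If you script runs outside the node graph, a small pre-flight check against the specs above can catch bad requests before they cost a generation. The sketch below is illustrative only: the tier labels `"sora-2"` and `"sora-2-pro"` and the function name are placeholders, and it assumes "adds" means Pro also accepts the two base sizes.

```python
from typing import Set, Tuple

DURATIONS: Set[int] = {4, 8, 12}  # seconds, per the node specs quoted above
BASE_SIZES: Set[Tuple[int, int]] = {(720, 1280), (1280, 720)}
# Assumption: Pro accepts the base sizes plus the two larger presets.
PRO_SIZES: Set[Tuple[int, int]] = BASE_SIZES | {(1024, 1792), (1792, 1024)}

SIZES_BY_TIER = {"sora-2": BASE_SIZES, "sora-2-pro": PRO_SIZES}  # placeholder labels


def validate_request(tier: str, width: int, height: int, seconds: int) -> None:
    """Raise ValueError if the tier, size, or duration falls outside the listed specs."""
    if tier not in SIZES_BY_TIER:
        raise ValueError(f"unknown tier: {tier}")
    if (width, height) not in SIZES_BY_TIER[tier]:
        raise ValueError(f"{width}x{height} is not listed for {tier}")
    if seconds not in DURATIONS:
        raise ValueError(f"{seconds}s is not one of 4/8/12")
```

For example, `validate_request("sora-2", 1792, 1024, 8)` raises, because the wide 1792×1024 preset is listed only for Pro.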

Higgsfield’s UNLIMITED Sora 2/Pro goes viral, expanding worldwide access

Higgsfield’s “UNLIMITED Sora 2 & Pro” launch is drawing massive engagement and promises unrestricted, global access with audio‑sync support Unlimited rollout. Creators are already posting Sora 2 Pro samples generated via Higgsfield, underscoring demand for high‑ceiling tiers beyond OpenAI’s own app Creator sample First video.

HeyGen adds in‑app Sora 2 after early OpenAI access

HeyGen says Sora 2 now runs inside its product, after being granted early access by OpenAI, signaling another mainstream creator tool integrating Sora directly In-app announcement. For teams already using HeyGen for production pipelines, this removes the export–import hop when testing Sora looks and audio sync.

Lovart offers 5 days free Sora 2 with daily 5 basic + 1 pro, no watermark

Lovart is giving all users free Sora 2 access Oct 7–12, including 5 basic and 1 pro generations per day with zero credits and no watermark; paid tiers unlock a month of unlimited use Free window details. It’s a high‑leverage on‑ramp for filmmakers and designers to test Sora 2 Pro without friction.

Creators flag near‑constant Sora 2 API failures during concept runs

High‑volume testing surfaced Sora 2 API instability, with creators reporting near‑constant failures and asking OpenAI for an ETA on a fix Reliability report. For teams planning side‑by‑side Sora 2 vs Pro comparisons or client demos, this signals a need for retries, queueing, or fallback models until stability improves.
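One way to act on that advice is a retry wrapper with exponential backoff that falls through to a second backend. This is a generic sketch under stated assumptions: `run_with_fallback` and the backend callables are hypothetical, and no real Sora client or error type is modeled here.

```python
import time
from typing import Callable, Optional, Sequence, TypeVar

T = TypeVar("T")


def run_with_fallback(
    backends: Sequence[Callable[[], T]],
    attempts: int = 3,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Try each backend in order; retry each with exponential backoff before moving on."""
    last_error: Optional[Exception] = None
    for backend in backends:
        for attempt in range(attempts):
            try:
                return backend()
            except Exception as exc:  # a real client would catch narrower error types
                last_error = exc
                if attempt < attempts - 1:
                    sleep(base_delay * 2 ** attempt)  # 1s, 2s, 4s, ...
    raise RuntimeError("all backends failed") from last_error
```

List the primary model first and the fallback second, for example `run_with_fallback([render_with_sora, render_with_other_model])`, where both callables are your own wrappers. The `sleep` parameter is injectable so the backoff schedule can be tested without waiting.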

ImagineArt turns on Sora 2 and Sora 2 Pro for creators

ImagineArt announces Sora 2 and Sora 2 Pro are “live,” touting hyper‑real visuals, narration, and clean audio—another platform‑level switch‑on for everyday creators Platform announcement. A second post reiterates access across tiers, pointing to a broader ecosystem push to make Sora 2 available beyond OpenAI’s own app Access confirmation.

OpenArt enables Sora 2 (Fast & Pro) with audio sync and 4/8/12s options

OpenArt switched on Sora 2 across Fast and Pro modes with synchronized dialogue and sound effects, plus 4/8/12‑second durations Feature list. The move gives OpenArt’s creator base a direct way to compare Pro’s physics and performance upgrades against Fast iterations inside one hub.

BasedLabsAI adds Sora 2 generation for creators

BasedLabsAI now supports Sora 2, with creators sharing their first anime‑style videos generated on the platform Creator demo. This expands the roster of third‑party build points where filmmakers and storytellers can test Sora‑driven sequences alongside other video stacks.

📹 Non‑Sora video: Grok, Veo‑3, Pika, Kling heat up

Focuses on video models outside the Sora feature (excludes Sora news). Today shows Grok Video quality jumps, Veo‑3 promptcraft, social‑ready Hedra, Pika’s longer clips, and 30s K‑pop choreography via Apob.

Grok Video momentum grows; Astra upscale boosts realism

Creators say Grok Video “holds up” across styles, sharing multi‑genre tests and playful reels, while Topaz Labs’ Astra upscaling noticeably improves water physics and fine detail. Following up on the first look reel that showed rapid style variety, new side‑by‑sides and clips strengthen the case for a Grok→Astra pipeline for social delivery and client work Style tests, First tests, Meow train clip, Experiment thread, Astra upscale.

Apob ReVideo touts 30‑second K‑pop‑style dance clips for creators

Apob is pitching ReVideo as a way to generate 30‑second AI K‑pop dance videos that “move like the real idols,” offering longer choreography without Sora access. The landing page emphasizes creator‑friendly, rhythm‑accurate motion and immediate onboarding for social content Try link, Product page.

Hedra adds Veo 3 Vertical for social‑ready outputs in minutes

Hedra is showcasing Veo 3 Vertical, positioning it for quick social format creation with one‑click vertical outputs. For creators working shorts and reels, this tightens the prompt → post loop without extra conform steps Hedra announcement.

Veo‑3 Fast on Gemini gets cinematic prompt blueprints for food and sci‑fi

Two detailed 8‑second scripts show how to direct Veo‑3 Fast on Gemini with time‑coded scene beats, lens moves, lighting, and audio cues—one for a luxury turkey‑platter ad, another for a colossal terraforming processor transforming a red sky to blue. These recipes read like miniature storyboards, useful for art directors and editors needing repeatable, spec‑ad‑ready outputs Luxury ad recipe, Terraforming script.
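To make time-coded beats repeatable, you can keep them as data and render the prompt text from them. The sketch below is an assumption-heavy illustration: the `[start-end s]` line format and the `build_timecoded_prompt` helper are invented here and are not the format used in the linked recipes; only the 8-second total comes from the item above.

```python
from typing import List, Tuple

Beat = Tuple[float, float, str]  # (start_seconds, end_seconds, description)


def build_timecoded_prompt(beats: List[Beat], total_seconds: float = 8.0) -> str:
    """Render contiguous beats as '[0.0-3.0s] description' lines covering the full clip."""
    cursor = 0.0
    lines: List[str] = []
    for start, end, text in beats:
        if start != cursor or end <= start:
            raise ValueError(f"beat {start}-{end} leaves a gap, overlaps, or is empty")
        lines.append(f"[{start:.1f}-{end:.1f}s] {text}")
        cursor = end
    if cursor != total_seconds:
        raise ValueError(f"beats end at {cursor}s, expected {total_seconds}s")
    return "\n".join(lines)
```

Checking that beats are contiguous and end exactly at the clip length catches the most common storyboard slip, a beat that runs past the cap or leaves dead air, before the prompt is ever submitted.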

Kling 2.5 Turbo promptcraft: dashboard‑to‑skyline drive in one take

A granular Kling 2.5 Turbo prompt maps an elevated‑overpass drive shot—from dashboard‑level steady glide to a gentle yaw reveal and ending tilt—complete with roll compensation, lens‑flare pacing, and layered ambience. It’s a useful template for previs, mood reels, or establishing shots, and pairs with broader creator endorsements to try the workflow Drive prompt, Creator endorsement.

🎨 Image craft: Photoshop partner models + style refs

Image gen/editing for artists. New today: Photoshop’s partner models in‑app, fresh style packs and prompt recipes, and notable T2I models on Replicate. Excludes Sora items (covered as feature).

Photoshop adds Nano Banana and Flux Kontext Pro with free gens until Oct 28

Adobe Photoshop now ships partner models Nano Banana and Flux Kontext Pro for promptable photoreal edits, with a limited complimentary generation window through 10/28 Photoshop partner demo. The in‑app flow supports outfit swaps, POV/pose changes, add/remove objects, and rapid colorway visualization layered over classic masks and selections Angle and pose, Add or remove, Product color, with official overview here Photoshop page.

Hunyuan‑Image 3.0 arrives on Replicate; creators cite sub‑30s renders

Hunyuan‑Image 3.0 is now live on Replicate, with a PrunaAI infra collab touted for fast generation (creators report under‑30‑second renders) and strong prompt adherence; it’s also being positioned as #1 on LMArena for text‑to‑image Availability note, model page. This follows earlier visuals shared in a Mid‑Autumn branding set branding set.

Bagel unveils Paris, a decentralized‑trained open‑weight diffusion model

Bagel introduced Paris, described as the first decentralized‑trained, open‑weight diffusion model—trained across distributed computers without a central cluster—with an immediate try page for creators Model intro, model page. If the approach holds up in benchmarks, it could widen community participation in training while reducing single‑provider bottlenecks for image gen.

Cinematic Motion Scene prompt pack teaches motion‑blur tracking shots

A reusable “Cinematic Motion Scene” prompt shows how to sell dynamic tracking shots with motion blur across forests, wheat fields, flooded paddies, and desert combat—grounding urgency in lens‑true streaking and expressive skies Prompt pack.

- Over‑the‑shoulder fix: phrasing like “show the pov from his shoulder… see the side of his face very close” reliably flips perspective, addressing a common camera problem Shot request, Prompt solution.

Epic realistic anime prompt pack evokes Blood of Zeus intensity

A fresh prompt set for an intense, realistic anime style—think Blood of Zeus/Castlevania—details cinematic lighting, painterly cel shading, and battlefield framing, and is adaptable beyond Midjourney Prompt pack. Excellent for dramatic key art and consistent series looks.

Retro cyberpunk anime style ref (--sref 14094475) lands with Akira/GitS vibes

A new Midjourney style reference (--sref 14094475) blends retro‑realistic cyberpunk with cinematic action, channeling Akira, Ghost in the Shell, and Appleseed—dense neon atmospheres, hand‑drawn texture, and intense, tech‑melancholic tone Style id thread. Great for high‑energy key art and motion‑ready frames.

Anthropomorphic studio portrait recipe on Gemini using Nano Banana

A prompt template on Gemini with Nano Banana produces photoreal anthropomorphic studio portraits—wardrobe, accessories, sunglasses, and background cleanliness controlled in a single spec—handy for brandable mascots and poster‑clean hero shots Prompt recipe.

New children’s book illustration style pack teased with warm ink‑and‑wash textures

A Midjourney children’s book look—soft ink/watercolor textures, sketchy linework, warm palettes, and simple shapes—was previewed with multiple frames and will be shared to subscribers, useful for picture‑book spreads and character sheets Style preview.

🏭 Studio pipelines: Runway and LTX in practice

How studios build with creative AI (non‑Sora focus). Today: an Amazon Prime case using Runway, LTX ad workflows with shared prompts, and social repurposing with Pictory.

Amazon Prime’s House of David used Runway to hit budget and shave months

Director Jon Erwin says Amazon’s House of David leaned on Runway to achieve an outsized vision while staying on budget, cutting months of tedious VFX work from the schedule Runway case study. Following up on Broadcast VFX, Runway continues to surface in real studio pipelines beyond experiments.

For creatives, the takeaway is pragmatic: AI-assisted shots are graduating to episodic delivery, where deadlines and cost discipline matter as much as look.

A full spec ad made end‑to‑end in LTX Studio, prompt included

LTX Studio showcased a charming ad produced entirely in‑app by director and Ambassador Simon Meyer, sharing the full prompt so others can learn or adapt the workflow Full prompt. For designers and filmmakers, this is a practical template: image generation, iterative edits, and smart animation all contained within one tool—no hand‑off fatigue.

PictoryAI method turns one interview into a dozen social assets

Agorapulse featured Pictory’s workflow that repurposes a single interview into multiple platform‑ready clips and posts, leaning on text‑based editing and auto‑captioning to speed packaging without losing polish Podcast summary, with details in the episode page Podcast page.

- Edit via transcript, auto‑captions, and brand‑consistent templates to scale distribution across LinkedIn, Shorts, and blogs without re‑cutting from scratch.

- For small teams, this is a concrete content ops recipe: one recording in, many on‑brand artifacts out.

🎞️ Keyframe‑led animation with Hailuo

Hailuo keyframes and i2v workflows for stylized animation (distinct from Sora coverage). Today’s clips show end‑frame‑only animation, anime/live‑action hybrids, and episodic shorts.

Hailuo end‑frame‑only and first/last‑frame runs spread among creators

Creators are standardizing on Hailuo’s keyframe tricks that animate from a single end frame or between first/last frames for smooth, purposeful motion, with multiple fresh examples today. Following up on First–last transition coverage, new clips show end‑frame‑only animation and nuanced transitions in the wild End‑frame demo, a “Tokyo Tower Saga” scale shot built with end‑frame keyframes Tokyo Tower run, and a close‑up portrait transition framed as a Polaroid memory Close‑up transition.

Anime × live‑action hybrid chase made entirely in Hailuo

A Mad Max–inspired sequence blends anime styling with cinematic, live‑action motion using only Hailuo text‑to‑video, underscoring the model’s range for stylized realism and action readability Hybrid clip.

Cyberpunk noir ‘Neon Shadows’ threads moody, story‑driven beats in Hailuo

The “Neon Shadows” mini‑thread uses Hailuo to stage a moody cyber‑noir—rain, neon, and investigative beats—highlighting how keyframes can carry tone and narrative continuity, not just spectacle Noir thread.

Hailuo 02 nails high‑speed jets vs sea monster action prompt

A Hailuo 02 prompt showcases a fast aerial tracking run of fighter jets with a surprise kaiju reveal, indicating stable motion, horizon handling, and VFX‑style timing at speed Prompt example. Additional creator reels echo strong destruction and impact beats with Hailuo’s keyframe control Destruction set.

MeanOrangeCat Show drops Episode 7B built with Hailuo keyframes

Episodic production with Hailuo continues to mature as the MeanOrangeCat team releases Ep 7B, “The Purrfect Heist,” demonstrating consistent characters and repeatable shot grammar using keyframes Episode drop.

OpenArt × Hailuo Story Week spotlights intimate, window‑framed micro‑stories

Community curation around Hailuo continues: OpenArt’s Story Week highlights a creator’s reflective, single‑window narrative, underscoring how keyframes can foreground subtle emotion and pacing, not only action Community short.

🎙️ Voices and music pipelines for creators

Voice agents and music/sound tooling useful for film, ads, and games. Today features ElevenLabs’ open UI blocks, an enterprise voice deployment, and a Stable Audio 2.5 API deep dive event.

ElevenLabs open-sources UI blocks for voice/audio agents (22 components, MIT)

ElevenLabs released an MIT-licensed UI kit with 22 customizable components for building chat, transcription, music, and voice-agent interfaces, including a turnkey voice-chat component with built‑in state management Open-source components, Voice chat block. Following up on Agent Workflows (routing among sub‑agents), this gives creators a ready-made front end that accepts an Agent ID prop and ships as composable blocks for production apps Blocks gallery.

Sharpen chooses ElevenLabs as exclusive AI voice provider for contact centers

Contact-center platform Sharpen named ElevenLabs its exclusive voice provider, embedding agentic AI directly into the orchestration layer with caller history, sentiment, and escalation context for seamless human handoffs Partnership note. For creative teams, it’s a signal that real‑time voice agents are maturing into production systems that must juggle memory, turn‑taking, and reliability at scale.

Comfy hosts Stable Audio 2.5 API deep dive with Dadabots (Oct 7, 3pm PT)

ComfyUI is running a live deep dive on the Stable Audio 2.5 API with CJ Carr (Dadabots), streaming across YouTube, X, and Twitch at 3pm PT/6pm ET, focused on faster, more musical generation and practical pipelines Livestream promo, Deep dive note. Expect workflow examples that pair audio generation with node‑based tooling for film and ad sound design.

💡 Lighting realism and interactive 3D (Ray3)

Rendering and light behavior advances for filmmakers and 3D artists. Today’s Luma Ray3 clips emphasize true‑to‑camera motion, realistic light, and high‑fidelity faces/hair.

Ray3 close-up test shows lifelike face and hair under natural light

Luma shared a new Ray3 "Woman’s Face & Hair" clip that focuses on fine facial detail and hair response during motion, showcasing how lighting reads as camera‑true rather than CG. Following up on lighting demos that emphasized interactive light and reflections, today’s test turns to skin fidelity and strand behavior under natural light, in support of Luma’s photorealism claims. See the focused close-up reference in Face and hair test and the broader positioning of Ray3’s look and motion realism in Ray3 description.

🧩 Dev tooling and orchestration for creatives

Tools that wire models into creative workflows (excludes Sora updates). Today: MCP servers for multi‑model access, Google DeepMind’s computer‑use via Browserbase, and desktop UX notes.

Replicate’s MCP server plugs models into Codex/Claude/Cursor with CLI setup live

Replicate’s Model Context Protocol (MCP) server is available with docs that show how to discover, compare, and run models from the Codex, Claude, Cursor, and Gemini CLIs, among others, echoing OpenAI’s DevDay push for MCP as an “open standard.” See the stage slide and configuration steps in the docs and thread. DevDay slide Replicate MCP docs

For creative teams, this means you can script multi‑model A/Bs for image/video/music runs, wire evaluation passes, and keep the whole workflow in versioned CLI pipelines rather than ad‑hoc web UIs.
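A scripted A/B can be as small as the harness below. It is a generic sketch and does not call the Replicate MCP server or any real model API: `ab_compare`, the `runners` mapping, and the `score` function are all hypothetical stand-ins you would replace with your own wrappers and evaluation pass.

```python
from typing import Callable, Dict, List, Tuple


def ab_compare(
    prompt: str,
    runners: Dict[str, Callable[[str], str]],
    score: Callable[[str], float],
) -> List[Tuple[str, float]]:
    """Run one prompt through every runner and rank the outputs by score, best first."""
    results = [(name, score(run(prompt))) for name, run in runners.items()]
    return sorted(results, key=lambda item: item[1], reverse=True)
```

Keeping the runners and the scorer as plain callables means the same script can live in version control and be rerun whenever a model or prompt changes.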

DeepMind’s computer‑use model lands via Browserbase for UI automation

Google DeepMind’s state‑of‑the‑art computer‑use model is rolling out in partnership with Browserbase, enabling agents to operate real browsers—useful for automating repetitive creative ops like bulk uploads to CMSes, asset tagging across dashboards, and multi‑app publishing flows. Partnership note

Expect faster glue between model outputs and the places creatives actually ship (stores, socials, and DAMs), with fewer brittle per‑site scripts.

ElevenLabs ships open‑source UI kit with 22 blocks for voice and audio agents

ElevenLabs released “ElevenLabs UI,” an MIT‑licensed set of 22 components and examples for chat interfaces, transcription, music, and agentic voice apps—aimed at dropping a production UI into creative tools quickly. Components overview A ready‑to‑wire voice‑chat‑03 block includes state management; you pass an Agent ID and ship. Voice block demo Components docs

For storytellers and interactive designers, this trims the boilerplate around building talk‑to‑character demos, ADR scratch tracks, and voice‑directed edit assistants.

ComfyUI hosts Stable Audio 2.5 API deep dive with Dadabots today

ComfyUI is streaming a Stable Audio 2.5 API session with CJ Carr (Dadabots) on Oct 7 at 3pm PT across YouTube, X, and Twitch, highlighting faster and more musical generation plus how to wire it into node graphs. Livestream poster Session teaser The timing underscores Comfy’s rapid ecosystem expansion following Subgraph publish (one‑click reusable node packs).

Music editors and sound designers can expect recipes for prompt‑to‑stem workflows, batch cues, and post‑chain routing inside Comfy graphs.

Supabase MCP + Fusion agent demo jumps from mockup to live component

A short demo shows the Supabase MCP server working with the Fusion agent to transform a mockup into a backend‑connected component in one flow. Demo clip

While developer‑centric, this pattern maps cleanly to creative tooling—e.g., spinning up data‑bound shot lists, asset browsers, or review widgets from sketches without hand‑wiring every API call.

📅 Creator events, AMAs, and access perks

Meetups and opportunities relevant to creatives. Heavy events day: conference lineups, SF Tech Week gatherings, AMAs, and regional access offers.

Generative Media Conference unveils Oct 24 lineup: Katzenberg, BFL, a16z, DeepMind

Fal announced the speaker slate for its Oct 24 event in San Francisco, featuring Jeffrey Katzenberg, Black Forest Labs’ Andreas Blattmann, a16z’s Justine Moore, Kindred Ventures’ Steve Jang, and Google DeepMind’s Paige Bailey, among others Speaker lineup. This looks like the highest‑signal single‑day agenda for generative media founders and studio leads this month.

Google Türkiye offers 1‑year free Gemini Pro for 18+ university students (apply by Dec 9)

Google Türkiye is granting 12 months of free Gemini Pro access to all university students aged 18+, with applications open until Dec 9; creatives are urged to share with students in their networks Student offer. For film and design students, this removes a paywall for scripting, boards, and image ideas throughout the academic year.

Lovart hosts SF Tech Week panel on spatial design and AI, Oct 9

Lovart and Collov AI are hosting “Spatial Renaissance: Real Spaces to Visual World” on Oct 9 in San Francisco, with speakers from Meta Reality Lab, Shopify, Unity, Jobright, and Lovart’s CSO Sylvia Liu; sign‑ups are open Event details.

Expect cross‑discipline talks on how AI links space, design, and visual storytelling—useful for designers and creative directors planning mixed‑media pipelines.

SF Tech Week Startup Flash Sale offers $1M+ in limited‑time perks

A Tech Week Startup Flash Sale went live with $1M+ in perks from major AI ecosystem players like Anthropic and NVIDIA, available for a limited window Perks thread. For creative startups building with AI, this can materially cut experimentation costs on models and GPU credits.

ComfyUI livestream: Stable Audio 2.5 API deep dive with Dadabots and Stability (Oct 7)

ComfyUI is hosting a Stable Audio 2.5 API deep dive today at 3pm PT with CJ Carr (Dadabots) and Stability, streaming on YouTube, X, and Twitch Livestream poster, with a separate announcement pointing to the session Stream note. Following up on Subgraph publishing, this is a timely walkthrough for musicians and editors integrating AI audio into Comfy pipelines.

Fable Simulation hosts SF Tech Week salon on hard consumer/AI problems; RSVP open

Fable Simulation is running an invite‑only SF Tech Week gathering on Thursday focused on hard problems in consumer and AI; the post shares the RSVP link and agenda blocks, with details in the event listing RSVP page. This looks geared to founders and product‑minded creatives seeking peer feedback on new AI experiences.

Gemini + filmmaking AMA in GeminiApp Discord with Diesol (Wed 10/8, 11:30am PT)

Filmmaker Diesol will host a live Q&A on using Google Gemini for filmmaking inside the GeminiApp Discord on Wed 10/8 at 11:30am PT; join link provided in the posts Discord AMA and a follow‑up reminder Reminder post. Expect practical camera‑to‑cut workflows and promptcraft for pre‑vis and edits.

Leonardo.Ai invites creators to connect at Gamescom Asia (Oct 16–19, Bangkok)

Leonardo.Ai will be on the ground at Gamescom Asia in Bangkok and is inviting creators to RSVP for an exclusive networking drinks event with the team Event invite.

If you work in game art, trailers, or toolchains, this is a good venue to compare generative‑AI workflows with BD and solutions leads.

🗣️ Community pulse: craft vs slop, memes, and tips

Discourse that shapes creative practice (excludes product update news). Today features a longform ‘slop era’ reflection, dev‑tool humor, and prompt‑camera problem‑solving threads.

The ‘slop era’ debate: creators call for slower, human‑led work

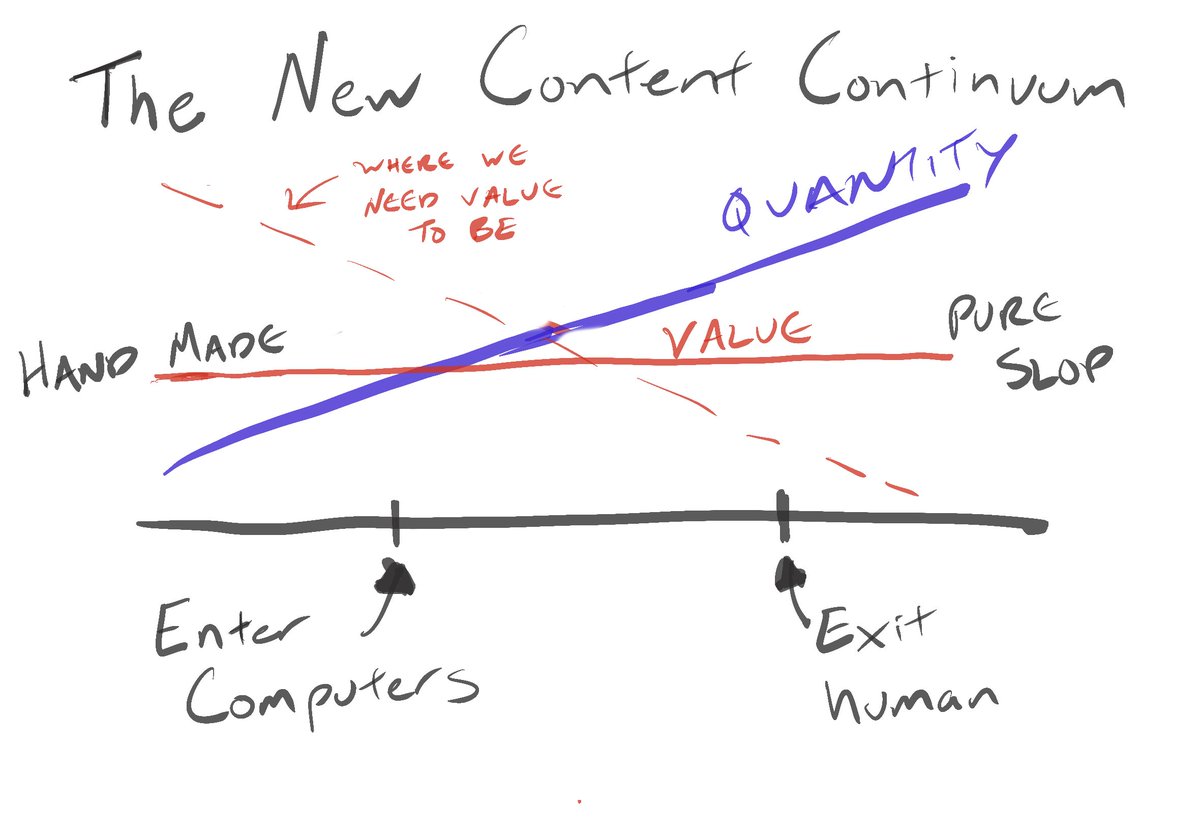

A widely shared reflection argues that AI video’s proliferation risks “slop,” and that lasting work still demands time, taste, and human intervention, illustrated with a hand‑drawn “New Content Continuum” sketch longform essay.

- The post contrasts dopamine‑driven feed content with handmade craft, urging audiences to reward intention and process, not volume longform essay.

Creators rebut The Oatmeal: AI art is still human choices

In response to a critique that AI art feels empty once its origin is known, a creator outlines the dense human decision‑chain behind strong AI work—from per‑word prompts and parameter dials to model selection and post in Photoshop/Lightroom/Topaz—arguing it’s still craft, just a new medium essay on AI art.

How to get a true over‑the‑shoulder shot with today’s models

A prompt‑craft thread tackles a classic coverage problem: consistently framing over‑the‑shoulder (OTS) and counter‑shots. One creator asks for a reliable method, and another shares a working phrasing that anchors POV beside the subject’s face to expose the scene target shot question, pov prompt fix.

- Discussion continues on how to achieve the reverse angle (true counter‑shot), underscoring limits of current controls and the need for explicit POV language counter‑shot reply.

Is Sora open yet? Code‑swap threads and access confusion persist

Creators keep asking whether a standard ChatGPT subscription now unlocks Sora, while others publicly request invite codes, highlighting ongoing ambiguity and peer‑to‑peer access bartering access question, code request, code help ask.

- Unclear onboarding and staggered partner rollouts add friction just as demand spikes among video artists and editors.

API hiccups stall Sora 2 experiments; creators ask for ETA

A prominent tester reports repeated Sora 2 API failures mid‑concept exploration and requests an ETA from OpenAI, underscoring fragile iteration loops for filmmakers trying to compare Sora 2 vs Pro api failures note.

Avoiding content filters with descriptive prompts (the wizard at a chasm)

Prompting around trademarks works: by replacing protected terms with clear, scene‑level descriptors (“old bearded wizard at a chasm…”) creators show Sora can generate the intended moment while respecting policy filters prompt workaround.

Tip: Upscale Grok Imagine with Topaz Astra for crisper physics

A practical workflow tip lands well: take Grok Imagine output and run it through Topaz Astra upscaling to punch up fine detail, with side‑by‑sides noting stronger water behavior and overall sharpness upscale comparison, following broader creator tests of Grok’s style range examples thread.

Will you use the Sora app or third‑party hosts? Community splits

A creator asks whether people will stick with the official Sora app now that multiple platforms host Sora 2, prompting debate over convenience, pricing, watermarking, and pipeline fit usage poll.

Agentic workflows, or just do the work? A meme draws laughs

A tongue‑in‑cheek jab asks whether agentic workflows can really automate everyday creative grind, capturing the mood that agents are promising but often over‑sold for nuanced craft agentic workflow meme.

- The joke reflects a broader theme in today’s threads: AI helps, but directorial decisions remain stubbornly human.

Meme watch: “average Cursor user” calls out 98.6 GB RAM bloat

A viral gag shows macOS’s Force Quit warning with Cursor allegedly chewing 98.59 GB, ribbing AI‑augmented dev tools’ hunger for memory and sparking lighthearted complaint threads among builders and artists memory bloat joke.

- Beyond laughs, it hints at a real friction: creative stacks that mix model UIs, IDEs, and upscalers can strain laptops during production.