Hailuo 2.3 lands on 6 platforms – try 4 free videos daily

Stay in the loop

Free daily newsletter & Telegram daily report

Executive Summary

MiniMax’s Hailuo 2.3 just went live across six creator hubs, and it matters because it finally pairs lifelike motion with a low‑friction onramp. Between 4 free videos a day on the official site and a 50% credit‑back on Runware for 30 days through Nov 28, teams can pressure‑test physics, faces, and on‑screen text without burning budget.

Leonardo AI carries the launch badge, while Replicate exposes an API for pipelines. fal ships Fast/Standard/Pro tiers so you can dial latency versus fidelity, and Higgsfield Popcorn is running a 7‑day unlimited blitz plus a 200‑credit DM drop alongside 70+ click‑to‑video presets for 720–1080p, 6–10s shots. GMI tosses $10 credits for shared outputs. Under the hood, 2.3 tightens human physics—flips, dance, and fluid body mechanics—sharpens logos and titles, and keeps faces and props consistent from beat to beat. Early tests even hold up under abuse: an FPV motorcycle cockpit spec’d like a Sony FX6, 24mm at 48 fps, lands convincing speed blur, flares, and depth without wobble.

If you’re slotting this into a finishing stack, pair Hailuo takes with Runway’s text‑guided object removal or Luma’s Ray3 HDR to keep tone and cleanliness in the final export.

Feature Spotlight

Hailuo 2.3 rolls out across creator platforms

Hailuo 2.3 goes wide (Replicate, fal, Runware; Leonardo partner), bringing cinematic realism, human physics, and VFX to more creators—lower cost, faster iteration, 4 free vids/day.

Big cross‑account push: MiniMax Hailuo 2.3 lands on multiple hubs with realism, physics and VFX—today’s dominant filmmaking story for shorts, ads, and music videos.

Jump to Hailuo 2.3 rolls out across creator platforms topicsTable of Contents

🎬 Hailuo 2.3 rolls out across creator platforms

Big cross‑account push: MiniMax Hailuo 2.3 lands on multiple hubs with realism, physics and VFX—today’s dominant filmmaking story for shorts, ads, and music videos.

MiniMax details Hailuo 2.3 and 2.3 Fast with realism upgrades and four free videos daily

Hailuo 2.3 focuses on movement, expression and physical realism, while a lighter 2.3 Fast balances quality and speed; MiniMax is offering four free videos per day to try it. Feature notes and the product page outline smarter text/logo rendering, better consistency and professional‑grade fidelity feature brief, and the official site is already live for runs product page.

fal ships Hailuo 2.3 with Fast, Standard and Pro tiers for cost/quality control

fal rolled out MiniMax Hailuo 2.3 with three speed/quality options, highlighting dynamic human motion and cinematic VFX for production‑grade shorts and ads fal rollout.

Leonardo AI named official launch partner for Hailuo 2.3

Leonardo AI says creators can try Hailuo 2.3 there first as MiniMax’s official launch partner, positioning the model for accessible, cinematic short‑form work in the creative stack partner announcement.

Replicate adds Hailuo 2.3 for API access with lifelike physics and VFX

Hailuo 2.3 is now deployable on Replicate, bringing improved human physics, fluid motion (flips/dance), stronger VFX, sharper text, consistent faces/objects, and tighter prompt adherence to API workflows platform launch.

Runware launches Hailuo 2.3 with 50% credit‑back for 30 days

Runware made Hailuo 2.3 available day‑0 and is returning 50% of usage in platform credits for the first 30 days (through Nov 28), making large‑scale testing cheaper for teams runware launch. Following up on auto motion blur, which showed precise motion control, this rollout adds an API‑ready path for those looks model catalog.

Hailuo 2.3 lands on Higgsfield Popcorn with 7‑day unlimited and 200‑credit blitz

Higgsfield Popcorn is featuring Hailuo 2.3 with a seven‑day unlimited run and a nine‑hour 200‑credit DM promo tied to retweets/comments, alongside 70+ click‑to‑video presets for 720–1080p 6–10s clips Higgsfield promo.

Early test shows Hailuo 2.3 nailing high‑speed FPV motorcycle realism

A creator’s FPV cockpit sequence—shot spec’d as Sony FX6, 24mm at 48fps with 500T grain—demonstrates Hailuo 2.3’s speed blur, depth, flares and tension under fast motion, hinting at stronger physical coherence for action beats motorcycle test.

GMI Cloud lists Hailuo 2.3 and offers $10 creator credits for shared outputs

GMI added Hailuo 2.3 to its lineup and is giving $10 in credits to creators who repost and comment their 2.3 creations, signaling more low‑friction trials across platforms GMI listing.

🎥 Veo 3.1: prompt blueprints, VFX and agentic tooling

Fresh creator workflows turn single clips into multiple cinematic scenes; experimental 360 styles and dialogue realism tests. Excludes Hailuo 2.3 (covered as feature).

Glif’s Master Prompter turns one clip into three Veo 3.1 scenes

Glif showcased an agentic “Master Prompter” that transforms a single real clip into multiple cinematic outputs with Veo 3.1, effectively automating scene reframing and VFX-driven looks for editors and VFX artists Master Prompter thread. The company frames this as part of a broader push toward creative AI agents that autonomously produce films Platform note, with hands-on access via its tool page Master Prompter agent.

Haimeta shows an 8s, three‑beat off‑road sequence with Veo 3.1 Fast

Haimeta users are testing a dynamic 8‑second Jeep Wrangler concept built as three timed beats (river splash, climb-out track, wide exit), specifying lens, FPS, lighting, and motion cues to steer Veo 3.1 Fast Prompt recipe. This follows Jeep 8s recipe that outlined a similar beat sheet; today’s run validates the structure on a third‑party platform and shows how granular metadata (e.g., golden‑hour flares, handheld vibration) maps to final motion.

Veo 3.1 nails a 360 wraparound ‘claymation’ aesthetic

A creator demoed a seamless 360 wraparound camera move in a claymation-like style using Veo 3.1, highlighting the model’s ability to blend aggressive camera language with stylized, stop‑motion textures—useful for title sequences and brand idents 360 demo.

Veo 3.1 vs Sora 2: community tests dialogue realism

OpenArt shared side‑by‑side dialogue clips to gauge which video model produces more natural, realistic conversation—an increasingly relevant test for filmmakers and advertisers pushing talking‑head and narrative work Dialogue comparison. Early feedback focuses on lip‑sync believability, micro‑expressions, and tone continuity across cuts.

Creators report first‑to‑last‑frame control in Veo 3.1

A creator highlighted “first frame – end frame” support, noting significantly better scene control and more intentional transitions in Veo 3.1—useful for preplanned cut points and storyboard-accurate beats Creator report. This strengthens timeline‑precise direction without resorting to heavy post fixes.

🧰 Finishing tools: Runway apps, object removal, HDR control

Practical post moves for ads and films: instant device mockups, text‑guided object removal, node‑based workflows, and HDR color control. Excludes Hailuo feature news.

Luma Ray3 brings HDR grading control to Dream Machine

Ray3 adds cinematic HDR fine‑tuning—depth‑preserving color and contrast across moving scenes—so tone and realism hold shot‑to‑shot in Dream Machine Feature brief. This lands nicely following up on Promo videos, where creators were already routing Boards images through Ray3 to finish promos.

Runway Workflows goes node‑based for end‑to‑end video builds

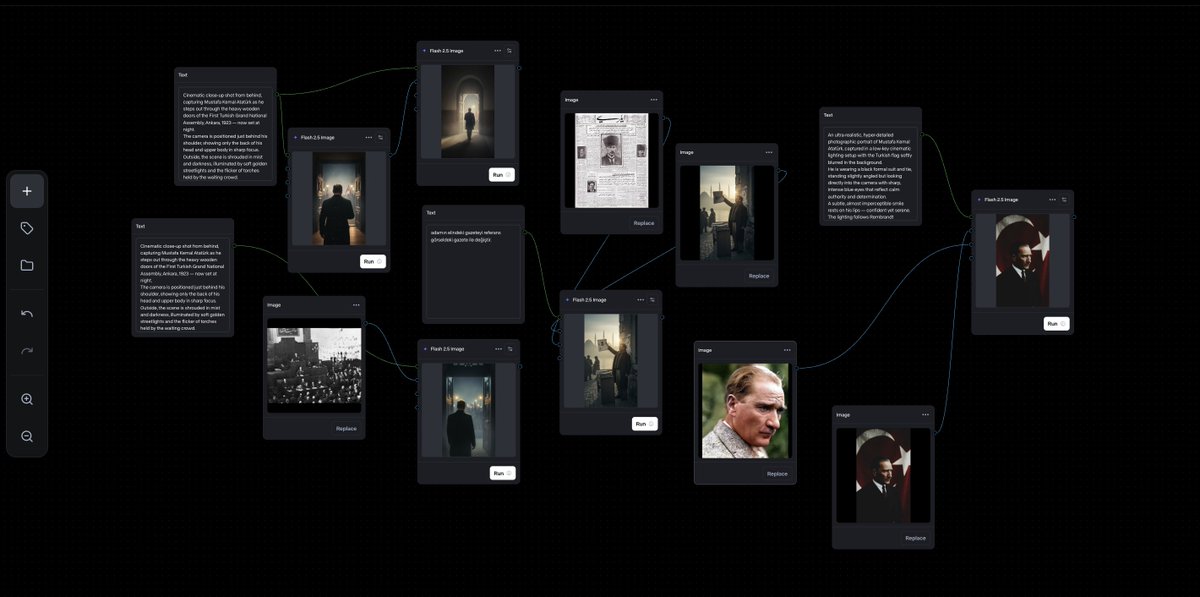

A shared project graph shows Runway Workflows chaining prompts, references and outputs into a fully composed historical short—consolidating shot prompts, portrait variants, and assembly in one reproducible canvas Workflow screenshot.

Runway Remove Object deletes distractions with a single prompt

A creator removed background people by simply typing “remove the people behind,” with no masking or rotoscoping—showcasing Runway’s text‑guided object removal for quick editorial cleanups in film and social workflows Object removal demo.

Runway’s Mockup app turns designs into instant device shots

Runway introduced Mockup, a drop‑in tool to wrap any design onto phones, laptops, billboards and more—upload, pick a preset (or prompt your own), export, done. It’s positioned as part of the Apps for Advertising collection and is available now App announcement.

🌀 Grok Imagine: cinematic moods, fourth walls, abstract poetry

Creators animate MJ stills into Grok films—characters notice the camera, painterly anime angles, and abstract shorts that read like visual poems. Excludes Hailuo feature.

Grok Imagine delivers a fourth‑wall tracking shot with camera‑aware character

A new Grok Imagine test shows a character (Alucard‑style) noticing the lens mid‑track—an intentional fourth‑wall beat that sells presence Creator demo. Following up on Camera language, which probed move/zoom phrasing, this clip suggests Grok can model gaze‑to‑camera continuity for POV, mockumentary, and horror pacing.

Low‑angle, painterly anime realism brought to life from MJ into Grok

A low‑angle, cinematic anime shot—first styled in Midjourney, then animated in Grok—shows controlled perspective and painterly shading that reads like hand‑drawn film Creator clip. The artist also shared the underlying Midjourney sref for this gothic look, handy for matching stills before animating Style reference.

80s OVA vibe: a goddess forging the sun, animated in Grok

Using MJ for stills and Grok for motion, a nostalgic 80s OVA‑style sequence shows a goddess forming a sun—bold lighting, cel‑shaded feel, and painterly highlights Creator demo. If you want the exact anime sref to match, see the shared Midjourney style post Style reference.

Kiss between two eclipses turns celestial metaphor into motion

An abstract short—“the kiss between two eclipses”—leans on Grok’s non‑literal motion to stage cosmic bodies as emotive actors, useful for title sequences and music visuals Creator short. The piece reads like visual poetry rather than plot, underscoring Grok’s strength at mood‑first animation.

The Infinite Kiss showcases Grok’s strength in abstract, loopable art

Another abstract vignette, “The Infinite Kiss,” demonstrates smooth, poetic motion without physical‑realism constraints—ideal for logo idents, interludes, or music visuals Creator short.

Creators say you can make a whole action movie with Grok Imagine

Creator sentiment keeps rising: “you can make a whole action movie with Grok Imagine,” a nod to the pace, continuity, and shot variety creators are already achieving for dynamic sequences Creator sentiment.

🖼️ Still-style recipes: MJ V7, srefs, and Nano Banana portraiture

A day rich in style guides—gothic anime srefs, collage params, minimalist line art, moodboards, and studio‑grade portrait prompts in Nano Banana.

MJ V7 style ref 500791745 nails cinematic gothic anime

Midjourney V7 gets a fresh style reference—sref 500791745—dialed for cinematic gothic anime with clean linework, advanced cel-shading, and painterly texture blends. This expands on the creator’s anime toolkit, following up on Neo‑noir sref where a similar MJ V7 reference was shared for a darker anime look. Style ref thread

MJ V7 collage recipe: chaos 12, 3:4, sref 2483353378, sw 500, stylize 500

A concise Midjourney V7 setup for expressive collage work lands: --chaos 12 --ar 3:4 --sref 2483353378 --sw 500 --stylize 500. Useful for creators seeking consistent framing with deliberate variation and style cohesion. Collage settings

Nano Banana studio portrait: 85mm, hard light, velvet noir styling

A studio‑grade Nano Banana prompt details an 85mm f/1.8 tight‑medium portrait with dramatic hard lighting, glossy accessories, visible pores, and couture styling (velvet, opera gloves, silver cross). It specifies negative prompts, background, and light placement for crisp, high‑contrast results. Studio recipe

Object‑lit dark portrait: single practical as the only light source

This Nano Banana recipe centers a handheld prop (lantern/lotus/sphere/rose) as the sole light source, casting upward glow and deep opposing shadows for moody chiaroscuro. It controls pose, framing, and DOF to keep the subject isolated in black. Object-lit prompt

Fashion editorial recipe: cherry‑red bob, golden‑hour rim, urban DOF

A street‑fashion Nano Banana prompt defines a sleek cherry‑red bob, black cutout top, denim, and gold‑accent accessories, shot as a medium full with shallow f/1.4 DOF. Golden‑hour rim lighting and ochre facades set the mood for editorial‑ready frames. Fashion prompt

Minimalist line‑drawing prompt for clean children’s clipart

A reusable prompt pattern produces simple, full‑body cartoon line drawings with no edge outline or shadows—ideal for children’s books and clipart sets. The structure emphasizes clear lines, soft lighting, and solid-color backgrounds for fast, consistent sheets. Prompt recipe

Moodboard Mondays drops retro‑futurist fashion profile (--p nuco5d2)

A new moodboard profile showcases a 12‑tile grid of retro‑futurist fashion portraits with glossy eyewear, metallic accessories, and punchy palettes, anchored by parameter --p nuco5d2 for creators to riff on. Moodboard post

🎧 Voices and narration for spooky season

Voice packs and narration techniques for storytellers: seasonal voices and a powerful spoken‑word Suno use case. Video dialogue model tests live in the Veo section.

ElevenLabs drops Halloween voice pack; ‘Handpicked’ Library spotlights seasonal voices

ElevenLabs introduced a Halloween Creatures & Characters collection tuned for ambience, tension, and character work, aimed at creators and storytellers planning spooky releases collection note. The Voice Library’s new Handpicked for your use case carousel makes it easier to discover themed voices, then design and share them with teammates for production workflows library tip.

Suno used for cinematic spoken‑word narration; creator shares prompt for thunderous delivery

A creator showed Suno can be steered beyond music into pure narration, sharing a Turkish spoken‑word prompt that starts calm and crescendos into a heroic, goosebump‑inducing climax—no backing track, just a deep, resonant male voice; they also stem‑split to isolate the vocal for editing prompt recipe.

🌍 Worlds and casts: generative engines and consistency

Tools for designing spaces and casts—procedural worlds, character sheets, and real‑time stylization. Higgsfield Popcorn’s consistency called out separately from Hailuo.

ComfyUI workflow builds consistent character sheets inside the node graph

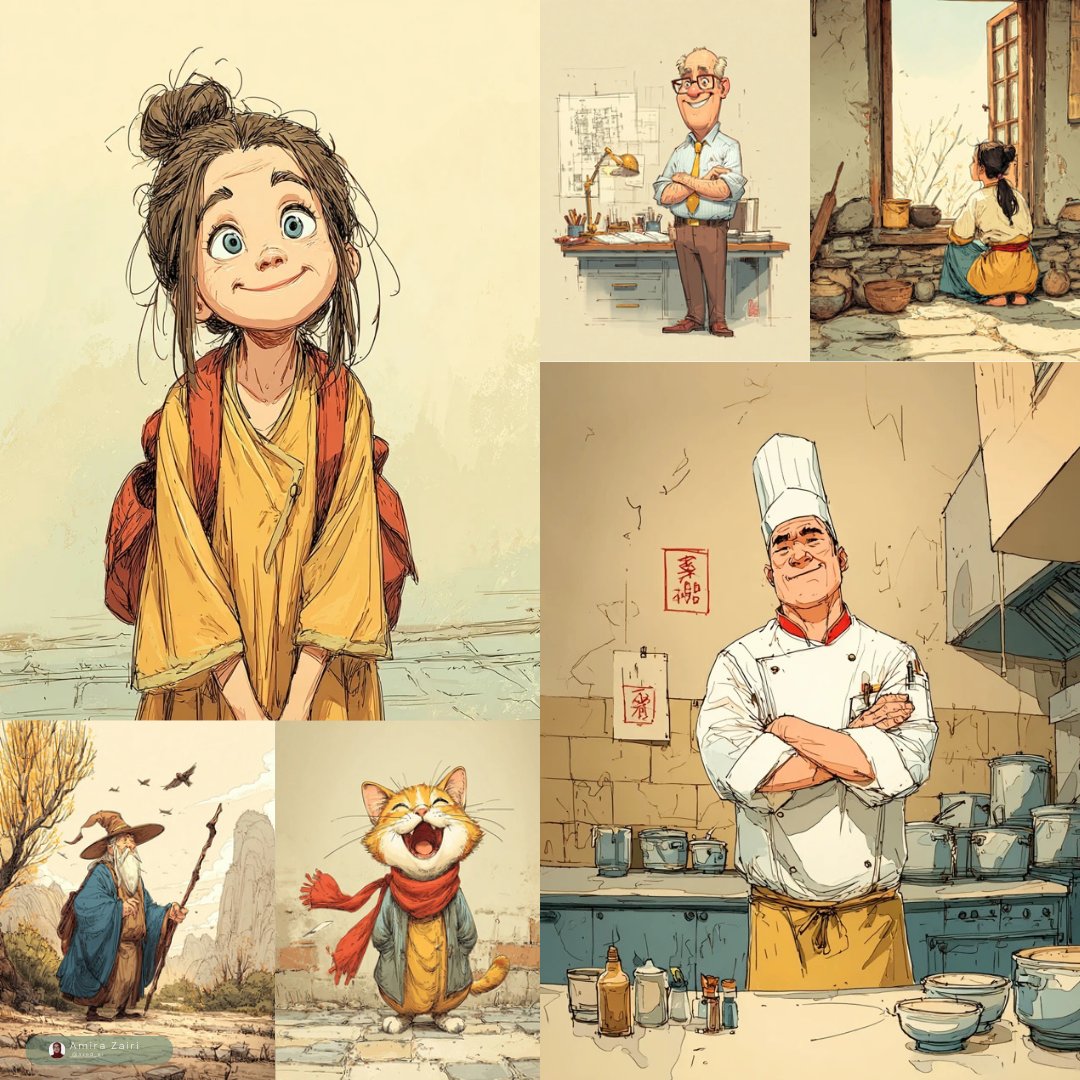

A ComfyUI showcase demonstrates end‑to‑end character sheets—multiple angles, outfits, and moods—directly from a node graph, helping keep faces, wardrobe, and palette locked for downstream animation or storyboards ComfyUI showcase.

Creators can iterate a cast rapidly while preserving continuity across shots.

BytePlus shows real‑time ‘digital claymation’ look with Seedance Pro

BytePlus highlights a real‑time, stop‑motion‑like “digital claymation” aesthetic—fast enough to iterate style and motion on the fly for shorts, ads, or music video visuals Claymation demo. Availability aligns with a Seedance 1.0 Pro Fast rollout on fal, promising 3× faster and ~60% cheaper generations for creators testing the look Fal rollout.

WorldGrow demos infinite 3D world generator for instant environments

WorldGrow surfaces a proof-of-concept for “Generating Infinite 3D World,” pointing to procedural, streamable spaces useful for previz, virtual production, and game block‑outs demo link. For creators, this hints at on‑demand locations that can be steered without manual level building.

Inkwell teases thin‑steered generative world engine for procedural stories

Inkwell is introduced as a "generative world building engine"—a thin AI layer steering procedural environments for storytelling and exploration engine tease. For writers and game makers, this suggests authored narratives that can flex across dynamic, system‑driven worlds without heavy bespoke scene building.

MJ V7 style ref 500791745 nails cinematic gothic anime consistency

A shared Midjourney V7 style reference (--sref 500791745) delivers a cohesive gothic‑cinematic anime look—clean outlines with painterly cel‑shadowing reminiscent of Castlevania/Vampire Hunter D—useful for keeping casts unified across frames before animating Style reference.

Pairing this sref with a video model can preserve character identity and mood shot‑to‑shot.

📚 Creative AI methods: control, correction, and sync

Fresh papers for production pipelines: reference‑video control, refining diffusion errors, auto‑aligned foley, and safer agent inputs.

ByteDance’s Video-As-Prompt turns any clip into a control signal for frozen video diffusion

Video-As-Prompt (VAP) uses a reference video to steer a frozen Video DiT via a Mixture‑of‑Transformers expert, enabling semantic, scene‑level control with strong zero‑shot generalization—no model finetune required paper summary. Following up on Video references, which showed practical style locking in tools, VAP pushes the concept into a principled method creatives can drop into pipelines to match movement, staging, and pacing from exemplars.

Foley Control auto‑aligns a frozen text‑to‑audio model to video for frame‑accurate SFX

Foley Control aligns a latent text‑to‑audio generator to picture so footsteps, cloth rustle, and impact sounds land where they should—synchronizing SFX to motion cues without bespoke training paper summary. For editors and sound designers, it means faster temp (or even final) foley passes that follow cuts and action beats while preserving tone via text prompts.

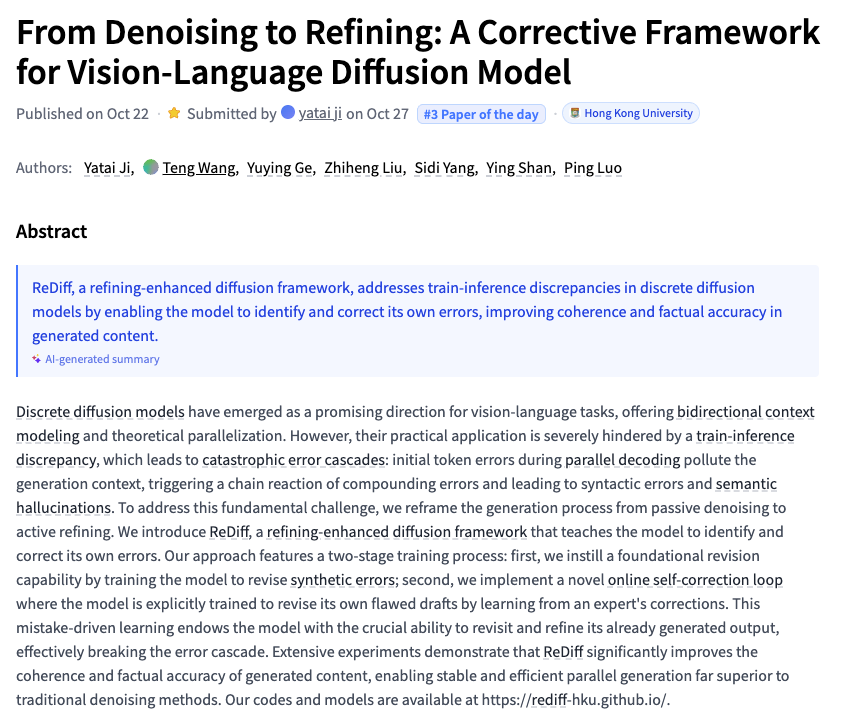

ReDiff proposes a corrective loop to catch and fix diffusion errors for truer, on‑brief visuals

ReDiff introduces a refining‑enhanced diffusion framework that detects discrepancies in discrete VLM diffusion and iteratively corrects them, improving coherence and factual alignment to prompts and references paper abstract. With two‑stage training plus an online self‑correction loop (and code/models reportedly available), this slots into art and shot‑planning workflows to reduce off‑model details without manual paintbacks.

Soft Instruction De‑escalation Defense sanitizes inputs to keep tool‑using agents from injection

The SIC method repeatedly inspects and cleans incoming data to tool‑augmented LLM agents, mitigating prompt‑injection and jailbreaking attempts before they trigger browser, file, or automation tools paper abstract. For creative assistants that scrape references or post edits on your behalf, SIC provides a guardrail layer without breaking natural language control.

🤖 Agentic coding for creatives

Open agent models and IDE signals that help creative coders wire apps and pipelines faster; complements, not duplicates, today’s video model launches.

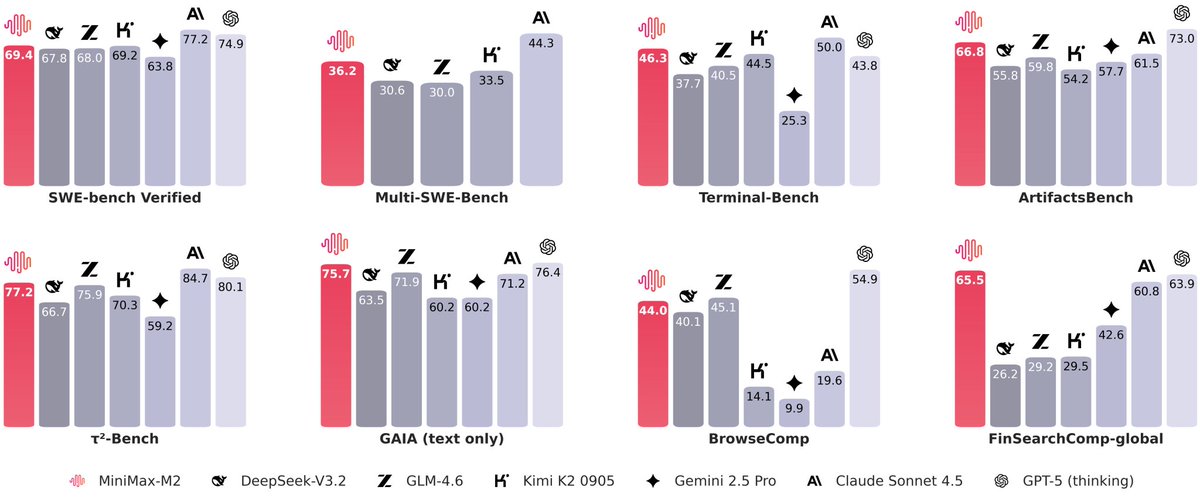

MiniMax open‑sources M2, a 230B MoE tuned for coding agents with strong evals and low cost

MiniMax M2 is now open‑sourced, pairing a 230B MoE (≈10B active) with standout coding/tool‑use scores and an aggressive pricing posture (~8% of Claude Sonnet, ~2× faster) launch claim, benchmarks chart.

- Benchmarks cited: 69.4% on SWE‑bench Verified and 75.7% on GAIA (text‑only), plus strong long‑horizon toolchain tasks like shell and browser benchmarks chart.

- For creative coders wiring agents, the combination of low cost, latency, and tool reliability makes M2 a practical backbone for IDE copilots, CLI chains, and browser‑driven research bots.

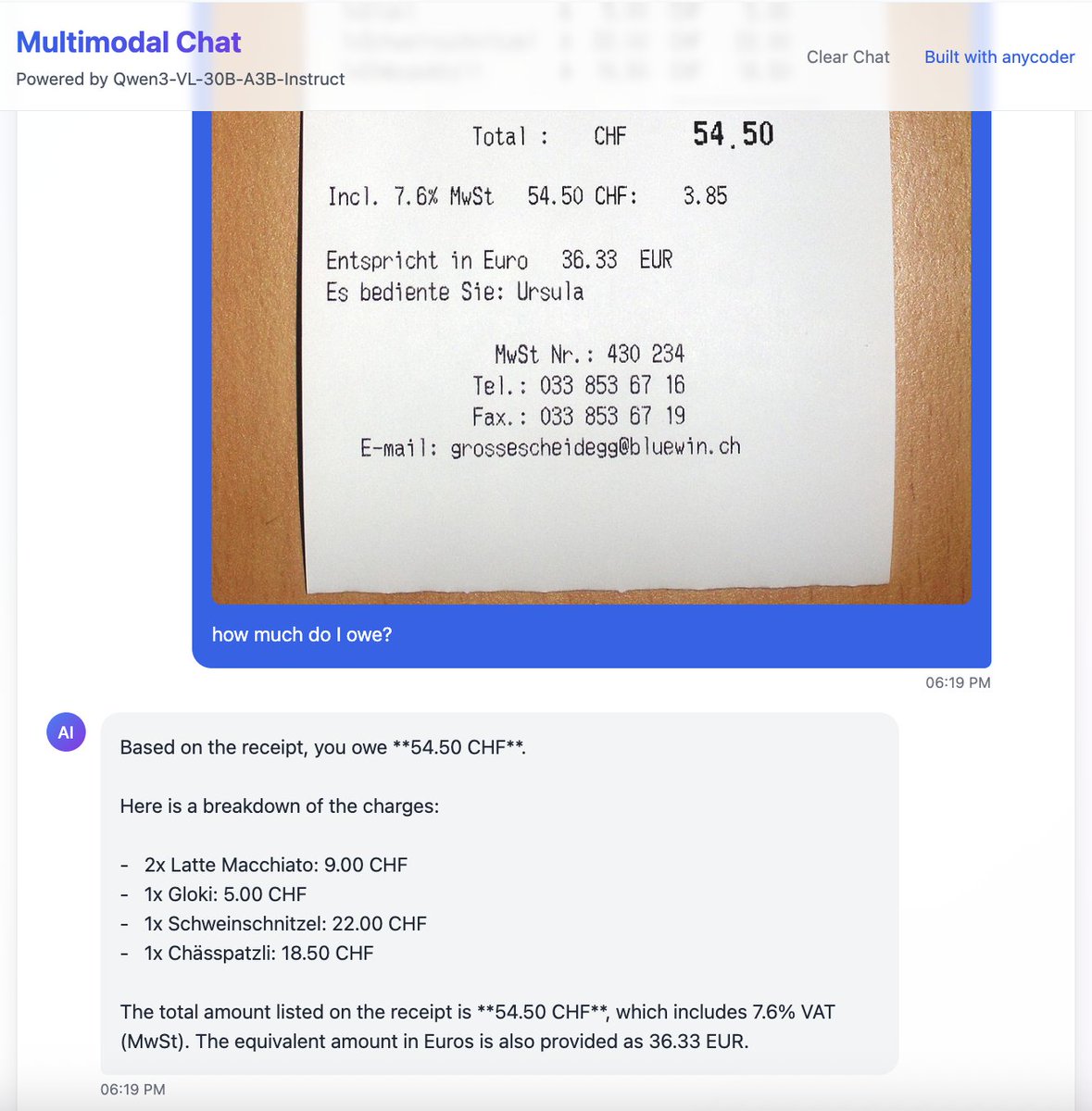

Anycoder shows MiniMax M2 vibe‑coding a React app, with live receipt parsing UX

Following up on Anycoder free, creators demo M2 inside Anycoder to “vibe‑code” an AI React app, including a chat that parses a photographed receipt and itemizes totals demo thread.

- This showcases agent‑style loops (vision→parse→explain) inside a familiar dev flow, hinting at faster idea‑to‑UI pipelines for indie tools and creative utilities.

DeepAgent paper proposes an end‑to‑end reasoning agent with memory folding and RL

A new paper introduces DeepAgent, an end‑to‑end deep reasoning agent that handles thinking, tool discovery, and action execution using memory folding and reinforcement learning, reporting wins over baselines on tool‑use and application tasks paper page.

- For creative automation, the design targets longer chains with less hand‑crafted routing, potentially simplifying multi‑tool studio workflows (e.g., fetch→generate→edit→publish).

Cursor 2.0 teased: a bigger swing at AI IDE workflows for creative coders

Cursor hints that 2.0 is “coming soon” with the tagline “one to infinite. infinitely simple,” signaling a deeper push into AI‑native coding flows that many creative devs already rely on for rapid prototyping teaser post.

- Details are sparse, but expect upgrades in project‑scale assistance and multi‑file reasoning that matter for app‑plus‑pipeline builds.

Soft Instruction De‑escalation Defense aims to harden tool‑augmented agents against prompt injection

Researchers propose SIC, an iterative prompt sanitization loop that repeatedly inspects and cleans incoming data to prevent prompt injections in tool‑using agents paper summary.

- As creatives connect agents to browsers, files, and APIs, SIC‑style guardrails can reduce jailbreak risks without over‑blocking legitimate instructions.

🎃 Creator events: MAX week and Halloween bounties

Competitions, livestreams, and seasonal giveaways for creators building today. Product launches like Hailuo sit in the feature; this is pure community/actionable perks.

Adobe MAX: Promise Studios to host two sessions and a shorts screening tomorrow

Promise Studios has two Adobe MAX appearances tomorrow: Diesol on Creative Park Discovery (4:30–5:30pm) and a Creator Stage panel and shorts screening at 5:30–6:30pm with MetaPuppet and others session details showtime note. Expect new short premieres plus Q&A—useful for directors testing AI-first pipelines.

Hedra Halloween: free pro guide and 1,000 credits for first 500 creators

Hedra is running a Halloween push with a free expert guide sent via DM to anyone who follows, RTs, and replies “Hedra Halloween,” plus 1,000 free credits for the first 500 people who make a video with any model and share it under the same tag campaign details. This is a fast, tactical way for filmmakers and social teams to test multiple spooky concepts without budget friction.

APOB’s Revideo Halloween challenge: $500 top prize, free credits for entrants

APOB’s Halloween Creator Challenge runs Oct 27–Nov 5 with a $500 top prize, runner‑up awards, and 5,000‑credit participation rewards; winners are announced Nov 7 challenge poster. Creators can request 800 bonus credits to produce entries and must post shorts on TikTok/YouTube/Instagram/X with specified tags Credits form Submission form.

Higgsfield Popcorn promo: 7 days unlimited, plus a 200‑credit DM drop (9‑hour window)

Higgsfield announced a creator push featuring Minimax Hailuo 2.3 with seven days of unlimited generations and a 200‑credit DM giveaway for nine hours if you RT and comment “higgsfield hailuo 2.3” promo details. Their feed also highlights character consistency (1 face, 8 frames, 0 drift), relevant for storyboarding and continuity consistency example.

Vidu opens all Halloween templates for free through Nov 1

Vidu is giving everyone free access to its Halloween template library until Nov 1, so creators can spin up spooky photos and videos in a tap for seasonal campaigns promo details. Perfect for last‑minute posts and testing multiple styles without cost.

Dor Awards reveal winners with judges’ commentary video

The Dor Brothers announced their competition winners in a recap video featuring judges like Grimes, Oliver Tree, Logan Paul, Beeple, and more winners video, following finale countdown that teased the results. If you’re scouting standout AI shorts or talent, the clip consolidates the top entries and judge notes in one place.

Freepik live session: Paige Piskin turns one selfie into a full fashion campaign

Freepik’s Inspiring Sessions returns on Thursday at 7 P.M CET, showcasing a selfie→campaign workflow with Paige Piskin—handy for social marketers and brand designers looking to scale shoots from minimal inputs session teaser save the date. Watch it live on YouTube YouTube livestream.

Hailuo community activations: post‑ICCV Halloween party and $10 creator credits on GMI

MiniMax (Hailuo AI) is hosting a post‑ICCV Halloween party with GMI Cloud party invite, and GMI is offering $10 in credits to users who repost and comment with their Hailuo 2.3 creations credit giveaway. A low‑friction chance to test the model, showcase work, and meet the community.

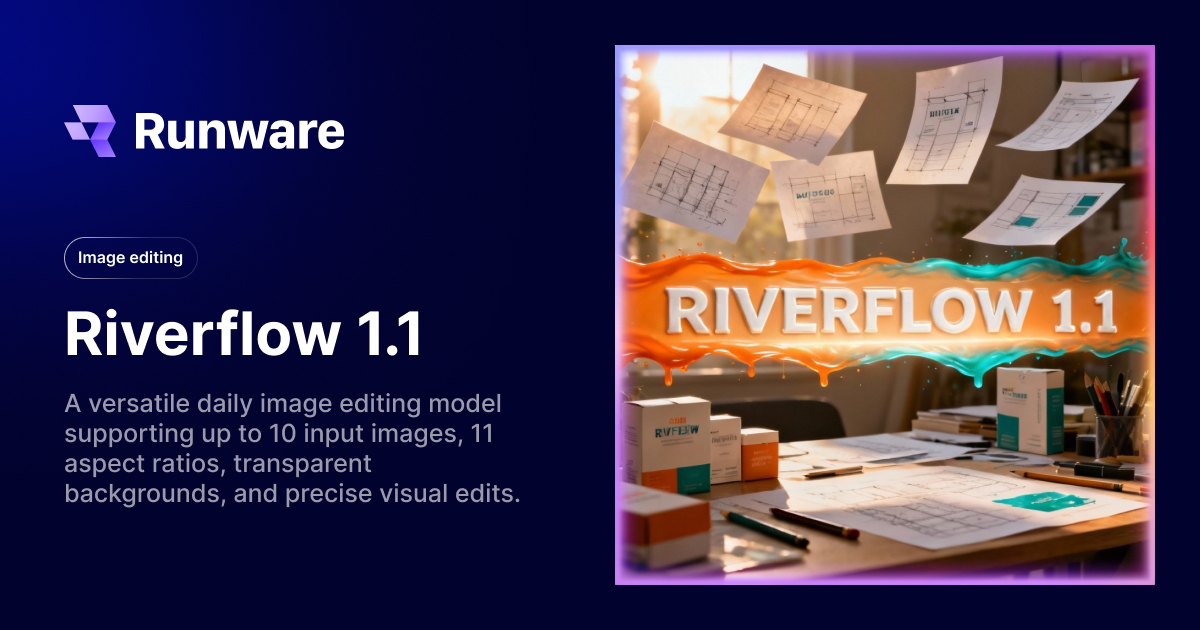

Runware announces $1,000 Riverflow image‑editing challenge (faster, cheaper v1.1)

Runware is running a $1,000 challenge around its Riverflow editor while shipping v1.1 with <40s edits and costs as low as $0.03025 per image challenge post. Creators can launch the model in the Runware hub and submit entries before week’s end Models page.

ImaStudio × MIT AI Film Hack invites prompt writers and filmmakers worldwide

A global AI Film Hack co‑run by ImaStudio and MIT is live, calling for creators whose prompts can shape new cinematic workflows; participants are already sharing test clips made during the hack hack mention. This is a good venue to prototype AI‑assisted directing and editing techniques.

📈 Market and eval signals for makers

A few broad signals creators may care about: olympiad‑grade multimodal evals, shifting API shares, and a real‑time model tease. Creative tool launches sit elsewhere.

Anthropic and Google set to overtake OpenAI in enterprise LLM APIs by 2025

A Menlo Ventures chart projects OpenAI’s enterprise LLM API share sliding from ~49% (2023) to ~24% in 2025, while Anthropic rises to ~30% and Google to ~18%—a shift that could change pricing, rate limits, and model access for studios and agencies building on these stacks Market share chart.

For creatives and production teams, diversifying vendor integrations (Anthropic + Google) may hedge roadmap risk and improve access to best‑fit models across safety, long‑context, and multimodal needs.

HiPhO benchmark puts 30 multimodal LLMs through Olympiad physics

The HiPhO benchmark evaluates 30 (M)LLMs on 360 problems across 13 physics olympiads, with P1‑235B‑A22B + PhysicsMinions at 23.2 and Gemini‑2.5‑Pro at 22.7, still below top human performance (29.2). Authors note models struggle most with diagrams and embodied reasoning; experimental tasks are excluded Benchmark snapshot.

Creators working on STEM explainers and edu content should expect solid reasoning on structured problems but plan for human oversight on diagram‑heavy steps and lab‑style tasks.

Odyssey‑2 real‑time interactive video is set to drop today

Odyssey signaled a launch for Odyssey‑2 at 10am PT, positioning it as an interactive, real‑time video model—promising lower latency feedback loops for directors, animators, and live performers Launch tease, Team announcement. If performance holds, this could enable on‑set iteration and reactive storytelling without offline renders.

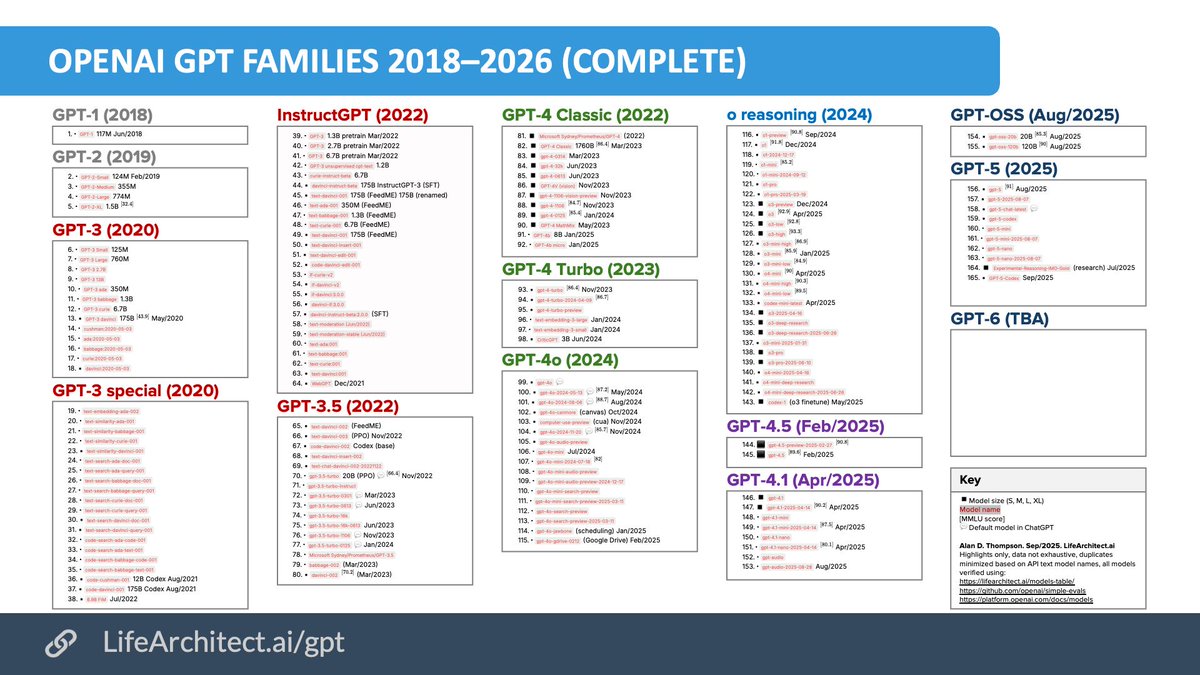

A mapped catalog shows 165 OpenAI text models since 2018

A compiled chart tracks 165 models across GPT‑1→GPT‑6 families (2018–2026), underscoring specialization and rapid cadence. For teams selecting stacks, it’s a reminder to pin versions and document migrations to avoid silent behavior drift in production pipelines Model families chart.

Expect more niche variants (thinking, tool‑use, long‑context) and coordinate with vendors to lock reproducible behavior for creative workflows.

🔎 Research helpers: Grokipedia v0.1 goes public

Grokipedia soft‑launch draws curiosity and confusion; could aid story research with 885k+ articles. Purely a resource note—no overlap with video features.

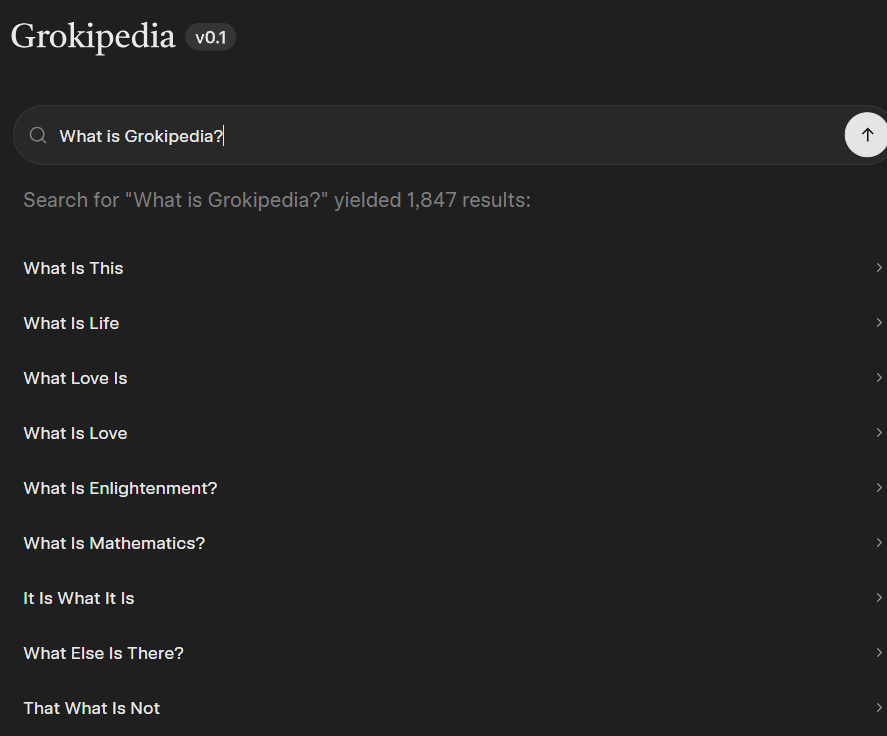

Grokipedia v0.1 goes live with 885,279 articles

xAI quietly switched on Grokipedia v0.1, exposing a minimalist research search that lists 885,279 articles—handy for quick background, lore, and reference pulls when outlining stories or pitches Live screenshot.

For creatives, this looks like a lightweight alternative to general web search for fast, citation‑ready context in treatments, animatics, and world guides.

Day‑one quirks: inconsistent access and a self‑query miss

Early visitors report inconsistent access (“Is Grokipedia real? I can’t access it.”), suggesting a limited or staggered soft‑launch Access question. One screenshot shows a humorous gap where searching “What is Grokipedia?” returns unrelated results—very v0.1 behavior Self-query screen.

Expect index growth and query tuning; for now, treat it as an early, sometimes‑finicky reference tool.

Early creative sentiment: “better than expected” for quick research

Despite the rough edges, an early tester called Grokipedia “better than I expected,” hinting at useful retrieval quality for story and production research Early endorsement. If you can reach it, the clean UI and visible article count make it a fast place to sanity‑check terms, timelines, and references before you commit to deeper dives Live screenshot.

📣 Social growth: thumbnails and auto‑summaries

Actionable growth moves for creators—thumbnail systems that drive views and a new auto‑summarizer for repackaging long videos into short highlights.

6.5M impressions in 12 days with AI‑built thumbnails

A creator hit 6.5M impressions in 12 days by leaning on fully AI‑generated thumbnails and visuals, sharing a step‑by‑step you can copy using Higgsfield thumbnail thread. If you’re testing systems, note Higgsfield’s preset‑driven workflows and short 720–1080p loops can seed multiple thumbnail variants quickly model promo.

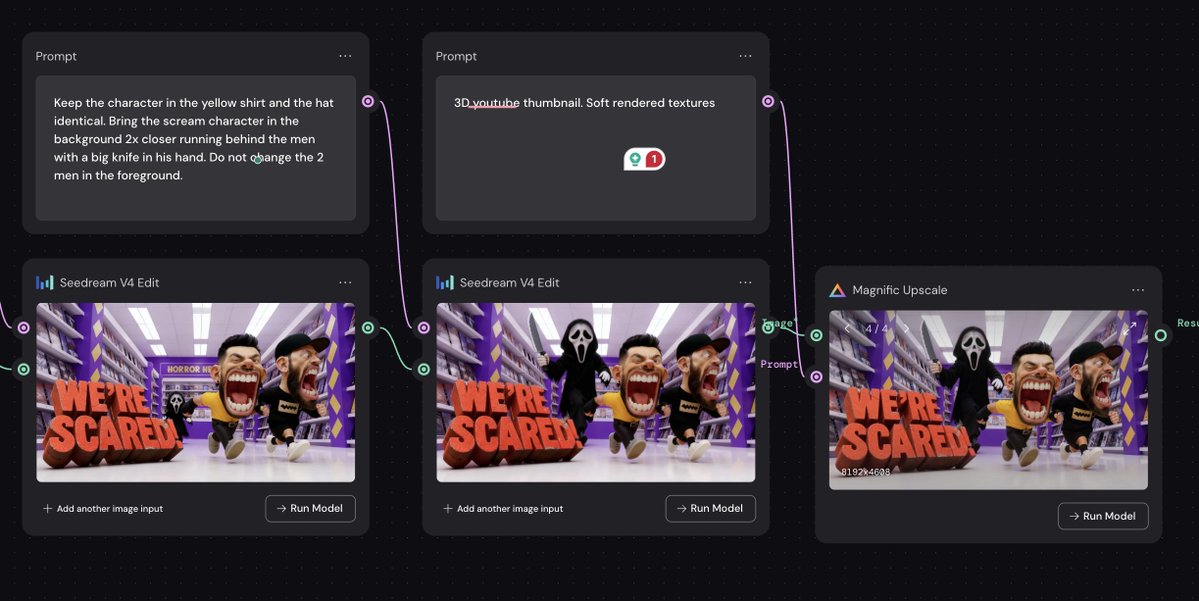

A reproducible Weavy → YouTube thumbnail workflow you can steal

Ror_Fly shared a practical YT thumbnail assembly: create base characters, composite them, iterate scenery, then add text and upscale/flatten—with a final pass to rebalance background figures for clarity workflow steps. The toolchain blends Weavy with Nano Banana/SeedEdit, Bria (BG removal), Compositor (canvas), and Recraft/Magnific for upscale; a follow‑up shows the exact node path and output before/after tweaks workflow screenshot.

Pictory adds one‑click Summarize Video for social highlights

Pictory rolled out Summarize Video to auto‑condense long clips into short, shareable highlight reels for social, marketing, or client updates feature post. The feature is live now in the app, streamlining repackaging without a manual edit timeline product page.