Nano Banana 2 on Higgsfield locks identity – Gemini 3 edits, 65% off

Executive Summary

Nano Banana 2 is now on Higgsfield with physics‑locked, zero‑drift characters and an “Edit with Gemini” flow that tweaks backgrounds and lighting without re‑rolling. There’s a 65% discount for a three‑day window, so it’s a good week to test identity‑safe video work.

Creators are posting same‑face, same‑body continuity across shots, plus momentum, weight, and camera shake that track prompts. Clips also show simulated studio lighting and coordinate‑aware scene builds; posts say the edit layer runs on Gemini 3, keeping the hero intact while you swap scenes.

Practical tip: batch 3–5 runs and compare frames to verify drift, and specify key‑light position/intensity to keep speculars consistent. Goodbye, “why did her face change again” reshoots.

Following last week’s Gemini–Nano Banana pairing sightings, what’s new is live editing inside a host platform rather than a separate app. Separately, Higgsfield says its team is forgoing November pay and keeping the 65% window open after acknowledging its tech displaced work for 100k+ artists. Benchmark now, but keep the broader context in mind.

Feature Spotlight

Nano Banana 2 on Higgsfield: physics‑locked characters

Nano Banana 2 claims physics‑locked, zero‑drift characters with studio‑grade lighting and Edit‑with‑Gemini, landing on Higgsfield with 65% off—potentially redefining consistency for character‑driven visuals.

Cross‑account posts push Nano Banana 2 on Higgsfield with a 65% off window. Creators claim true physics, simulated lighting, and zero‑drift identity lock, plus “Edit with Gemini.” Mostly creator demos and promos today.

🍌 Nano Banana 2 on Higgsfield: physics‑locked characters

Nano Banana 2 on Higgsfield claims physics‑locked, zero‑drift characters

Creators are showing Nano Banana 2 generating the same face and body across shots, with “physics‑locked” movement and no identity drift on Higgsfield’s platform during a 65% off window Character lock demo. For action work, posts also highlight momentum, weight, and even camera shake following prompts—useful for consistent UGC and character IP Physics engine notes.

- Test your hero consistency: batch 3–5 runs and compare frames to confirm drift behavior.
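One rough way to run that comparison is a pixel-difference check. The Python sketch below is a minimal illustration. It assumes each run has been exported as an aligned, same-size grayscale crop of the character's face, stored as nested lists of 0–255 values. The `flag_drift` helper and its default threshold of 12 are hypothetical and are not a Higgsfield feature. Pixel differences also shift with pose and lighting, so treat flagged pairs as candidates for a visual check rather than as proof of drift.

```python
"""Crude identity-drift check across batched runs (illustrative sketch).

Assumes every run was exported as an aligned, same-size grayscale crop of the
character's face, stored as a 2D list of 0-255 ints. The default threshold
is a hypothetical starting point, not a Higgsfield setting.
"""
from itertools import combinations


def mean_abs_diff(a: list[list[int]], b: list[list[int]]) -> float:
    """Average per-pixel absolute difference between two equal-size crops."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("crops must have identical dimensions")
    count = sum(len(row) for row in a)
    if count == 0:
        raise ValueError("crops are empty")
    total = sum(abs(pa - pb) for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))
    return total / count


def flag_drift(
    crops: dict[str, list[list[int]]], threshold: float = 12.0
) -> list[tuple[str, str, float]]:
    """Return each pair of runs whose crops differ by more than `threshold`."""
    flagged = []
    for (name_a, crop_a), (name_b, crop_b) in combinations(crops.items(), 2):
        score = mean_abs_diff(crop_a, crop_b)
        if score > threshold:
            flagged.append((name_a, name_b, round(score, 2)))
    return flagged


if __name__ == "__main__":
    runs = {
        "run1": [[100, 120], [130, 140]],
        "run2": [[102, 121], [129, 141]],  # near-identical to run1
        "run3": [[160, 60], [200, 90]],    # clearly different face crop
    }
    print(flag_drift(runs))  # [('run1', 'run3', 60.0), ('run2', 'run3', 60.25)]
```

A face-embedding model would give a sturdier identity score. The pairwise loop stays the same if you swap `mean_abs_diff` for an embedding distance.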

“Edit with Gemini” for Nano Banana 2 is live on Higgsfield

Higgsfield users can now swap backgrounds, lighting, and other scene elements on Nano Banana 2 outputs without re‑rolling—an “Edit with Gemini” flow that keeps identity locked and speeds versioning Feature description. Posts also say it runs on Gemini 3 Pro under the hood Powered by Gemini mention.

- Route small fixes through Edit with Gemini first; re‑roll only when composition must change.

Higgsfield staff forgo November paychecks; 65% off window stays open

Higgsfield says the team is giving up November salaries after acknowledging its tech displaced work for 100k+ artists, while keeping prices cut by 65% for a three‑day window Apology and 65% video, following 65% off BFCM promos earlier in the week. A separate note highlights the extension of Black Friday deals Extension note.

- If you paused trials on cost grounds, this is the week to benchmark Nano Banana 2 end‑to‑end.

Nano Banana 2 touts simulated, studio‑grade lighting on Higgsfield

Multiple clips claim the model “doesn’t guess light—it simulates it,” yielding studio‑grade illumination and more believable reflections during the sale period Lighting claim. A short reel shows object motion with highlight changes and flares that track geometry, supporting the photometric pitch Lighting physics clip.

- When matching a plate, specify key light position and intensity; check speculars across frames.

Coordinate‑aware scene builds: Nano Banana 2 maps real locations

A demo shows a wireframe transforming into a richly lit street scene, with the claim that Nano Banana 2 can map coordinates into accurate streets, angles, and lighting—promising for location‑based story beats and pre‑vis Coordinate scene demo.

- For B‑roll, try geo‑anchored prompts (cross streets, time of day) and compare to reference plates.

🎬 Shot control: Multi‑Frame, Start/End, and 10‑shot flows

Controllable sequencing and transitions land across Dreamina, Kling, Vidu, and PixVerse. Excludes the Nano Banana 2 rollout (covered as the feature).

Dreamina’s Multi‑Frame stitches up to 10 images with controllable transition timing

Dreamina introduced Multi‑Frame, a shot‑control workflow that lets you upload up to 10 stills, set each transition’s duration, and guide the whole sequence with a text prompt—useful for music videos, explainers, and mood reels Feature teaser.

For creative teams, this means you can pre‑board a sequence in images, then tune pacing without re‑rolling entire clips.
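Pacing a sequence is mostly cumulative arithmetic. The sketch below rests on a hypothetical timing model: each still acts as a keyframe, and the clip spends exactly its set transition duration moving to the next still, with no extra hold. Dreamina's actual timing model isn't described in the post. `keyframe_times` and `offsets_from_beats` are illustrative helper names only.

```python
"""Pre-plan when each still lands in a Multi-Frame-style sequence.

Hypothetical timing model: each still is a keyframe, and the clip spends
exactly the chosen transition duration moving to the next still, with no
extra hold. Dreamina's real behavior may differ.
"""
from itertools import accumulate

MAX_STILLS = 10  # the upload limit mentioned for Multi-Frame


def keyframe_times(transition_s: list[float]) -> list[float]:
    """Return the time (seconds) at which each still is reached."""
    if len(transition_s) + 1 > MAX_STILLS:
        raise ValueError(f"at most {MAX_STILLS - 1} transitions ({MAX_STILLS} stills)")
    if any(d <= 0 for d in transition_s):
        raise ValueError("transition durations must be positive")
    return [0.0] + list(accumulate(transition_s))


def offsets_from_beats(times: list[float], beats: list[float]) -> list[float]:
    """Signed gap (seconds) from each keyframe to its nearest music beat."""
    return [min((b - t for b in beats), key=abs) for t in times]


if __name__ == "__main__":
    times = keyframe_times([1.0, 2.0, 0.5])
    print(times)  # [0.0, 1.0, 3.0, 3.5]
    print(offsets_from_beats(times, [0.0, 1.0, 2.0, 3.0, 4.0]))  # [0.0, 0.0, 0.0, -0.5]
```

For example, transitions of 1.0, 2.0, and 0.5 seconds place four stills at 0.0, 1.0, 3.0, and 3.5 seconds. Checking those times against a song's beat grid shows which cuts need nudging.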

Kling 2.5 Start/End frames arrive on OpenArt for clean loops and morphs

Kling 2.5’s Start & End frame control is now live on OpenArt, enabling precise loops, morphs, and planned camera moves from a defined first/last frame OpenArt video tool. Following up on Start/End frame landing on fal/Runware, this widens access for creators.

Practical upshot: storyboard a hero frame, lock the button, and iterate motion without drifting identity.

Vidu adds 10‑image video chains, video‑extension upscaling, and RufHub search

Vidu rolled out 10‑image video creation with seamless transitions, an upscale option for extended shots, and RufHub search across Community, Library, and Reference; an Enterprise plan discount (up to 20%) and a 24‑hour giveaway of 500 credits were also flagged Feature brief.

Good for fast animatics: chain 6–10 boards, refine beats, then upscale only the segments you keep.

PixVerse V5 demo shows auto‑generated, scene‑fit sound effects on video

A PixVerse V5 clip highlights automatic sound effects that match the on‑screen action, hinting at audio pass‑throughs that reduce manual SFX spotting for short‑form edits Feature note.

If consistent, this trims turnaround on sports and UGC clips where foley is usually an afterthought.

Dreamina hints at deeper keyframe control after a 10‑keyframe tool scored 9.5/10

Dreamina teased upcoming control features after Gemini 3 reportedly rated a “10‑keyframe video tool” 9.5/10, framing 10 controlled frames as effectively “directing a scene” Creator comment.

Expect more per‑beat control (not just transitions), which matters for matching music cues and camera grammar.

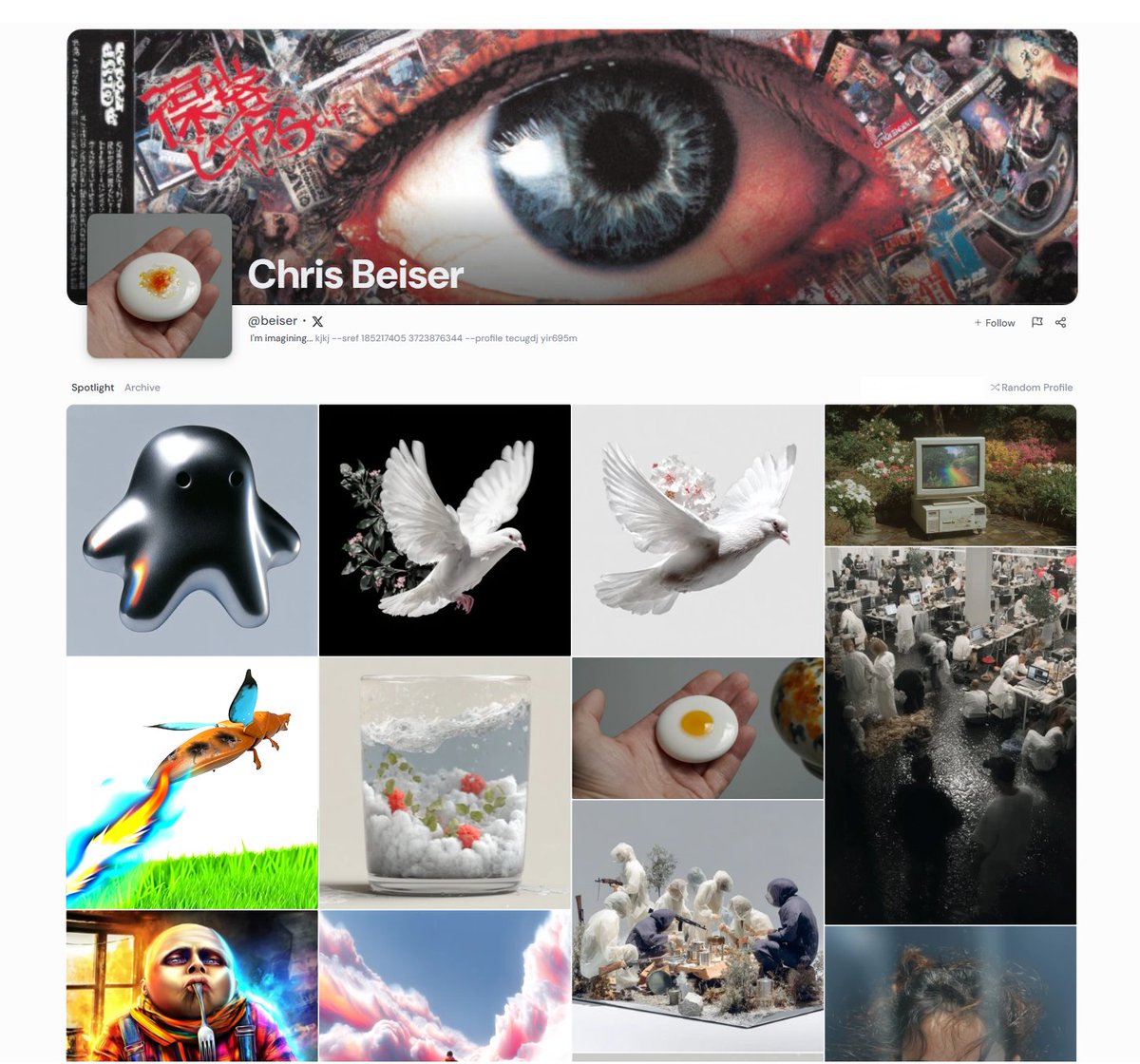

🪪 Midjourney gets profiles and fast‑hour perks

Midjourney ships public profiles (usernames, banners, follows). Creators rush to claim handles; >8 spotlights in 24h yields 5 free fast hours. Style prompts covered separately today.

Midjourney rolls out public profiles and a 5‑hour fast perk

Midjourney turned on public profiles—usernames, banners, follows, and social links—and is offering 5 free fast hours if you complete a profile and add more than eight spotlights within 24 hours Launch details. Creators are already claiming handles and sharing their pages, signaling a quick shift toward a more social discovery layer inside the app Personal profile share Profile page.

A community PSA is circulating to secure your username now, with early notes that the fast‑hour perk triggers once a full profile is set up with >8 spotlights Claim handle PSA.

🎵 Music tools meet labels

Licensing + tooling matter for AI musicians: Stability AI teams with Warner; Udio settles and partners with WMG. Also, a creator launches an AI band with Suno V5.

Udio settles and partners with Warner Music, keeps user tools

Udio announced a licensing partnership with Warner Music Group and says its dispute has been resolved; importantly, current user tools remain available Deal summary, with details in the company’s post Udio blog. For AI musicians, this reduces legal risk around releases and hints at more official label pipelines.

Stability AI partners with Warner Music to build licensed creator tools

Stability AI and Warner Music Group will co‑develop professional tools for artists, songwriters, and producers using "ethically trained" models, with new revenue paths and rights protections called out in the announcement Partnership post. For music teams, this points to label‑aligned workflows instead of gray‑area datasets.

Father–son AI band debuts with Suno V5 and an 8‑track EP

Creator Diesol and his son launched "Afraid2Sl33p," blending trap, metal, and anime; the first single "Come Undone" was generated with Suno V5 and arrives with an AI video ahead of a self‑titled 8‑track EP Debut thread. Visuals were built with Grok Imagine, Midjourney, and Seedream Visuals note.

🎨 Reusable styles and asset packs for visuals

A practical day for style kits and assets: MJ V7 recipes and templates, Retro Diffusion pixel models on Replicate, and Leonardo’s ‘Model Holding Product’ blueprint for quick comps.

Leonardo’s “Model Holding Product” blueprint auto-composites products into hands

Leonardo rolled out a blueprint that drops your product into a person’s hand from a single upload, producing on‑brand "model holding product" shots without a photoshoot Blueprint demo. A follow‑up post shows multiple stylized comps made from the same asset Example set.

For marketers and storefronts, this cuts comp time and helps standardize hero angles across catalogs.

Retro Diffusion pixel-art suite arrives on Replicate: sprites, tilesets, game assets

Astropulse and Nerijus’s Retro Diffusion models are now live on Replicate, bringing production‑ready pixel art, animated sprites, and tilesets to an API you can scale in pipelines Model availability. See the full collection and docs in Replicate’s pages and blog for examples and parameters Model collection Blog post.

If you’re prototyping games or motion UI, this is a fast path to cohesive retro assets without training your own model.

One image → 15 distinct videos: Grok Imagine token pack for repeatable looks

A creator shows how a single portrait spawns 15 stylistically different video shots using a compact token list (e.g., Glitchwave, Volumetric smoke, LomoChrome Metropolis), highlighting Grok Imagine’s instruction following and motion control Token thread. This builds on earlier token lexicons for Grok token lexicon and adds video‑focused direction.

Keep these tokens in a shared style sheet so editors can mix motion cues with lighting and lens references consistently.

Esoteric tarot aesthetic for MJ: sref 3770290717 with print-like linework

A new Midjourney style reference (--sref 3770290717) evokes hand‑colored engravings and 19th‑century tarot illustration, reinterpreted with psychedelic folk colors and ornate framing—handy for collectible cards and poster series Style reference.

Save this sref in your studio presets to generate cohesive decks, chapter art, or packaging sets without chasing prompt drift.

MJ V7 style recipe lands: --sref 3507057859 with chaos 9 and stylize 500

A new Midjourney V7 style recipe is making the rounds: --chaos 9 --ar 3:4 --sref 3507057859 --sw 500 --stylize 500, a combo that yields bold compositions with a controlled, modern finish Recipe params.

Save the full flag set to your prompt snippets so a team can reproduce the same look across batches.
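A snippet helper along these lines keeps the flags in one place. This is a minimal sketch. The flag values are the recipe quoted above. `V7_RECIPE` and `build_prompt` are hypothetical names for a team's own snippet library, not a Midjourney feature.

```python
"""Keep a shared Midjourney flag set reproducible (illustrative helper).

The values are the V7 recipe quoted above. Storing recipes in a dict and the
`build_prompt` helper are assumptions about a team's snippet library, not a
Midjourney feature.
"""

V7_RECIPE = {
    "chaos": "9",
    "ar": "3:4",            # aspect ratio
    "sref": "3507057859",   # style reference code
    "sw": "500",            # style weight
    "stylize": "500",
}


def build_prompt(subject: str, recipe: dict[str, str]) -> str:
    """Append the recipe's flags, in insertion order, to a subject description."""
    flags = " ".join(f"--{name} {value}" for name, value in recipe.items())
    return f"{subject.strip()} {flags}"


if __name__ == "__main__":
    print(build_prompt("portrait of a violinist", V7_RECIPE))
```

Because Python dicts keep insertion order, every teammate gets the flags in the same sequence: `portrait of a violinist --chaos 9 --ar 3:4 --sref 3507057859 --sw 500 --stylize 500`.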

Reusable “Balloon” prompt template for MJ: subject-shaped, floral-filled, pastel shots

Azed shares a plug‑and‑play Midjourney prompt that outputs transparent, subject‑shaped balloons filled with delicate florals against soft pastel backdrops—great for cohesive sets and quick product or editorial looks Prompt details.

Swap [subject], [flowers/plants], and [background color] to build a mini‑library of consistent visuals for campaigns or moodboards.

High-contrast city look: sref 2003154966 + RAW and high stylize settings

A shared sref find (--sref 2003154966) paired with --style raw, high stylize, and strong style weight produces crisp, moody city portraits and street scenes that stay consistent across prompts Style settings.

Drop this into your house presets to speed lookdev for urban campaigns and banners.

🧰 How‑tos: from Freepik pipelines to Grok tricks

Hands‑on guidance dominates: a full Freepik Spaces pipeline from stills to motion, JSON prompt structuring, and a Grok Imagine colorization trick for engravings. Excludes Nano Banana 2 specifics.

Freepik Spaces workflow: Seedream stills to Veo/Kling motion using JSON prompts

Creator @techhalla shared a practical, end‑to‑end pipeline inside Freepik Spaces: generate consistent stills with Seedream 4K and Nano Banana, then animate with Veo and Kling—keeping character and scene continuity via JSON‑structured prompts. The thread shows multi‑angle asset building, looped shots with Kling Start/End, and how the pieces cut together fast. See the pacing and look in the concept reel Workflow reel, and grab the step‑by‑step with prompt notes in the replies Step-by-step thread.

Why it matters: this is a repeatable recipe for music videos, teasers, and ad specs. If you’ve been piecing tools together, this shows what to do in which order—and why JSON prompts help keep outputs stable when you iterate.
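To see why structure helps, here is a minimal Python sketch that defines the character and scene blocks once and varies only the shot block. Every field name is hypothetical. This is not @techhalla's schema or a format that Freepik, Veo, or Kling requires. The serialized JSON would simply be pasted in as the prompt text.

```python
"""JSON-structured prompts that hold continuity fields fixed (sketch).

All field names are hypothetical. The character and scene blocks are defined
once and reused verbatim; only the shot block changes between prompts.
"""
import json

CHARACTER = {
    "name": "hero",
    "appearance": "short silver hair, green bomber jacket, red sneakers",
}
SCENE = {
    "location": "rooftop at dusk",
    "lighting": "warm key light from camera left",
}


def shot_prompt(shot: dict[str, str]) -> str:
    """Merge the fixed continuity blocks with one shot's camera and action."""
    payload = {"character": CHARACTER, "scene": SCENE, "shot": shot}
    # sort_keys gives a stable key order no matter how the dicts were built
    return json.dumps(payload, indent=2, sort_keys=True)


shots = [
    {"camera": "wide establishing shot", "action": "hero walks to the ledge"},
    {"camera": "close-up", "action": "hero looks out over the city"},
]
prompts = [shot_prompt(s) for s in shots]

if __name__ == "__main__":
    print(prompts[1])
```

Because the continuity blocks are shared objects, editing a jacket color in `CHARACTER` updates every shot at once. This is the kind of stability the thread credits to JSON prompts.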

Dreamina Multi‑Frame stitches up to 10 images with per‑transition control

Dreamina’s new Multi‑Frame mode lets you upload up to 10 stills, set transition durations per cut, and guide the whole sequence with a text prompt. It’s built for longer, controllable AI videos that keep story beats tight (no re‑rolling everything to fix one move). Quick intro here Feature overview, with a deeper tutorial covering consistent outputs and timing tips Full tutorial.

Use it for: mood reels, brand explainers, or animatics where you want shot‑to‑shot control without a full timeline editor.

Grok Imagine turns one image into 15 distinct shots with tight instruction‑following

Following up on Token pack for lighting and fashion looks, @ai_artworkgen shows a one‑image → 15‑video workflow that changes lensing, motion, and atmosphere via compact prompts. The thread includes a macro “rack focus along dreadlocks” example and production notes on upscale and audio Variations reel, Macro glide prompt, Instruction following notes.

Why it matters: this model now behaves more like a DP taking direction than a randomizer. It’s a strong option for style boards and music video inserts.

Grok Imagine tip: live‑color old engravings with a single prompt

Drop any black‑and‑white engraving and prompt "coloring the illustration from the history book"—Grok Imagine animates a live paint‑in while adding subtle motion. It’s a one‑line trick that turns public‑domain art into eye‑catching reels or title cards in seconds How‑to prompt.

Handy for educators, editors, and music visuals where you want texture plus movement without designing from scratch.

Kling 2.5 Start/End frame on OpenArt: loops, morphs, and camera beats

OpenArt now exposes Kling 2.5’s Start & End frame control for image‑to‑video. You can anchor a shot to match first and last frames (clean loops), or set two frames to drive a morph and camera pass—useful for transitions and product hero moves Feature demo. The usage flow and model picker are documented on the product page OpenArt page.

Try it on social loops, logo reveals, and motion studies that need exact in/out alignment.

Vidu adds 10‑image video creation, upscale for extensions, and RufHub search

Vidu rolled out a 10‑image creation flow with seamless transitions, plus upscaling for extended clips and a new RufHub search that spans Community, Library, and Reference pages. It’s positioned for storyboard‑to‑edit workflows with faster reuse of references and longer shots Feature reel. A second post recaps the same features with a promo and enterprise tier notes Feature cards.

Good for teams building polished lookbooks or stitching character beats without leaving the app.

Leonardo’s “Model Holding Product” blueprint drops your item into hand shots

Upload a product image and Leonardo’s new blueprint composites it into model‑in‑hand scenes—handy when you don’t have a photoshoot but need marketing‑grade hero frames. The demo shows multiple angles and poses generated from a single upload Blueprint demo.

Use this to comp packaging, CPG, or gadget visuals into lifestyle photos while you iterate on design.

Two quick MJ recipes: “Balloon” prompt and V7 chaos‑9 sref setup

If you need fast art‑direction, two lightweight Midjourney shares landed: the “Balloon” prompt pattern for transparent, flower‑filled subject balloons on pastel backdrops Prompt recipe, and a V7 settings card using --chaos 9, --ar 3:4, --sref 3507057859, --sw 500, --stylize 500 for bold, styled outputs V7 settings.

They’re simple starting points you can slot into moodboards, packaging comps, or cover art.

🧪 Vibe‑coding and agentic tools for creators

Creators ship small games and apps with Gemini 3; early Codex Max tests show hiccups; dynamic visual layouts and Perplexity docs/slides aid pre‑production. Antigravity UX friction flagged.

Gemini 3 adds dynamic visual layouts to Google Search

Google is rolling out AI‑generated, interactive layouts in Search that turn answers into mini‑tools (e.g., physics simulations), with availability noted in AI Mode for U.S. subscribers and as a Labs experiment in the Gemini app Pendulum demo, AI mode details. This matters for pre‑production and education flows where an explorable widget beats a paragraph.

Gemini 3 Pro one‑shots Portal and Frogger‑style games

Creators are showing Gemini 3 Pro building playable web games in minutes, complete with controller support and AI‑generated art/audio. See a Portal‑like game built inside AI Studio and a 20‑minute Frogger/Crossy‑style run for a sense of pace and fidelity Portal demo, Frogger build, Crossy clip.

Perplexity now builds and edits slides, sheets, and docs

Perplexity added Pro/Max features to compose and edit slides, spreadsheets, and documents directly from search, which streamlines research → deck/script workflows for small teams Feature demo. It works across all search modes, so you can keep context while drafting assets.

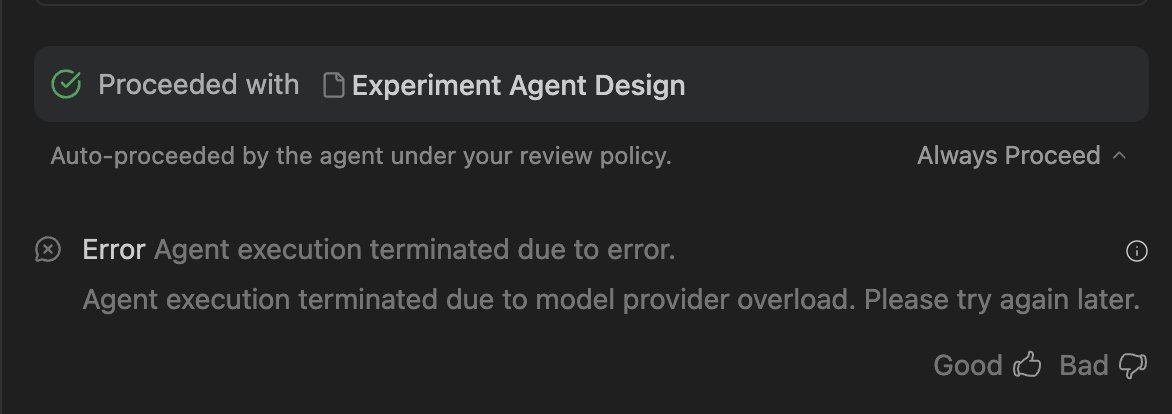

Antigravity needs a Retry button, say early users

Following up on initial demos of Google’s agentic IDE, creators are asking for a simple Retry control due to frequent overloads that force copy→revert→paste loops many times a day Retry request. This is a small UX gap with a big impact on iteration speed.

Dimension launches event‑based AI coworker for background tasks

Dimension introduced a trigger‑driven agent that runs without prompts: it can watch deployments (e.g., Vercel), compile post‑mortems on failure, or email meeting briefings before calls Product overview. For lean teams, this shifts AI from chat windows to scheduled, zero‑touch execution.
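The trigger-driven pattern can be sketched as a tiny event dispatcher. The event names, payload fields, and handlers below are hypothetical and are not Dimension's API. Real handlers would call a model or send email. These handlers return strings so the flow is easy to follow.

```python
"""Minimal trigger-driven dispatch, illustrating an event-based agent.

Event names, payload fields, and handlers are hypothetical; this is not
Dimension's API. Handlers return strings instead of calling a model or
sending email so the flow is easy to follow.
"""
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], str]
_handlers: dict[str, list[Handler]] = defaultdict(list)


def on(event_type: str) -> Callable[[Handler], Handler]:
    """Decorator that subscribes a handler to an event type."""
    def register(fn: Handler) -> Handler:
        _handlers[event_type].append(fn)
        return fn
    return register


def dispatch(event_type: str, payload: dict) -> list[str]:
    """Run every handler subscribed to the event; no user prompt involved."""
    return [fn(payload) for fn in _handlers.get(event_type, [])]


@on("deploy.failed")
def draft_postmortem(payload: dict) -> str:
    return f"Post-mortem draft for {payload['project']}: {payload['error']}"


@on("meeting.upcoming")
def send_briefing(payload: dict) -> str:
    return f"Briefing sent for '{payload['title']}'"


if __name__ == "__main__":
    # In practice a webhook (for example, from a deploy host) would call dispatch.
    print(dispatch("deploy.failed", {"project": "site", "error": "build timed out"}))
```

The design point is that work starts from the event, not from a chat message. Adding a new behavior means registering one more handler.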

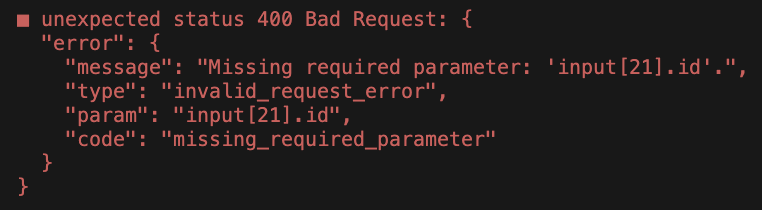

GPT‑5.1 Codex Max hits early CLI errors; fix in flight

Early users report persistent CLI session failures (input[...] id) while testing the new long‑running, agentic coding model; OpenAI’s team acknowledged the issue and is working on a fix CLI error report, Team acknowledgement. Expect some instability if you’re trialing Codex Max today.

Stitch adds one‑click export to AI Studio

Stitch now exports designs straight into AI Studio with HTML, screens, and project structure pre‑attached, cutting handoff friction for teams prototyping with Gemini 3 Feature brief. Newer files (post Nov 15) are supported natively; older projects can be duplicated to enable it.

A Gemini 3 voice app that visualizes in real time lands in minutes

Creators vibe‑coded a voice agent that surfaces relevant images as you speak, plus a separate one‑shot TTS app that runs 100% locally in‑browser via transformers.js, both built with Gemini 3 Voice app demo, Browser TTS app. This is a clear template for reactive podcasts, teaching tools, and live show assets.

🤝 Creative platforms: integrations and access

Ecosystem moves that simplify pipelines: Kling x ElevenLabs tie audio and video together; Gemini 3 Pro and Riverflow 2 become easy to run via hosted runtimes.

Gemini 3 Pro lands on Replicate with image, video and audio inputs

Replicate now hosts Google’s Gemini 3 Pro with multimodal I/O, so you can run prompts that mix images, video, and audio without wiring up Google’s SDKs. This is a fast way to prototype new assistants, analyzers, or creative tools from a single endpoint Launch clip, with model details at Gemini model card.

Kling partners with ElevenLabs so you can add voices, music and SFX to Kling visuals inside Playground

Kling and ElevenLabs announced a workflow tie‑in: generate visuals with Kling, then layer voice, music, and sound design directly in ElevenLabs’ Playground. It turns two separate steps into a single creative lane for trailers, ads, and social shorts Partnership note.

Retro Diffusion sprite and tileset models arrive on Replicate for game assets

Replicate published Retro Diffusion from RealAstropulse and NERIJS, a set of models for pixel‑art assets: animated sprites, tilesets, UIs, and more. It’s a handy hosted path for indie teams to batch‑generate consistent 8/16‑bit elements with a deployable API Model announcement, with details on the models and workflows in the write‑up Replicate blog and usage links at Models page.

Runware hosts Riverflow 2 Preview (unified text‑to‑image + editing) with 15% off until Dec 5

Riverflow 2 Preview is live first on Runware, combining text‑to‑image and image editing in one model. The team says it produces “staged photography”-like outputs and handles complex scenes more reliably; pricing is 15% off through Dec 5 for early tests Feature brief, with docs on the models catalog at Runware models.

Stability AI partners with Warner Music to co‑develop licensed, pro‑grade music tools

Stability AI and Warner Music Group will develop tools for artists, writers, and producers using “ethically trained” models, with a goal of protecting rights and opening new revenue paths. For working musicians this signals more cleared workflows and fewer licensing headaches in AI‑assisted composition Partnership brief.

OpenArt adds Kling 2.5 Start & End frame control for precise loops and morphs

OpenArt now exposes Kling 2.5’s Start & End frame control for creators, enabling clean loops, morphs, and shot‑to‑shot continuity from stills. This follows Start/End frame live on other hosts; having it in OpenArt puts the feature in more day‑to‑day creator pipelines Platform demo.

Perplexity Pro/Max can build and edit slides, sheets and docs inside search

Perplexity added a workspace layer across all search modes so Pro and Max users can create and edit slide decks, spreadsheets, and documents inline. For researchers and content teams this removes the copy‑paste hop into office tools, speeding briefs, outlines, and data tables Workspace demo.

Runware adds Bria background remover and 4× upscaler as API‑ready tools

Runware onboarded two Bria models—instant background removal and up to 4× super‑resolution—so product photo teams can chain cutouts and upscale in the same environment as their other generators. That reduces export/import hops during catalog or ad creative passes Tool preview.

🏗️ Big checks and demand signals

Infra and revenue‑side moves matter for creative tooling: Luma’s $900M + 2GW compute plan and ComfyUI’s 130 enterprise leads in 2 weeks. Mostly funding and pipeline signals today.

Luma raises $900M and teams with Humain on 2GW “Project Halo” compute

Luma announced a $900M Series C and a partnership with Humain to build a 2GW compute supercluster called Project Halo, targeting multimodal AGI research and deployment from 2026–2029 Funding announcement. The company frames “reality as the dataset,” citing its Ray3 reasoning video model and growing enterprise traction as drivers for scale Luma blog post.

This is a massive capacity signal for AI video and 3D creators. More compute usually means faster model iteration and broader access tiers.

Following up on Taiwan datacenter where a $500M, ~7,000‑GPU build was teased, Luma’s plan dwarfs typical single‑site expansions and suggests a coming wave of higher‑fidelity, longer‑form generation available to studios and tool vendors alike Blog details.

ComfyUI reports 130 enterprise inbounds in 2 weeks, avg team size 250

ComfyUI says it quietly added an enterprise form to its site and received 130 inbound requests in two weeks, with an average team size of 250, despite “zero announcements” or marketing Inbound claim. For creative teams, that’s a signal that node‑based gen pipelines are moving from solo tinkering into department‑level pilots.

Stability AI partners with Warner Music to co-develop pro music tools

Stability AI and Warner Music Group announced a partnership to build professional‑grade tools for artists, songwriters, and producers using “ethically trained” models, with an emphasis on creator rights and new revenue paths Partnership post. For musicians building in AI, this is a demand signal from a major label for sanctioned workflows that can ship to catalogs and sync.

Udio inks licensing deal with Warner Music, settles lawsuit, keeps tools live

Udio said it has partnered with Warner Music Group on a licensed AI music platform while settling litigation, and it will maintain current user tools during the transition Deal summary Udio blog. This is a clear commercialization path for AI music that reduces legal risk for creators and brands.

Kling partners with ElevenLabs to fuse visuals and pro audio in one workflow

Kling AI and ElevenLabs announced an integration that lets creatives generate video in Kling and layer voices, music, and sound design directly in ElevenLabs’ Playground—positioning a combined stack for multimodal storytelling and ads Partnership note. For small teams, this reduces tool‑swap time and makes client‑ready outputs more feasible on short timelines.

💸 Deals, credits, and creator events

Black Friday deals for content ops plus community meetups: Thunderbit discount, Pictory promos, a FLUX hackathon, and Genspark Live. Excludes the Higgsfield sale (covered as the feature).

Pictory doubles down: 50% off annual, +2,400 credits, and a $600 Creator Pack giveaway

Pictory added a $600 Creator Pack giveaway on top of its BFCM offer (50% off annual plans plus 2,400 bonus AI credits), following up on Pictory BFCM. Deal details and pricing are live today for creators repurposing blogs, webinars, and decks into video Offer details, Pricing page. The giveaway post spells out the cross‑platform share + tag entry rules Giveaway rules.

Thunderbit Pro is 50% off for Black Friday; 2‑click deep scraping with Notion/Sheets export

Thunderbit kicked off a Black Friday deal: 50% off your first billing period for Pro Pricing promo. The tool is relevant to content ops because it scrapes complex sites in two clicks, traverses pagination and subpages, and auto‑builds clean tables you can export to Notion, Airtable, or Google Sheets Feature demo, Notion export.

Midjourney adds public profiles; fill out 8+ spotlights in 24h to get 5 free fast hours

Midjourney launched user profiles with usernames, social links, banners, follows, and spotlights. As an incentive, anyone who completes a profile with more than eight spotlights in the next 24 hours gets 5 free fast hours—useful for sprinting through time‑sensitive client comps Profiles rollout. Creators are already sharing pages and urging others to secure handles Claim free hours.

Vidu adds 10‑image video chains and upscale, dangles 500 credits in a 24‑hour promo

Vidu rolled out 10‑image video creation with seamless transitions, extension upscaling, and a new RufHub search across Community/Library/Reference pages Feature thread. To drive trials, it’s running a 24‑hour “follow + RT + comment” campaign awarding 500 credits to participants Campaign post.

ElevenLabs opens interest list for a London Summit after debut event

Following its first Summit, ElevenLabs is taking sign‑ups for a London edition—relevant for filmmakers, podcasters, and game teams leaning into omnimodal voice, music, and video workflows Summit highlights, London signup.

Genspark Live is tomorrow at 3:30 PM PST with an “AI workspace” preview

Genspark Live is set for Nov 20 at 3:30 PM PST, teasing an “AI workspace” reveal—worth a watch if you’re consolidating research, writing, and asset pipelines for creative teams Event teaser.

Riverflow 2 Preview lands on Runware with 15% off until Dec 5

Runware is first to host Riverflow 2 Preview, a unified text‑to‑image plus editing model tuned for staged, product‑accurate photography. There’s a 15% launch discount running through December 5, which makes it a timely pickup for holiday campaign pipelines Launch post, Models page.

🧩 Research to watch for artists and devs

Method papers today focus on style control, faster multimodal processing, grounded 3D understanding, and material realism; active discussion threads linked. Lean but relevant for pipelines.

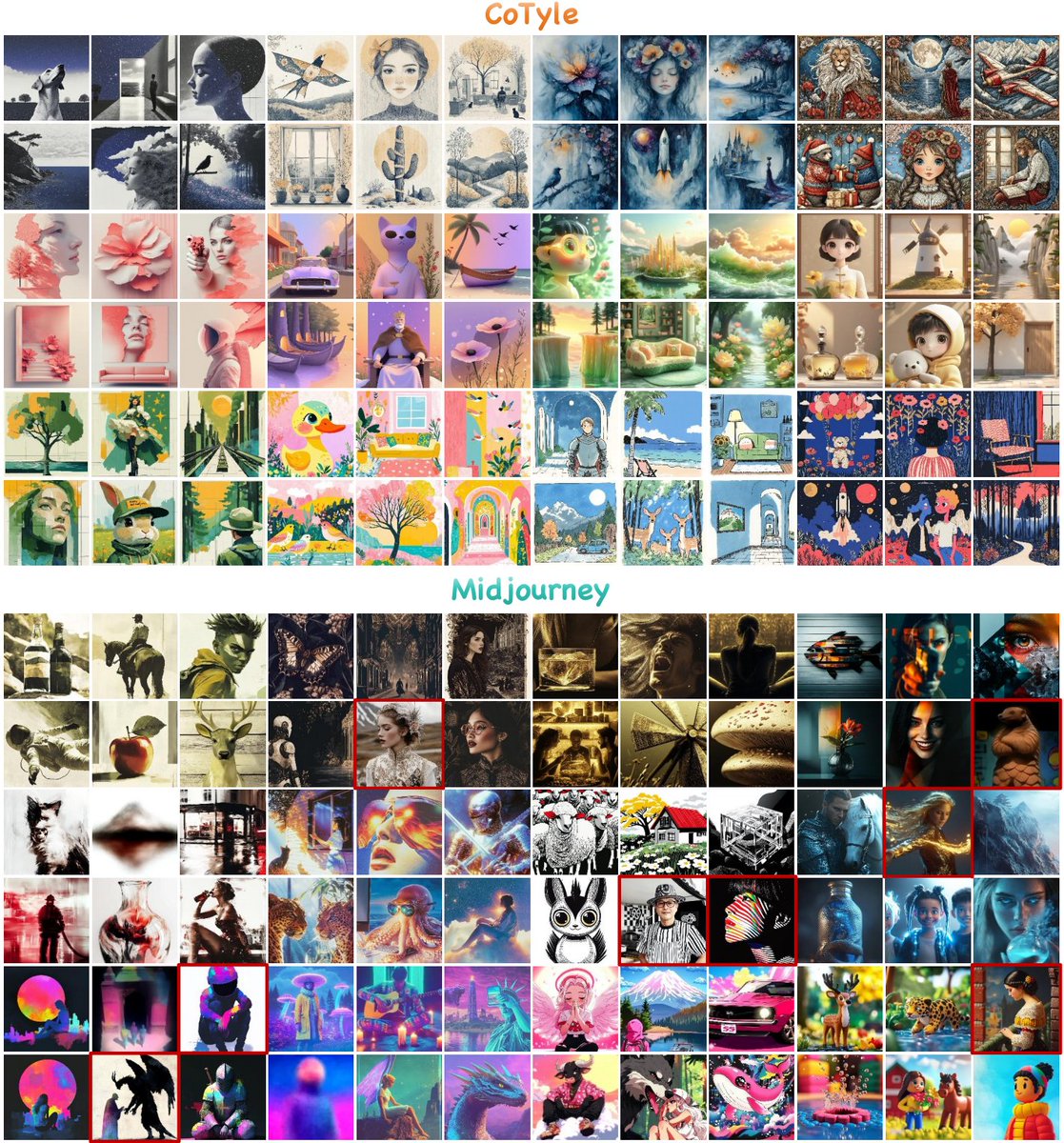

CoTyle: one numeric code now controls image style

Kuaishou’s CoTyle proposes code‑to‑style image generation: a single numeric code maps to a learned style embedding that drives a diffusion model, avoiding long prompts or reference boards Paper thread, with a broader explainer on why it matters for art direction and brand kits Explainer thread. The paper details a discrete style codebook and an autoregressive generator for synthesizing new, reproducible styles, useful for consistent lookbooks and multi‑asset campaigns Paper page.
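As a rough intuition for why a number can stand in for a style, the toy below maps a code deterministically to codebook entries and pools them into one vector. It is not CoTyle's implementation. A seeded random generator stands in for the paper's autoregressive generator. The codebook is random rather than learned, and sizes like `SEQ_LEN` are invented for illustration.

```python
"""Toy code-to-style mapping (NOT CoTyle's implementation).

A seeded RNG stands in for the paper's autoregressive generator, and the
codebook holds random numbers rather than learned style features.
"""
import random

CODEBOOK_SIZE = 64  # hypothetical sizes, chosen for illustration
EMBED_DIM = 8
SEQ_LEN = 4

_init = random.Random(0)
CODEBOOK = [
    [_init.uniform(-1.0, 1.0) for _ in range(EMBED_DIM)]
    for _ in range(CODEBOOK_SIZE)
]


def style_tokens(code: int) -> list[int]:
    """Map a style code to a reproducible sequence of codebook indices.

    A real autoregressive generator would condition each index on the previous
    ones; here independent draws from an RNG seeded by the code play that role.
    """
    gen = random.Random(code)
    return [gen.randrange(CODEBOOK_SIZE) for _ in range(SEQ_LEN)]


def style_embedding(code: int) -> list[float]:
    """Mean-pool the selected codebook vectors into one conditioning vector."""
    vectors = [CODEBOOK[i] for i in style_tokens(code)]
    return [sum(column) / len(vectors) for column in zip(*vectors)]
```

The same code always yields the same indices, so reusing a code reproduces the same conditioning vector. That reproducibility is what makes codes attractive for brand kits.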

OmniZip prunes tokens via audio to speed multimodal LLMs

OmniZip introduces audio‑guided dynamic token compression to accelerate omnimodal LLMs, selectively dropping redundant audio/video tokens to cut latency and cost on long clips Paper thread. For creative assistants that analyze footage or podcasts, this points to faster scrubbing and cheaper batch runs without retraining the backbone Paper page.
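Here is a toy version of audio-guided pruning: split a clip into time windows, and let windows with more salient audio keep more of their video tokens. This illustration rests on stated assumptions and is not OmniZip's algorithm. The per-window salience scores, the per-token importance scores, and the `min_keep` floor are all hypothetical.

```python
"""Toy audio-guided token pruning, loosely inspired by the OmniZip idea.

Assumptions (not the paper's algorithm): the clip is split into time windows,
each window has an audio salience score in [0, 1], and each video token has
its own importance score. Windows with more salient audio keep more tokens.
"""
import math


def prune_window(
    token_scores: list[float], audio_salience: float, min_keep: float = 0.25
) -> list[int]:
    """Return indices of the video tokens to keep for one time window."""
    if not 0.0 <= audio_salience <= 1.0:
        raise ValueError("audio_salience must be in [0, 1]")
    keep_ratio = min_keep + (1.0 - min_keep) * audio_salience
    k = max(1, math.ceil(keep_ratio * len(token_scores)))
    ranked = sorted(range(len(token_scores)), key=lambda i: token_scores[i], reverse=True)
    return sorted(ranked[:k])  # restore original token order


def prune_clip(windows: list[tuple[list[float], float]]) -> list[list[int]]:
    """Apply window-level pruning across a whole clip."""
    return [prune_window(scores, salience) for scores, salience in windows]
```

With `min_keep=0.25`, a silent window of four tokens keeps only its single most important token. A window with fully salient audio keeps all four.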

Think‑at‑Hard targets ‘hard’ tokens for better reasoning at lower cost

Think‑at‑Hard selectively adds latent iterations only where the model is likely wrong, reporting 8.1–11.3% accuracy gains while exempting ~94% of tokens from extra passes (via a neural decider and duo‑causal attention) Paper page. It’s a pragmatic complement to thinking‑aware editing noted in MMaDA‑Parallel (hands‑on gains on ParaBench), and could shrink "thinking token" budgets for coding and planning agents used in creative tooling Paper discussion.
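The selective-compute idea can be illustrated with a simple threshold in place of the learned decider. In this hypothetical sketch, tokens at or above a confidence threshold skip extra work. The rest get up to `max_iters` passes of a placeholder `refine` function. The numbers are illustrative, not results from the paper.

```python
"""Toy version of selective extra iterations for 'hard' tokens.

Assumptions: a confidence threshold stands in for Think-at-Hard's learned
neural decider, and `refine` is a placeholder for one extra latent pass.
"""
from typing import Callable


def selective_refine(
    confidences: list[float],
    refine: Callable[[float], float],
    threshold: float = 0.6,
    max_iters: int = 2,
) -> tuple[list[float], float]:
    """Refine only low-confidence tokens; return new scores and exempt fraction."""
    out = []
    exempt = 0
    for c in confidences:
        if c >= threshold:
            exempt += 1  # easy token: no extra passes
            out.append(c)
            continue
        for _ in range(max_iters):  # hard token: up to max_iters extra passes
            c = refine(c)
            if c >= threshold:
                break
        out.append(c)
    frac = exempt / len(confidences) if confidences else 1.0
    return out, frac
```

The exempt fraction is the quantity the paper reports (about 94% of tokens). In this toy it simply counts tokens that never entered the refinement loop.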

Error‑driven 3D scene edits improve LLM grounding and spatial reasoning

DEER‑3D frames grounding as a loop: use the model’s own errors to propose scene edits and re‑evaluate, tightening alignment between text and 3D layouts Paper page. This matters for layout QA, blocking, and scene description tasks where subtle object relations break downstream generation or camera planning Paper page.

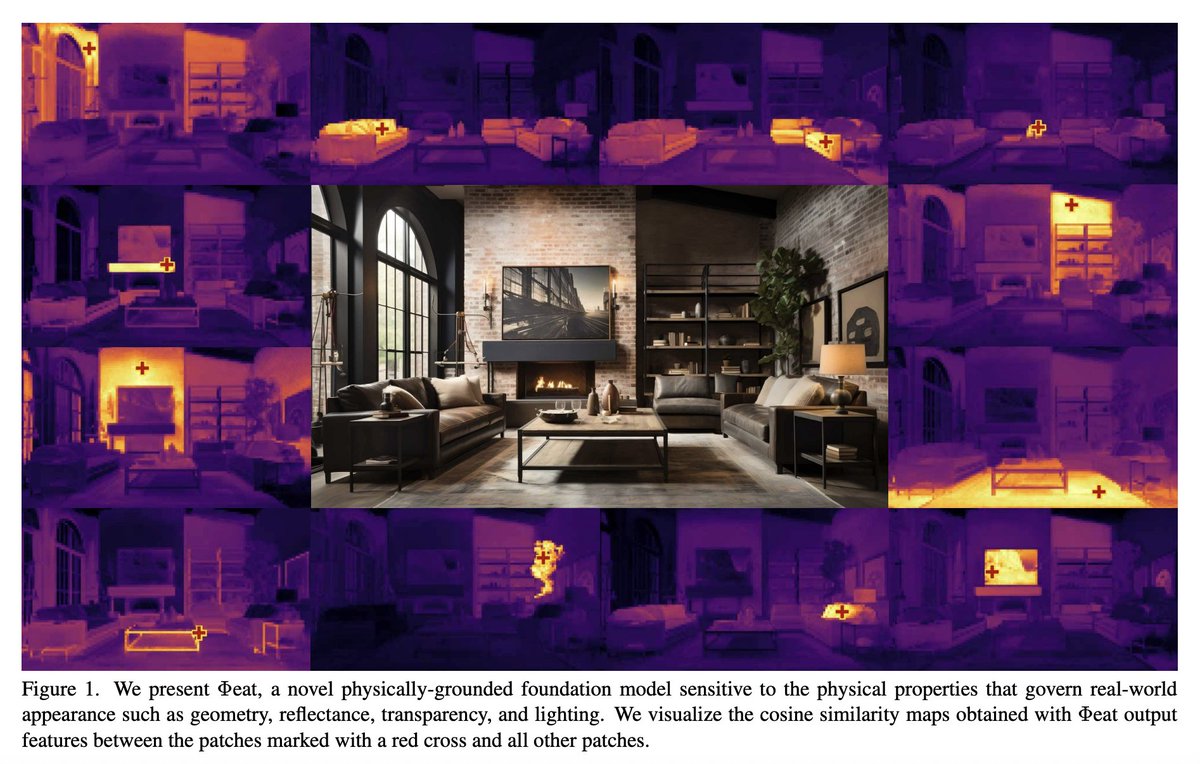

Φeat learns physically grounded material features for truer renders

Φeat proposes a physically grounded feature representation that better captures reflectance and mesostructure cues, improving how models perceive and edit materials Paper page. For product shots and stylized photorealism, richer material priors help with consistent highlights, roughness, and fabric/skin micro‑detail without heavy prompt gymnastics Paper page.

Proactive hearing assistants isolate your conversation in noisy spaces

A dual‑model, streaming “hearing assistant” isolates the egocentric conversation in crowded scenes, dynamically focusing on your partner’s speech Video demo. Field crews and vloggers get a hint of what on‑device audio agents might do for cleaner location sound and live transcription Paper page.

🧠 Culture wars and creator mindset

Multiple viral takes on AI haters vs makers provide sentiment signals for how work is judged and promoted. Pure discourse—no tool updates here.

Creators coin ‘AI Hater Syndrome’ as backlash pattern gets a name

A new label—“AI Hater Syndrome”—is circulating to describe people who see AI in everything and assume creators can’t think without it coinage post, following up on AI slop debate that framed anti‑AI pile‑ons as status moves. Naming the behavior helps teams filter bad‑faith critiques fast and keep focus on shipping work.

‘AI art is a mirror’ take skewers gatekeeping and mediocrity claims

A blunt post argues AI exposes creative taste rather than replaces it—“a mirror” revealing mediocrity—pushing back on purity tests about tools mirror take. For working artists, the point is simple: show vision in the output; the method won’t save weak ideas.

Callout: Herd mentality kills art; creativity needs independent judgment

One thread frames uncritical groupthink as the fastest way to become uncreative, urging creators to develop taste and take contrarian bets when needed creativity rant. It’s a reminder to teams: prompts and tools help, but direction comes from you.

PSA: Doomscrolling AI hate is a mental‑health red flag—log off and make

A short PSA calls obsessively seeking content you dislike a warning sign and suggests stepping away instead of feeding the loop mental health PSA. Protecting attention is part of the job. Make space to create.

Flip the script: ‘AI haters are a blessing’ means more room for makers

Another take reframes detractors as positive selection pressure: fewer competitors for those shipping with AI opportunity take. It’s a nudge to stay focused on output and clients, not arguments.

Punchy Q&A contrasts artists with trolls on behavior and basic literacy

A quip compares how artists vs trolls act online—calm craft versus insults and errors—to argue that engagement quality signals credibility contrast quip. The takeaway: don’t feed low‑signal replies; keep receipts in your portfolio.