fal ships 9 Qwen Edit LoRAs at $0.035 per MP – Replicate supports 30+ adapters

Executive Summary

fal pushed a practical gallery of nine Qwen Image Edit Plus LoRAs priced at $0.035 per megapixel, and Replicate answered with a LoRA Explorer that runs 30+ adapters via Hugging Face IDs. If you handle product shots or social creative, that’s a real workflow unlock: common fixes become API‑callable presets you can scale across SKUs and portraits without hand‑masking every frame.

The fal set spans Remove Lighting, Integrate Product, Group Photo, Next Scene, and more, with before/after demos that keep perspective and shadows believable. Replicate’s “Lightning” path speeds multi‑image, style‑preserving passes, and you can drop in your own lora_weights to keep art direction consistent while iterating angles or backgrounds. Translation: fewer retakes, tighter control, and edits you can hand to a pipeline instead of a person (your retoucher can finally skip the 3 a.m. Slack).

If you’re leaning into video, note the adjacent trend line: ComfyUI’s new one‑node Wan 2.2 character swap hints at the same goal on motion—faster reshoots with less graph acrobatics.

Feature Spotlight

Lock the cut: Kling 2.5 Start & End Frames

Kling 2.5 Turbo’s Start & End Frames lets creators lock bookend frames to stitch clips with seamless continuity—cleaner scene-to-scene flow without complex setups.

Kling 2.5 Turbo adds start/end frame control for fluid transitions and shot consistency; multiple posts show frame-anchored edits useful for filmmakers and motion designers.

🎬 Lock the cut: Kling 2.5 Start & End Frames

Kling 2.5 Turbo adds Start & End Frames for frame-locked cuts and continuity

Kling switched on Start & End Frames in 2.5 Turbo, letting you lock bookend frames so multi-shot clips line up for precise transitions and recurring beats. The official reel runs ~28.7 seconds and showcases frame-anchored motion that holds continuity across cuts.

This lands right after stronger camera-move control tests, following up on those camera moves with a tool aimed at matching intros/outros and stitching sequences without post cheats. For editors and motion designers, it means fewer re-gens and cleaner previz. For ads, it standardizes bumpers and logo resolves. Kling points creators to its Discord server for community recipes and prompt structures you can test today.

Creators stress‑test Kling’s bookend control; early reels call it “mind‑blowing”

Early users are validating Start & End Frames with style-consistent transformations and cut matches. A Japanese demo aligns a fantasy transformation on the same bookend frames, while maker recaps label the feature “mind-blowing,” and partners push fast action spots under the Turbo Pro banner (Japan demo, Creator reaction).

- Anchor the start frame with a clean, well‑lit plate; keep camera orientation consistent to reduce drift.

- When chaining clips, export the exact last frame as the next prompt’s start to tighten continuity.

- Test 2–3 second overlaps before extending; scale once motion and edges stay stable.
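The chaining tip above can be sketched as a small planner. This is only an illustration of the bookkeeping, not Kling's API: the `Clip` type, the `plan_chain` function, and the frame file names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Clip:
    """One generation request bounded by a start and an end frame."""
    start_frame: str
    end_frame: str


def plan_chain(keyframes: list[str]) -> list[Clip]:
    """Turn an ordered list of keyframes into back-to-back clips.

    Each clip's start frame is the previous clip's end frame, so
    adjacent clips share an identical bookend image.
    """
    if len(keyframes) < 2:
        raise ValueError("need at least two keyframes to form a clip")
    return [Clip(a, b) for a, b in zip(keyframes, keyframes[1:])]


# Hypothetical file names for an intro, a mid beat, and a logo resolve.
clips = plan_chain(["intro.png", "mid.png", "logo.png"])
for clip in clips:
    print(f"{clip.start_frame} -> {clip.end_frame}")
```

Keeping the shared frame as a single file reference, rather than re-exporting it per clip, is what makes the cut line up exactly.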

🗣️ Iconic voices you can license (ElevenLabs)

ElevenLabs rolls out a curated Iconic Marketplace (Sir Michael Caine), and Matthew McConaughey expands his newsletter into Spanish audio. Fresh clips and links emphasize creator access.

Iconic Marketplace lists 25+ voices; licensing runs through rights holders

ElevenLabs says the Iconic Marketplace now features 25+ legendary voices, with usage approved and managed directly by each rights holder, following up on the launch. The company also shared a direct “start creating” entry point and clarified that licensing requests flow through a two-sided platform. See the policy and roster in the operations note, and explore via the Marketplace page.

For practical use, this matters because approvals and routing are centralized; you can scope narration or storytelling projects without bespoke outreach, and you get a verified voice provenance path via the rights holder.

McConaughey’s newsletter adds Spanish audio via ElevenLabs

Matthew McConaughey moved from investor to customer: his ‘Lyrics of Livin’’ newsletter is adding a Spanish audio edition powered by ElevenLabs, making his own voice available to non-English listeners. Details and background are in the company’s customer update, with more in the ElevenLabs blog.

Why it’s useful: creators can point to a marquee example of multilingual voice distribution as a reference for their own localized shows and narrated projects.

Community flags growing roster beyond headline names

Creators are noting the Iconic Marketplace already includes a broader celebrity list beyond Michael Caine and McConaughey, signaling a growing pool for narration and story work. That matches ElevenLabs’ own mention of “over 25 others” on the roster, per the celebrity roster note and operations note.

🎨 Precision edits with Qwen LoRA on fal

fal publishes a gallery of 9 specialized Qwen Image Edit Plus LoRAs—camera control, product integration, portrait expansion, and more—with before/after demos; Replicate ships a LoRA Explorer. Great for designers.

fal ships 9 Qwen Edit LoRA tools with $0.035/MP gallery

fal published nine Qwen Image Edit Plus LoRA recipes (Remove Lighting, Next Scene, Remove Element, Group Photo, Integrate Product, Shirt Design, Face to Full Portrait, Add Background, and Multiple Angles), each with before/after demos and one-click runs at $0.035 per megapixel; see the Gallery overview and the fal gallery page. The examples cover practical fixes like flattening harsh portrait light (Remove lighting page) and product placement with matched perspective and shadows for e-com sets (Integrate product page).

For designers and social teams, this bundles frequent edits into reproducible presets without hopping tools.
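To budget a batch at the quoted rate, a back-of-envelope estimator helps. This sketch assumes billing scales linearly with megapixels, that one megapixel is 1,000,000 pixels, and that there is no minimum charge or rounding; none of those billing details are confirmed here, and `edit_cost_usd` is a hypothetical helper.

```python
PRICE_PER_MEGAPIXEL_USD = 0.035  # rate quoted for the gallery


def edit_cost_usd(width_px: int, height_px: int, images: int = 1) -> float:
    """Estimated cost, assuming linear per-megapixel billing (an assumption)."""
    if width_px <= 0 or height_px <= 0 or images < 0:
        raise ValueError("dimensions must be positive and images non-negative")
    megapixels = (width_px * height_px) / 1_000_000
    return megapixels * PRICE_PER_MEGAPIXEL_USD * images


# A single 1024x1024 edit, then a 500-SKU batch at 2048x2048.
print(f"single edit: ${edit_cost_usd(1024, 1024):.4f}")
print(f"500-image batch: ${edit_cost_usd(2048, 2048, images=500):.2f}")
```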

Replicate’s Qwen LoRA Explorer runs any Hugging Face adapter

Replicate launched a Qwen Image Edit Plus LoRA Explorer that accepts any Hugging Face adapter via lora_weights slugs, with a fast “Lightning” path for multi-image, style-preserving edits (Explorer release). A curated list of 30+ Hugging Face adapters helps teams start quickly and standardize workflows across SKUs and portraits. Following up on the LoRA demo of Qwen-Edit-2509’s live Space showcase, this puts those styles behind a stable UI and API.

The point is: you can swap, upscale, and restyle with your own LoRAs, then wire it into pipelines.
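As a rough sketch of wiring this into a pipeline, the helper below assembles an input payload and sanity-checks the adapter slug before anything is sent. Only `lora_weights` is named above; the `prompt` and `image` field names, the example URL, and the adapter ID are assumptions for illustration, and the actual API call is deliberately left out.

```python
import re

# Hugging Face repo IDs look like "owner/name"; this is a loose format check.
HF_REPO_ID = re.compile(r"^[\w.-]+/[\w.-]+$")


def build_edit_input(prompt: str, image_url: str, lora_repo_id: str) -> dict:
    """Assemble an input payload for a LoRA-backed edit.

    `prompt` and `image` are assumed field names; check the model's
    published input schema before relying on them.
    """
    if not HF_REPO_ID.match(lora_repo_id):
        raise ValueError(f"not an owner/name repo ID: {lora_repo_id!r}")
    return {
        "prompt": prompt,
        "image": image_url,
        "lora_weights": lora_repo_id,
    }


payload = build_edit_input(
    prompt="place the bottle on a marble counter",
    image_url="https://example.com/bottle.png",  # placeholder URL
    lora_repo_id="your-org/your-style-lora",     # hypothetical adapter
)
print(payload["lora_weights"])
```

Validating the slug locally keeps a typo from costing a failed remote run when you loop over many SKUs.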

🎵 AI cover channels: revenue, rules, growth

A creator breaks down how an AI music cover channel hit monetization in 10 days, CTR tactics (thumbnails), Content ID revenue splits, and daily earnings. Actionable for musicians and editors.

AI cover channel monetizes in 10 days; ~€25–30/day baseline

A creator detailed how an AI music cover channel hit YouTube monetization in 10 days, then stabilized at roughly €25–30 per day from the back catalog growth thread. They chose the 500 subscribers + 3,000 watch hours route (vs 3M Shorts views), leaned on strong thumbnails that peaked above 15% CTR, and confirmed that Content ID typically splits revenue with rights holders at about 50% for covers.

The visual hook mattered: thumbnails and visuals were built with Nano Banana, and the creator also shared a quick-start for generating with it in X replies (Nano Banana guide). For content direction, they showcased a Motown-style YouTube cover as an example of the sound and aesthetic that clicked with viewers.

- Test long‑form uploads to hit 3,000 watch hours faster; keep CTR high with clear thumbnails.

- Expect Content ID to claim and split; plan revenue per video accordingly.

- Batch thumbnail concepts with Nano Banana to iterate style quickly Nano Banana guide.
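For planning revenue per video, the figures above reduce to simple arithmetic. The thread does not say whether the €25–30/day figure is before or after the Content ID split, so this sketch keeps the two calculations separate; the function names are hypothetical.

```python
def creator_share(gross_eur: float, rights_holder_share: float = 0.5) -> float:
    """Creator's portion after a Content ID split (default: the ~50% cited)."""
    if not 0.0 <= rights_holder_share <= 1.0:
        raise ValueError("share must be between 0 and 1")
    return gross_eur * (1.0 - rights_holder_share)


def monthly_range(daily_low: float, daily_high: float, days: int = 30) -> tuple[float, float]:
    """Scale a daily earnings range to a month of the given length."""
    return daily_low * days, daily_high * days


low, high = monthly_range(25, 30)
print(f"monthly baseline: EUR {low:.0f} to {high:.0f}")
print(f"creator share of a EUR 60 gross day: EUR {creator_share(60):.2f}")
```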

🧍‍♂️ Keep the character: Hedra + Recast

Hedra teases “perfect” character consistency across infinite scenes, while creators test Higgsfield’s Recast Studio to drop themselves into film shots—fun, early-stage identity pipelines.

Hedra unveils “Character Consistency” with 12‑hour 1,000‑credit promo

Hedra is pitching a bold promise: one character, infinite scenes, with identity that stays locked across wildly different settings. The company is running a 12-hour push offering 1,000 free credits if you follow, RT, and reply with the phrase “Hedra Character Consistency” (launch demo), following up on Hedra Batches (eight-at-once generations).

For character‑driven shorts and branded story worlds, this matters. Stable identity through scene changes cuts re‑design cycles and makes episodic looks viable without retraining.

Higgsfield Recast Studio tested by creators: fun, but not pro‑ready yet

Creators tried Higgsfield’s in-app Recast Studio to drop themselves into film shots; the verdict in the creator test is that it’s entertaining and usable for playful swaps, but not ready for professional pipelines yet. A follow-up view from the same tester calls it “fine… for fun only,” signaling latency and compositing limits for client work today.

So what? It’s a low‑friction way to pre‑viz gags and social posts, but teams should keep it in the sandbox while waiting on sharper edge handling, motion matching, and color science.

🧵 Generate on X: Nano Banana tricks

Higgsfield’s Nano Banana runs directly inside X replies; creators share the exact syntax, prompt ideas, and bot return images—including Turkish prompt chains. Handy for quick ideation.

In‑reply edit works: “remove all signs” street test returns a clean pass

A creator asked Nano Banana, directly in an X reply, to delete all signage from a busy street photo, and the bot returned an edited scene with objects removed while preserving look and depth (Edit instruction, Exact prompt). The delivered image confirms end-to-end in-thread editing without leaving X (Bot result).

X‑native Nano Banana reply syntax confirmed with multilingual examples

At least three bot replies with generated images landed today, confirming the “@higgsfield_ai + #nanobanana + your prompt” format works right inside X replies, following up on X-native gen. Clear how-tos in Turkish and English walk through the exact wording and flow (Syntax in Turkish, How-to steps).
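If you batch prompt ideas before posting, a tiny formatter keeps the reply syntax consistent. The token order and single-space separation below are read off the “@higgsfield_ai + #nanobanana + your prompt” pattern and are an assumption about what the bot accepts; `nano_banana_reply` is a hypothetical helper.

```python
def nano_banana_reply(prompt: str) -> str:
    """Format an X reply as: mention, hashtag, then the prompt text."""
    cleaned = " ".join(prompt.split())  # collapse stray whitespace and newlines
    if not cleaned:
        raise ValueError("prompt must not be empty")
    return f"@higgsfield_ai #nanobanana {cleaned}"


print(nano_banana_reply("remove all signs"))
```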

Nano Banana 2 chatter grows; creators expect near‑term release

Creators say samples labeled as “Nano Banana 2” look notably strong and speculate an official drop could arrive within a few days, urging others to watch for an announcement and prep prompts for X‑native testing Imminent rumor.

Prompt chain example: ‘Kebapçı Apo’ on Mars with astronaut and rocket

Under a seed post, a Turkish prompt chain asked for a grillman in a white tank, a “KEBAPÇI APO” sign with an astronaut hanging from it, Mars terrain, and a scrappy rocket labeled “01” Seed post and Turkish prompt. The @higgsfield_ai bot replied in‑thread with a cohesive composite matching the brief Bot result.

🧪 Google watch: riftrunner, Gemini Live, human‑like vision

Gemini 3 (“riftrunner”) appears on LM Arena; Gemini Live gets accents and a more natural voice presence; DeepMind shares human‑aligned visual grouping via odd‑one‑out tests. Signals for future creative tools.

‘Riftrunner’ shows up on LM Arena, pointing to a Gemini 3 Pro RC

LM Arena briefly listed a new model called “riftrunner,” which creators believe is a Gemini 3.0 Pro release candidate, strengthening signs that a wider rollout is near Arena screenshot. Following up on image preview, which showed a 200 on a private Gemini 3 image endpoint, today’s sighting adds a second concrete breadcrumb that matters for teams prepping style tests and evals. Speculation threads are already calling for Google DeepMind to drop it Speculation post.

If this is the Pro-tier model, expect sharper visual reasoning and prompt adherence—plan a quick A/B harness against your current MJ/Grok/Kling recipes and keep prompts, seeds, and eval images ready to re-run the moment access opens.
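One way to keep that A/B harness ready is a run manifest that crosses models, prompts, and seeds, so every model sees identical inputs. This is a minimal sketch; the model labels and prompts are placeholders, and actually dispatching the runs is out of scope.

```python
from itertools import product


def build_eval_runs(models: list[str], prompts: list[str], seeds: list[int]) -> list[dict]:
    """Cross every model with every prompt and seed for a like-for-like comparison."""
    return [
        {"model": model, "prompt": prompt, "seed": seed}
        for model, prompt, seed in product(models, prompts, seeds)
    ]


runs = build_eval_runs(
    models=["current-recipe", "new-model"],  # placeholder labels
    prompts=["hero portrait, rim light", "product on marble, top-down"],
    seeds=[1, 2],
)
print(len(runs), "runs queued")
```

Fixing the seed list up front means a later re-run against a new model differs only in the `model` field.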

Gemini Live update adds accents and a more natural, expressive voice

Google’s Gemini Live got a speech update: regional accents, more expressive delivery, and a noticeably more natural presence in back-and-forths Feature demo. For voice-over, direction, and live ideation, this reduces the robotic feel and makes it easier to hold a scripted tone, which is exactly what small creative teams needed for quick reads and scratch tracks.

If you rely on live reads for timing or animatics, schedule a pass to see whether this replaces your temp VO pass on short clips. Note latency and whether it sticks to your marked beats.

DeepMind aligns vision models to human grouping with AligNet (Nature)

DeepMind reports that aligning vision models via odd‑one‑out tests, the THINGS dataset, a teacher model, and a large synthetic set (AligNet) yields human‑like grouping and better downstream understanding, reasoning, and few‑shot performance Paper summary. For creatives, this points to future models that “see” concepts beyond texture/style, which should help with consistent character/object identity and less brittle edits.

If you’ve struggled with texture bias or mismatched props across shots, keep an eye on models citing AligNet‑style alignment; they’re the ones likely to keep identities straight when you change angles, lenses, or lighting.
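For intuition about the odd-one-out task itself, here is a toy version: given three items, the odd one is whichever is left out of the most similar pair. This is not the AligNet training method, and the three-dimensional embeddings are invented purely for illustration.

```python
import math
from itertools import combinations


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def odd_one_out(embeddings: dict[str, list[float]]) -> str:
    """Return the item excluded from the most similar pair of a triplet."""
    if len(embeddings) != 3:
        raise ValueError("odd-one-out is defined here for exactly three items")
    best_pair = max(
        combinations(embeddings, 2),
        key=lambda pair: cosine(embeddings[pair[0]], embeddings[pair[1]]),
    )
    (odd,) = set(embeddings) - set(best_pair)
    return odd


# Made-up vectors: two animals close together, one vehicle far away.
toy = {
    "cat": [0.9, 0.1, 0.0],
    "dog": [0.8, 0.2, 0.1],
    "car": [0.1, 0.1, 0.9],
}
print(odd_one_out(toy))
```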

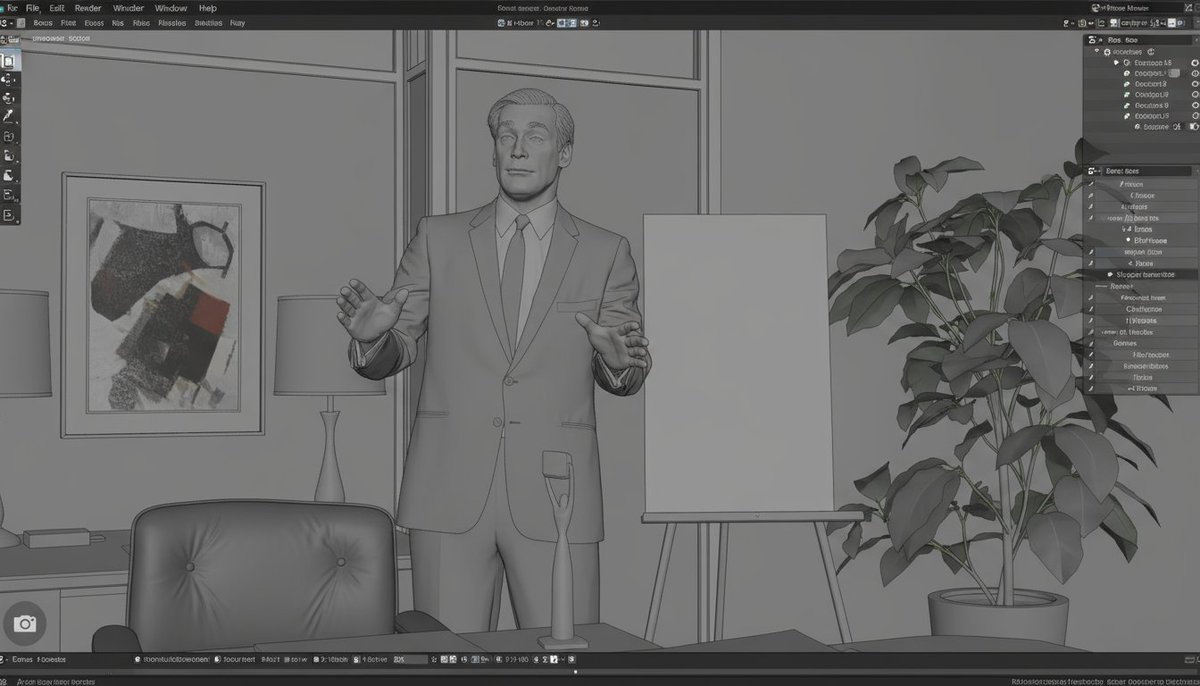

🧩 One‑node swaps: WAN on Comfy Cloud

ComfyUI rolls out a Wan 2.2 Animate → Character Replacement workflow as a single node, plus a beat-synced “EAT IT” experiment and copy-pasteable cloud workflows. Useful for quick reshoots.

ComfyUI ships Wan 2.2 Animate → Character Replacement as a one‑node Comfy Cloud workflow

Character swapping now runs in seconds from a single node on Comfy Cloud, cutting complex graph setup to near-zero and making quick reshoots practical for indie teams. Following WAN 2.2’s camera-control gains, ComfyUI’s Workflow demo shows auto-replacement by picking a target character and running the flow, with a drag-in workflow file available in the cloud GitHub repo.

Copy‑pasteable Comfy Cloud Workflows go live: drag files in, run in the browser

ComfyUI published a cloud workflows collection you can drag directly into Comfy Cloud, turning shared .json graphs into runnable jobs without local setup. That is useful for passing exact pipelines across teams and classes (Cloud workflow note, GitHub workflows).

“EAT IT” shows beat‑synced sequence automation entirely inside ComfyUI

A kinetic experiment drives image sequences to the music beat, going from foldered assets to a procedural flow and pointing to timing-accurate motion design without leaving the node graph. The team plans to walk through the workflow live, and a short clip shows the on-beat morphing visuals (Experiment clip, YouTube workflow).
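The core timing math behind any beat-synced sequence is mapping beats to frame indices. The sketch below assumes a constant tempo and is not the “EAT IT” workflow itself; `beat_frames` is a hypothetical helper.

```python
def beat_frames(bpm: float, fps: float, duration_s: float) -> list[int]:
    """Frame index nearest to each beat, assuming a constant tempo."""
    if bpm <= 0 or fps <= 0:
        raise ValueError("bpm and fps must be positive")
    seconds_per_beat = 60.0 / bpm
    frames = []
    beat = 0
    while beat * seconds_per_beat < duration_s:
        frames.append(round(beat * seconds_per_beat * fps))
        beat += 1
    return frames


# 120 BPM at 24 fps: one beat every half second, i.e. every 12 frames.
print(beat_frames(bpm=120, fps=24, duration_s=2.0))
```

Swapping the image on exactly these frame indices is what makes cuts land on the beat; real tracks with tempo drift would need detected beat times instead of a fixed BPM.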

ComfyUI highlights the surge of open‑source tools building on its graph editor

The team calls out a growing stack of OSS add‑ons and companion tools atop ComfyUI—useful context for studios deciding where to anchor pipelines and share nodes as portable assets Ecosystem comment.

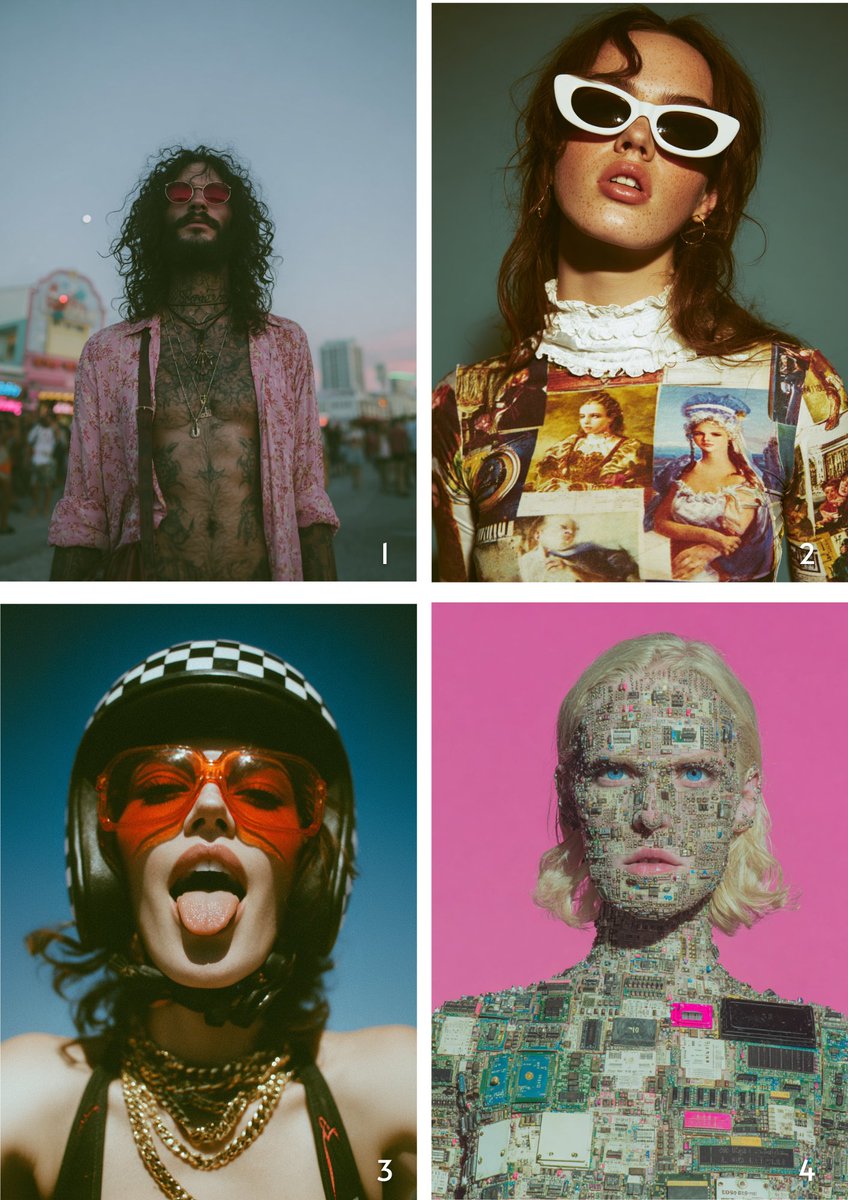

🎯 Steal these looks: MJ V7 + mosaic recipes

Fresh Midjourney style refs and prompt packs drop today—dark‑fantasy comic sref, a modular Mosaic prompt, pink‑chibi V7 collage params, and glossy portrait presets for editorial moods.

Midjourney sref 427834754 nails contemporary dark‑fantasy comic look

A new Midjourney style reference (--sref 427834754) delivers a contemporary American comic vibe—think Spawn, Venom, Conan—with muscular realism and dramatic lighting. It’s tuned for moody hero portraits and fantasy set pieces, with artist lineage callouts to Marc Silvestri, David Finch, Clayton Crain, and Joe Madureira Style reference.

For comic covers, character sheets, and promo one‑sheets, this sref gives you heavy inks, bold shapes, and high‑contrast glows without baby‑sitting every prompt detail.

New MJ V7 collage recipe: chaos 22 + sref 281066737 for pink chibi sets

A fresh V7 collage recipe lands with --chaos 22 --ar 3:4 --exp 15 --sref 281066737 --sw 500 --stylize 500 for cohesive, candy-color chibi grids. It’s a fast way to stamp out a whole character lineup with consistent palette, proportions, and lighting (Recipe post), following up on prior V7 sref collage presets.

Dial chaos between 12–28 to trade repeatability for exploration; keep sw high to lock the look while you iterate poses and roles.
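To sweep chaos without retyping the rest of the recipe, a small builder can hold the other parameters fixed. The parameter values come from the recipe above; the helper name and the example subject are illustrative only.

```python
def collage_params(chaos: int = 22) -> str:
    """Recipe parameter string with only chaos varied inside the suggested range."""
    if not 12 <= chaos <= 28:
        raise ValueError("chaos is outside the 12-28 range suggested above")
    return (
        f"--chaos {chaos} --ar 3:4 --exp 15 "
        "--sref 281066737 --sw 500 --stylize 500"
    )


# Three steps across the range for one (made-up) subject line.
for chaos in (12, 22, 28):
    print(f"pink chibi character lineup {collage_params(chaos)}")
```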

Reusable Mosaic prompt template drops with ATL examples

A clean, swap‑in Mosaic prompt template lands for Midjourney users: “A dynamic mosaic composition featuring a [subject] constructed from delicate [color] tiles…,” designed for precise geometric layouts and rhythm on white grounds. The post includes ATL examples (violin, ballerina, hummingbird, koi) to copy and adapt Prompt template.

Use this when you need consistent art‑direction across a set—brand iconography, product silhouettes, or editorial spot art—by only swapping subject and color tokens.
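Token swapping like this is easy to script. The sketch keeps the template exactly as quoted, including the trailing ellipsis where the post's text is cut off, and fills only the two bracketed tokens; the color values are made up for the example.

```python
# Quoted as published; the trailing ellipsis marks text elided in the quote.
MOSAIC_TEMPLATE = (
    "A dynamic mosaic composition featuring a [subject] "
    "constructed from delicate [color] tiles…"
)


def fill_mosaic(subject: str, color: str) -> str:
    """Swap the subject and color tokens, leaving the rest untouched."""
    return MOSAIC_TEMPLATE.replace("[subject]", subject).replace("[color]", color)


# Subjects from the post's examples; colors are illustrative.
for subject, color in [("violin", "amber"), ("koi", "vermilion")]:
    print(fill_mosaic(subject, color))
```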

Editorial “Patterns” zine prompts: collage shirt, checkered helmet, circuits face

Bri Guy shares a tight prompt set for “Patterns”—Japanese‑inspired textile themes, Victorian photo‑collage apparel, a checkered racing helmet portrait, and a transistors‑and‑circuits face mosaic. It’s a ready‑to‑run mini‑moodboard for stylists and art leads Prompt set.

Treat these as modular seeds: swap garment types, palette, or surface treatment while holding framing and camera notes to keep a campaign coherent.

Motion‑streak portrait pack shows MJ’s strength in style‑first imagery

A series of glossy, light‑streak portraits and action stills shows how far you can push V7 into editorial‑grade looks—skin speculars, orange‑cyan light trails, and kinetic blur baked into the render. The set spans faces, a spacecraft sprint, and a helmeted rider Portrait set, with more samples in a follow‑up drop More samples.

Good fit for music key art, fashion teasers, and title cards where the “look” carries more weight than literal scene accuracy.

🛠️ Leonardo maker kits: try‑ons, fonts, Ingredients

Leonardo drops practical features for creators—outfit try‑on, Font Matcher, hairstyle preview, and Veo3 Ingredients for compositing. Solid for branding, campaigns, and pre‑vis.

Leonardo Font Matcher instantly applies any scanned type to your text

Leonardo shows a one‑tap Font Matcher that scans a sample and applies the exact style to your own text, ready for key art and social graphics Feature video, following up on Font Matcher where it first demoed sample‑based type recreation.

Veo3 Ingredients lets you composite images and elements into a single video

Leonardo spotlights Veo3 Ingredients for blending images and elements into one controllable clip, so you can shape style and motion for story beats and brand spots from inside the same workspace Feature brief. Good for previz and shortform motion tests.

Leonardo adds outfit try‑on: inspo photo → flat‑lay → you wearing it

Leonardo’s outfit try‑on flow turns a reference outfit into a flat‑lay and a render of you wearing the full look, all inside the app Feature video. This is useful for campaign mockups, casting approvals, and creator merch looks without a studio.

Change My Hairstyle Blueprint previews bangs vs no‑bangs on your selfie

The “Change My Hairstyle” Blueprint lets you test bangs and other styles on a selfie for character design, casting lookbooks, or creator thumbnails before a real cut Blueprint video. It’s a fast way to align on hair direction in pre‑production.

📽️ Today’s standout reels

A quick skim of inspiring shorts and tests across tools: Luma Dream Machine Ray3, Grok Imagine anime cuts, Pollo 2.0 results, a Midjourney scare gif, and micro-film technique previews.

“Uncanny Harriet” returns as a fully AI-made pop video

Two years after the first Harriet clip, the new music video leans entirely on AI for visuals and lip‑sync—no human driving needed this time. The improvement is visible in facial realism and timing. Watch the full video.

Grok Imagine teases vivid anime-style cuts and angles

A compact anime teaser shows pink‑haired character beats across quick cuts, selling Grok Imagine’s pose variety and angle play in a single reel. Useful for testing consistency across edits and pacing. Watch the anime teaser.

Hailuo 2.3 powers creepy micro-shorts for the horror contest

Following up on contest launch, a creator posted an under-bed ankle-grab scare and a subway reflection set, both made with Hailuo 2.3. If you’re entering, the details are on the Contest site, and the subway beat is in the whisper reel.

WAN 2.2 on Comfy Cloud swaps characters in one-node workflows

Comfy Cloud shows a one‑node flow to auto‑replace characters inside a scene, yielding quick cinematic swaps. If you want to test it, grab the file from the Comfy Cloud repo and preview the swap demo.

A Midjourney scare-loop shows how subtle motion heightens unease

A creator claims one animated image can explain why Midjourney unnerves people—fast, photoreal transitions end on a crisp cityscape frame. It’s a good study in still→motion hybrids and final‑frame punctuation for title cards. See the scare loop.

Filmmaker previews upgraded techniques for Dubai submission

A director revisiting his first film shows 2024 vs 2025 portrait frames, citing “insane new techniques” and an upcoming breakdown while recruiting collaborators for a $1M festival run. See the before/after grid.

Hedra shows perfect character consistency across wild scene changes

Hedra’s reel demonstrates a single character staying visually locked while the world changes—useful for episodic series or branded avatars. Credits promo: 1,000 free via reply mechanics. See the consistency demo.

Leonardo turns outfit inspo into a flat-lay and try-on reel

Upload a fit pic → get a flat‑lay plus a generated image of you wearing it. It’s a concise loop for social creative and mood boards. Watch the try‑on demo.

Sora 2 reels pit crabs with knives against a battle bot

Two short Sora 2 clips stage an arena of knife-wielding crabs and a bot, showing action continuity and close-up impact shots. It’s a lighthearted test of motion and staging. See the crab duel and its counterpart, bot vs crabs.

Leonardo’s Font Matcher applies any style in seconds

Scan a reference and instantly apply the font style to your own text—handy for title cards and poster comps. See the matcher demo.

📚 Papers shaping creative AI

Mostly methods and toolkits relevant to media workflows—local AI efficiency, small‑model reasoning, GUI agents on human demos, self‑supervised video labels, and an upscaler LoRA Space.

GroundCUA drops 3.56M desktop UI labels; GroundNext hits SOTA

GroundCUA compiles 56K screenshots with 3.56M human‑verified annotations across 87 apps; the GroundNext models map instructions to UI targets and achieve SOTA with less training data, with RL fine‑tuning boosting agent performance paper card, ArXiv paper. Creative toolchains (NLEs, DAWs, Photoshop‑class apps) stand to gain more reliable GUI agents for repetitive edits and panel navigation.

Intelligence per Watt: 5.3× IPW gains put local LMs in play

Stanford et al. introduce Intelligence per Watt (IPW) to compare model accuracy per unit power and show a 5.3× IPW improvement from 2023–2025, with local LMs accurately handling 88.7% of single‑turn chat/reasoning queries and local accelerators still 1.4× less efficient than cloud ones paper card, ArXiv paper. For creatives, this signals more viable on‑device image/video assist and voice tasks without cloud round‑trips.
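As described above, the metric is accuracy per unit power, which makes comparisons a one-line ratio. The sketch below uses invented numbers purely to show how a setup that is both more accurate and less power-hungry compounds into a multi-fold gain; it does not reproduce the paper's measurements.

```python
def intelligence_per_watt(accuracy: float, avg_power_watts: float) -> float:
    """Accuracy delivered per watt of average power draw."""
    if avg_power_watts <= 0:
        raise ValueError("power must be positive")
    return accuracy / avg_power_watts


# Invented figures: an older setup versus a newer, more efficient one.
older = intelligence_per_watt(accuracy=0.60, avg_power_watts=60.0)
newer = intelligence_per_watt(accuracy=0.80, avg_power_watts=20.0)
print(f"improvement: {newer / older:.1f}x")
```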

DeepMind aligns vision models to human‑like concept grouping

Using odd‑one‑out tests, a teacher model, the THINGS dataset, and a large synthetic set (AligNet), DeepMind aligns vision models to group/separate concepts more like humans; the aligned models improve on understanding, reasoning, and few‑shot tasks research summary. Expect better "what’s similar here?" judgments in asset search, storyboard matching, and edit assistants.

VibeThinker‑1.5B coaxes big‑model reasoning from 1.5B params

A diversity‑driven optimization method (Spectrum‑to‑Signal) helps VibeThinker‑1.5B exhibit large‑model reasoning at a fraction of size/cost, a promising path for mobile‑grade storyboard, prompt planning, and shot‑list assistants paper card, ArXiv paper. The takeaway: smarter on‑edge helpers for writers and directors without hauling 70B+ models.

Adaptive multi‑agent refinement lifts dialogue factuality and tone

A multi‑agent framework refines conversational outputs along factuality, personalization, and coherence axes, outperforming single‑agent baselines on tough datasets paper card, ArXiv paper. Writers’ rooms and interactive NPC/dialogue tools can route drafts through specialized checkers rather than one monolithic pass.
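The routing idea can be sketched as a chain of specialized checkers applied until the draft stops changing. This is a simplified fixed-order loop, not the paper's adaptive framework, and the two string checkers are trivial stand-ins for model-backed agents.

```python
from typing import Callable

Checker = Callable[[str], str]


def refine(draft: str, checkers: list[Checker], max_rounds: int = 3) -> str:
    """Pass the draft through each checker in turn until nothing changes."""
    for _ in range(max_rounds):
        revised = draft
        for check in checkers:
            revised = check(revised)
        if revised == draft:
            break
        draft = revised
    return draft


def collapse_spaces(text: str) -> str:
    """Stand-in 'coherence' checker: normalize whitespace."""
    return " ".join(text.split())


def end_with_period(text: str) -> str:
    """Stand-in 'style' checker: ensure terminal punctuation."""
    return text if text.endswith((".", "!", "?")) else text + "."


print(refine("hello   world", [collapse_spaces, end_with_period]))
```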

ChronoEdit‑14B Upscaler LoRA gets a live Hugging Face Space

A public Space lets you try the ChronoEdit-14B Diffusers Upscaler LoRA for image recovery/inpainting pipelines, handy for rescuing stills and props before comp (app note, Hugging Face space). The workflow centers on diffusers-based upscaling, giving art teams a testable path without local setup.

VideoSSR: self‑supervised reinforcement scales video understanding sans labels

VideoSSR proposes a self‑supervised reinforcement scheme to train video understanding without expensive manual annotation, useful for action beats, cuts, and continuity cues at scale paper note. For filmmakers, cheaper scene semantics could power automated selects, beat detection, and rough cuts in dailies.

🗯️ The AI art argument, again

Debates flare about consumer demand for AI art; one creator shares monthly earnings and day rates; others cite Picasso’s “steal” quip and meme rebuttals. Sentiment vs. outcomes in focus.

Creator posts real earnings to rebut “no one wants AI art” claim

Following up on creator debate, Oscar (@Artedeingenio) says he makes “thousands of dollars a month” from AI art and has been offered $500/day, pushing back on a reply that “no consumer actually wants the AI art” Earnings rebuttal. He adds that multiple publishers reach out for collabs and he declines many due to time.

Why this matters: it shifts the conversation from sentiment to outcomes. If you’re pitching AI‑assisted visuals, this is a concrete day‑rate and demand signal you can benchmark against.

Meme rebuttals lampoon tool gatekeeping: Blender screenshots and “then so is AI”

Creators are countering purity tests with humor: “Memes, but they’re Blender screenshots” pokes fun at the idea that the tool defines the art Blender meme thread, while a separate clip shrugs, “If this is considered art, then so is AI” 3D face art clip. These spread fast and shape public perception—use them to soften tense stakeholder conversations before sharing case studies.

“AI can’t plagiarize; humans can” reframes theft debate around agency

Another thread argues that models lack agency, so plagiarism is a human act, not a machine act Plagiarism claim. This framing won’t settle legal fights, but it’s useful in client education: focus on your data sources, rights, and project scopes—the choices that make or break compliance.

Meta‑take: AI vs anti‑AI posts are sliding into rage‑bait engagement

One creator calls out the debate pattern as “rage bait engagement farming,” noting some spend more time arguing than sharing work Debate meta‑comment. For working artists, the takeaway is simple. Ship more, argue less. Show finished pieces, process, and credits to change minds.

Picasso’s “copy vs steal” quote resurfaces to defend AI workflows

Oscar leans on the attributed Picasso line “Good artists copy, great artists steal” to argue that AI‑assisted making fits a long tradition of derivative art, needling critics with “Do these AI haters think they’re better than Picasso?” Quote defense. The point is rhetorical, but it’s the frame many clients already hear. Prepare a cleaner answer about influence, licensing, and originality.

📣 Calls, galas, and demo days

Opportunities and recaps for creatives: Hailuo’s Horror contest (20k credits), Chroma Awards (> $175k pool), Hailuo LA immersive gala highlights, and Residency demo days in SF.

Free credits today: Hedra (1,000) and Higgsfield (204) limited windows

Two time‑boxed creator promos are live: Hedra is giving 1,000 credits for a “follow + RT + reply ‘Hedra Character Consistency’” (12 hours) Hedra offer, and Higgsfield is handing out 204 credits for “follow + retweet + reply” (8 hours) Higgsfield offer. Grab them to test character‑consistent image/video and one‑click angle shifts without spending.

Tip: reply from your main creative account so the credits DM lands where you work.

ComfyUI announces Friday live deep‑dive into beat‑synced animation

ComfyUI will stream a workflow session this Friday on building kinetic, beat-reactive image sequences entirely inside ComfyUI, with no external editor needed (event teaser). The session dissects the “EAT IT” patch from foldered assets to procedural animation; set a reminder on the YouTube video page.

This is useful if you score loops, bumpers, or reels and want timing locked to your audio.

The Residency SF demo days open RSVPs for Nov 18–19

The Residency is hosting two San Francisco demo nights in a 100+‑year‑old mansion: Builder Demo Day on Nov 18 and Investor Demo Day on Nov 19, featuring “30 of SF’s craziest builders.” Seats will hit capacity; RSVP is open now event details. Sign up directly via the Luma pages for the two nights investor demo day and builder demo day.

So what? If you’re shipping AI work, this is a tight room for feedback, future collaborators, and early users.

AI Slop Review goes live with GerdeGotIt at 10pm CET

The AI Slop Review livestream featuring GerdeGotIt airs today at 10pm CET, following up on live show notice with the watch link now posted. Expect a candid chat on making thoughtful art that still travels online episode drop, with the YouTube event link attached YouTube live.

Why care: it’s a rare hour where a top AI artist unpacks process, not tool hype.

Builder.io hosts Fusion 1.0 live demo on Nov 13

Builder.io is running a live demo for Fusion 1.0, its AI agent that spans product, design, and code, on Nov 13 (10am PT / 1pm ET). If you work in creative production, it’s a chance to see how feature specs turn into shippable UI with fewer handoffs (registration post); sign up via the webinar page.

So what? If Fusion can keep design and code in sync, it trims rounds of creative rework.