ElevenLabs Image & Video (Beta) supports Veo, Sora, Kling: 22% off for 7 days

Executive Summary

ElevenLabs rolled image and video generation directly into Studio, so you can create with Veo, Sora, Kling, Wan, and Seedance, then finish on a multi‑clip timeline without leaving the app. There’s a 22% launch discount for seven days. For many teams, that consolidates what used to take 3–5 apps into one place: voices, precise lipsync, music/SFX, and Topaz upscaling all sit in the same render pipeline.

The flow is simple: generate, send to Studio, swap in a cloned or library voice, tighten lip sync, add music, bump resolution, and export. Stills from Nano Banana, Flux Kontext, Wan, and Seedream can seed boards and assets that drop straight onto the timeline. Alibaba’s Wan team publicly confirmed Wan is in the model mix, and ElevenLabs says more engines are on the way, which makes the suite feel like a neutral hub rather than a walled garden. The practical win is control and speed: fewer round‑trips, cleaner text and faces on final passes, and consistency across edits.

If you’re stitching shots across tools, Kling 2.5 Turbo Pro’s Start/End Frame control also went live on two providers (fal and Runware), which pairs well with Studio when you need locked transitions between scenes.

Feature Spotlight

ElevenLabs becomes a one‑stop creative suite

ElevenLabs folds image+video into its audio studio (Veo, Sora, Kling, Wan, Seedance), adds lipsync and Topaz upscaling—turning it into a unified platform for creators to generate and finish pieces in one place.

Biggest creative stack news today: ElevenLabs adds built‑in image/video gen with top models, studio timeline, lip‑sync, music/SFX, and Topaz upscaling. Multiple accounts echo the shift. This section excludes other tool updates.

🎛️ ElevenLabs becomes a one‑stop creative suite

ElevenLabs launches Image & Video (Beta) with top models and Studio timeline

ElevenLabs rolled out Image & Video (Beta) with a 22% launch discount for seven days, letting you generate with Veo, Sora, Kling, Wan and Seedance, then finish in Studio with voices, music and SFX launch thread, product page. Following up on its licensed celebrity voice replicas, Studio lets you apply cloned or library voices across multi‑clip timelines.

It also supports stills with Nano Banana, Flux Kontext, Wan and Seedream for boards or assets you can refine, then bring straight into the editor launch thread. This consolidates what teams have been juggling across 3–5 separate apps into one place.

Topaz upscaling and precise lipsync land inside ElevenLabs Studio

The new suite bakes in Topaz upscaling for both images and videos, plus lipsync and voice‑swap directly in Studio, so you can tighten narration and boost resolution without round‑tripping to other tools feature brief. In practice: generate, export to Studio, adjust the timeline, refine the VO with a cloned or library voice, add music/SFX, and render.

For small creative teams, this cuts export/import steps and reduces artifact‑ridden final passes, especially on branded edits where crisp text and synced VO matter.

Alibaba confirms Wan powers ElevenLabs’ new image/video suite

Alibaba’s Wan team says Wan is among the models inside ElevenLabs’ Image & Video (Beta), a first‑party confirmation of the official lineup partner note, alongside Veo, Sora, Kling and Seedance model list. For creators, this means Wan‑style photorealism sits in the same pipeline as voice and music, with no separate export.

Expect more models to show up over time; ElevenLabs notes “more image and video models are coming soon” in its thread model list.

🎬 Frame‑locked control and fast restyles

Cinematic control updates for filmmakers: start/end frame guidance lands across providers, plus quick restyle pipelines. Excludes the ElevenLabs launch (covered above).

Kling 2.5 Turbo Pro Start/End Frame goes live on fal and Runware

Kling 2.5 Turbo Pro’s first/last‑frame control is now shipping across two providers: it’s live on fal’s Pro endpoint and exposed via Runware’s API. That means shot‑locked transitions and scene joins you can plan rather than chase, which is useful for storyboards and precise beats. This follows early creator tests of Start/End frames.

Creators can trigger it on fal’s hosted model with guidance presets Launch thread and dig into Runware’s implementation for programmatic workflows API update. For pricing and model details, see the Fal model page.
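One way to plan those joins is to treat the storyboard as an ordered list of keyframes and request one clip per consecutive pair, so each clip's end frame is exactly the next clip's start frame. A minimal sketch that only builds the shot list; the `ClipRequest` fields (`start_image`, `end_image`, `prompt`) are hypothetical and do not mirror fal's or Runware's actual request schema, so map them onto each provider's documented parameters.

```python
from dataclasses import dataclass


@dataclass
class ClipRequest:
    # Hypothetical request shape; rename fields to match your provider's real schema.
    start_image: str
    end_image: str
    prompt: str


def plan_locked_transitions(keyframes: list[str], prompts: list[str]) -> list[ClipRequest]:
    """Pair consecutive keyframes so each clip ends exactly where the next one begins."""
    if len(prompts) != len(keyframes) - 1:
        raise ValueError("need one prompt per transition (len(keyframes) - 1)")
    return [
        ClipRequest(start_image=a, end_image=b, prompt=p)
        for a, b, p in zip(keyframes, keyframes[1:], prompts)
    ]


shots = plan_locked_transitions(
    ["frame_a.png", "frame_b.png", "frame_c.png"],
    ["slow dolly in on the door", "push through into the hallway"],
)
for shot in shots:
    print(shot.start_image, "->", shot.end_image)
```

Sharing keyframes between neighbouring requests is what makes the cut points deterministic; the model only has to solve the motion in between.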

OpenArt adds one‑click video restyle with preset looks

OpenArt launched a drag‑and‑drop Video Restyle: upload a clip, pick anime/retro/comic/sci‑fi/fantasy, choose resolution, and render. It’s a fast route for creators who need on‑brand variants without rebuilding shots.

The feature runs from a simple web flow Feature demo with the product page outlining supported modes and output options OpenArt restyle.

ComfyUI Subgraphs show stylize→loop pipelines in the cloud

A new ComfyUI cloud workflow highlights Subgraphs as a clean way to stylize an input image and output a looped transformation video. Only the key controls are surfaced, so you can learn and then tinker deeper when needed.

The team shared the runnable workflow JSON for direct cloning and edits Cloud workflow, with the graph available on GitHub for inspection or remixing Workflow JSON.

LTX Studio’s Elements speed reusable product comps and variants

LTX Studio is pushing an Elements workflow: save a clean product once, tag it, then composite it across shots in Gen Space or Storyboard. The tips stress saving multiple angles, HEX color swaps, and testing rhythm in Storyboard before production.

Producers can pair Elements with Nano Banana for realism bumps Pro tips thread and jump from the landing page into project flows and docs LTX landing.

Seedream 4.0 speeds batch edits with multi‑reference inputs

Seedream 4.0 now accepts multiple reference images at once and returns cohesive sets fast, aimed at batch iteration for product and campaign visuals. It’s positioned to cut round‑trips when you need consistent style across angles.

BytePlus teased the workflow and results for creative teams evaluating throughput and consistency Feature brief, with a short explainer video linked for a quick scan Product video.

Pollo AI rolls out Flash Templates for quick promo restyles

Pollo AI introduced Flash Templates geared for making products “pop” with pre‑built motion/lighting setups, plus 30+ Black Friday presets and 3 free uses each through Dec 1. It’s a low‑friction way to restyle promos without timeline work.

The launch thread includes a direct link to the templates and a small credit promo for trying them today Launch thread, with the template gallery live for immediate tests Template page.

🤖 Agentic video: zero‑input episodes and model pickers

Early signs of autonomous long‑form generation and toolchains. Excludes the ElevenLabs suite (feature) to focus on agent workflows and model choice UX.

NoSpoon agent generates a zero‑input 25‑minute episode on Sora 2

NoSpoonStudios says its video agent completed an autonomous 25‑minute episode with Sora 2, with no user input, and is testing 10‑minute+ lengths before a wider release Autonomous episode post. The team also highlights the agent works across engines, showing a creator piece powered by Luma and noting Ray 2 remains available Creator showcase.

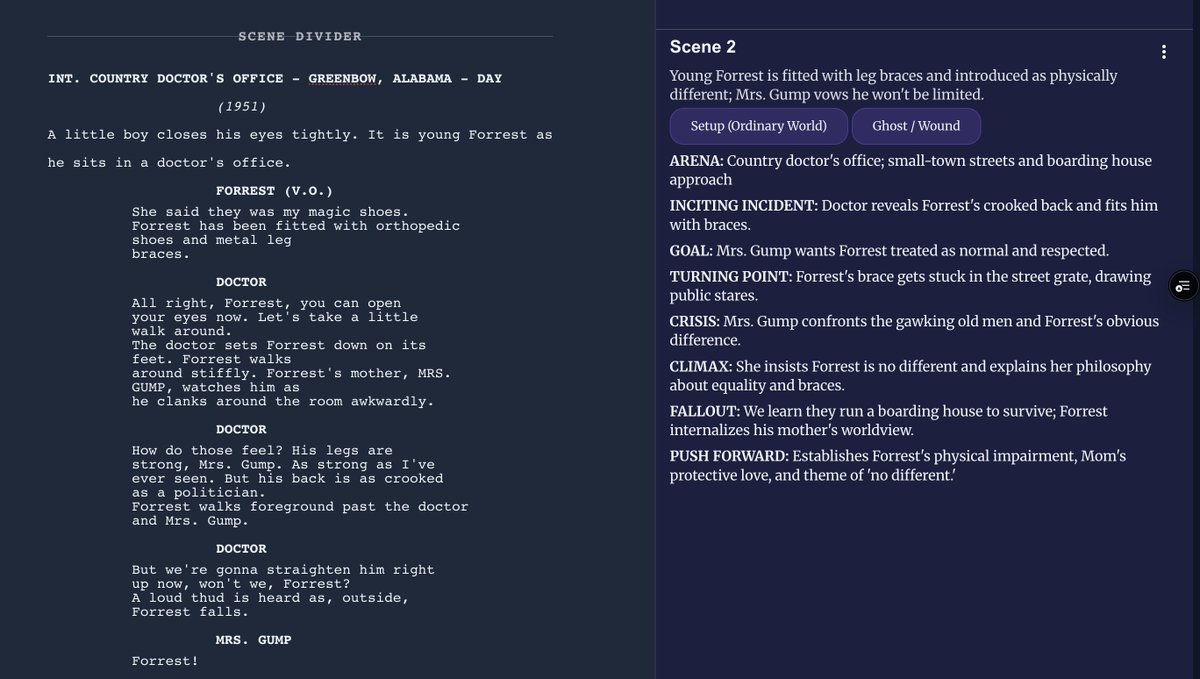

BeatBandit adds screenplay import that auto‑maps acts, beats, and scenes

BeatBandit can ingest a full script and, in roughly 5–10 minutes, break it into logline, theme, acts, per‑scene crises, and a reverse map from scenes to global beats; it then drops the result into an editor that links scenes back to beats Feature thread. For agentic pipelines, this gives a structured plan the video agent can follow or revise shot‑by‑shot Editor view.

Synthesia avatars now perform actions and move through scenes; free‑tier access

Synthesia’s latest update takes avatars beyond talking heads: you can prompt actions, environments, and outfits, and the system renders characters moving through a scene—useful for product demos and training Feature video. The company notes this capability is available to everyone, including the free plan, which lowers the barrier for testing.

Wondercraft surfaces a multi‑model picker: Sora 2 Pro, Sora 2, Veo 3.1

Wondercraft’s studio lists several top video models side‑by‑side for creators, including Sora 2 Pro, Sora 2, and Veo 3.1, signaling a straight‑from‑UI way to A/B outputs without hopping tools Model list. This model‑picker pattern matters for teams building longer autonomous runs, where routing to the best engine per shot is a real gain.
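Per-shot routing is easy to make explicit once the models sit in one picker. A minimal sketch, assuming you tag each shot yourself; the model names come from the list above, but the `ROUTES` table and its tag-to-model choices are hypothetical preferences, not benchmark results.

```python
# Hypothetical routing preferences: which engine to try first for each shot tag.
ROUTES = {
    "dialogue": "Veo 3.1",
    "action": "Sora 2 Pro",
    "draft": "Sora 2",
}
DEFAULT_MODEL = "Sora 2"


def route_shots(shots: list[dict]) -> list[tuple[str, str]]:
    """Return (shot_id, model) pairs, falling back to a default for unknown tags."""
    return [(s["id"], ROUTES.get(s["tag"], DEFAULT_MODEL)) for s in shots]


plan = route_shots([
    {"id": "s01", "tag": "dialogue"},
    {"id": "s02", "tag": "action"},
    {"id": "s03", "tag": "b-roll"},
])
print(plan)
```

Keeping the table as data means an A/B result can change the routing without touching the pipeline code.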

Pictory’s Chrome extension turns any webpage into an edit‑ready video

Pictory shipped a Chrome extension that converts a live URL into a pre‑cut video project with script and assets, removing the copy‑paste step for explainer and repurpose workflows Chrome demo. The listing is live for immediate install via the Chrome Web Store Chrome extension.

🧙‍♂️ Character realism and 3D from one photo

Big upgrades for character artists and game/film pre‑viz: a new HD character model and single‑image→3D. Excludes ElevenLabs news.

Hedra ships Character‑3 HD with 2,500‑credit tryout

Hedra unveiled Character‑3 HD, a higher‑fidelity character model billed as delivering cinematic realism, and is offering 2,500 credits via a follow + RT + “Character‑3 HD” reply flow so creators can test it immediately release thread.

For character artists and previs teams, that should mean sharper skin detail and stronger facial coherence in close‑ups if the claims hold, and the credit offer is a low‑friction way to A/B it against your current stack this week.

Hunyuan3D teases single‑image to 3D modeling for creators

Tencent’s Hunyuan3D highlighted a full 3D model generated from a single photo and invited creators to try it and share results model invite. If it holds up, this can turn reference stills into usable 3D bases for games, previs, and concept passes without a photogrammetry setup.

🧩 Product pipelines with LTX Elements + Seedream 4.0

Today’s how‑tos center on product shots at scale: LTX publishes a 3‑step Elements flow with pro tips; Seedream 4.0 adds multi‑reference batch output. Excludes the ElevenLabs feature.

LTX Elements posts a 3‑step product workflow with Storyboard and pro tips

LTXStudio laid out a practical product‑shots pipeline using Elements: Save your product, Tag it in Gen Space/Storyboard, then Composite multiple Elements into final shots—with Storyboard to test campaign flow. The thread adds concrete tips: save the product from multiple angles to keep geometry consistent, tweak brand colors via HEX codes, and pair with Nano Banana for added realism and polish Step 1 video, Step 2 video, Pro tips post, Workflow recap.

If you’re building ad sets or decks, this is built for scale: reuse the same saved Element across dozens of compositions, maintain look integrity, then lock rhythm in Storyboard before production. Full docs and access live on the LTX site LTX site.

Seedream 4.0 adds multi‑reference batch generation for product images

Seedream 4.0 now takes multiple reference images at once and returns cohesive, high‑quality variations in batches, useful for product shots where you want consistent angles, textures, and lighting across a set. Following up on its Type-to-edit text‑guided edits, today’s post emphasizes "One click. Multiple results" and shows watch examples that translate well into thumbnails, storyboards, and catalog visuals Feature post.

If you need proof points for stakeholders, BytePlus also points to a retail workflow case study you can share internally YouTube case study.

🧠 LLMs for research and drafting (hands‑on today)

Writers and researchers get new options: GPT‑5.1 reaches ChatGPT, Grok 4.1 rolls out broadly with strong Elo claims (mixed creator tests), and Perplexity hosts Kimi K2 Thinking on web. Excludes the ElevenLabs platform.

OpenAI rolls out GPT‑5.1 Instant and Thinking to ChatGPT and API

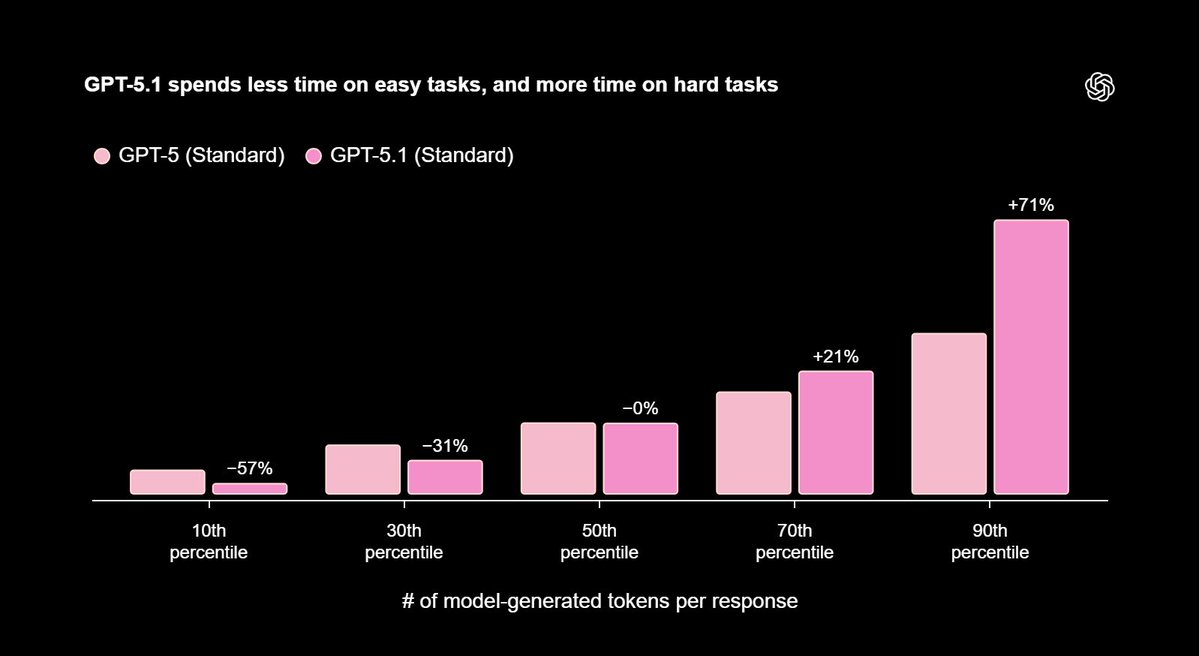

OpenAI is rolling out GPT‑5.1 to paid ChatGPT users today in Instant and Thinking variants, with API access later this week as gpt-5.1-chat-latest (Instant) and gpt-5.1 (Thinking). OpenAI claims clearer writing, better math/coding, adaptive reasoning, and new personalization presets. See the rollout notes for specific gains and a chart of token spend on easy versus hard tasks. rollout notes

For research and drafting, this means faster short answers but deeper persistence on tough questions, and better adherence to custom instructions. API teams also get prompt caching up to 24 hours and specialized coding models, which helps long research sessions and iterative drafting. api update

Grok 4.1 goes live across web, X, and apps with top preliminary Elo

xAI’s Grok 4.1 is now selectable on grok.com and within X on iOS/Android, with a "Thinking" variant and preliminary LMArena Elo leads: 1483 for Thinking and 1465 for the non‑reasoning model. model picker leaderboard claims
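To gauge how big an Elo lead is, the classic Elo formula converts a rating gap into an expected head-to-head preference: E = 1 / (1 + 10^((R_b − R_a) / 400)). A quick sketch applying it to the two preliminary Grok 4.1 scores; this assumes LMArena's scores follow the standard 400-point logistic scale, which is an approximation of how the leaderboard is actually fitted.

```python
def elo_expected(r_a: float, r_b: float) -> float:
    """Probability that A is preferred over B under the classic Elo logistic model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))


p = elo_expected(1483, 1465)  # Grok 4.1 Thinking vs non-reasoning (preliminary scores)
print(f"{p:.3f}")
```

An 18-point gap works out to roughly a 53/47 split in pairwise votes, which is why side-by-side checks on your own prompts still matter.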

Early creator trials for research‑style prompts are mixed: one test shows fast, detailed synthesis, while another found underwhelming responses relative to expectations. Treat it as promising but worth side‑by‑side checks before switching workflows. creator test critical take

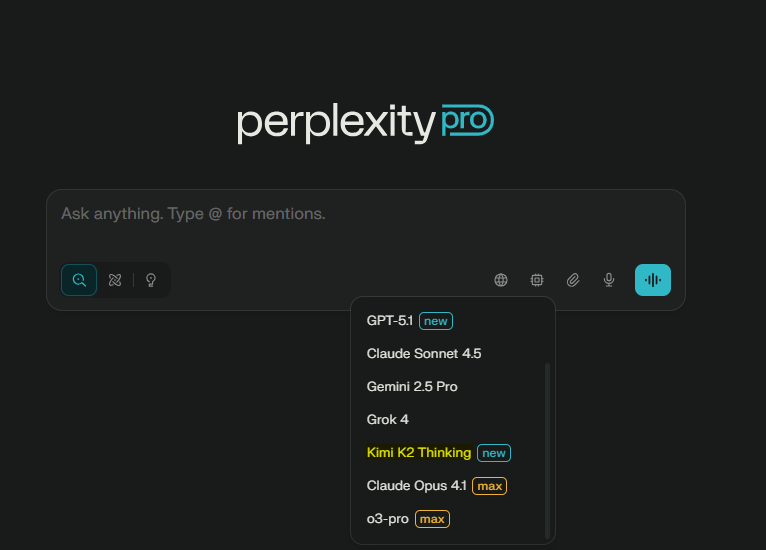

Perplexity adds Kimi K2 Thinking (Chinese) on web; Android pending

Perplexity has added Kimi K2 Thinking to its model selector on the web, hosted by Perplexity as previously indicated; users report strong performance. The Android app doesn’t show it yet. If you research Chinese sources or want a different long‑thinking profile, this adds another capable option to rotate. model picker

ResearchRubrics benchmark debuts to grade deep research agents

Scale AI introduced ResearchRubrics, a benchmark with 2,500+ expert rubrics to score factual grounding, reasoning, and clarity on deep research tasks. Early results show that leading agents, including big‑name deep research (DR) systems, average under 68% rubric compliance, flagging missed implicit context and weak synthesis. This is useful if you’re vetting LLM research workflows or routing across models. paper summary ArXiv paper

📝 Script breakdown assistants go pro

BeatBandit adds screenplay import with automated beats/scene mapping and an editor that links scenes to story structure—handy for directors and editors.

BeatBandit adds screenplay import with automatic beats and scene mapping

BeatBandit now ingests a full screenplay and, in about 5–10 minutes, generates a linked breakdown: logline/theme, acts and major beats, character notes, a scene list with per‑scene crises, and a reverse map tying scenes back to global beats Feature thread and Setup steps. The built‑in editor links each scene to its story beats—positioned as beyond what Final Draft offers—so directors and editors can jump from structure to page and back without manual cross‑referencing Editor link, Analysis details, and Scene mapping.
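The reverse map is essentially an inversion of a beat-to-scenes index. A minimal sketch, assuming beats and scene IDs are plain strings; BeatBandit's internal data model isn't described here, so `reverse_map` and the sample beats are hypothetical.

```python
from collections import defaultdict


def reverse_map(beats_to_scenes: dict[str, list[str]]) -> dict[str, list[str]]:
    """Invert beat -> scenes into scene -> beats, preserving beat order."""
    scenes_to_beats: dict[str, list[str]] = defaultdict(list)
    for beat, scenes in beats_to_scenes.items():
        for scene in scenes:
            scenes_to_beats[scene].append(beat)
    return dict(scenes_to_beats)


beats = {
    "Inciting incident": ["sc03"],
    "Midpoint": ["sc14", "sc15"],
    "All is lost": ["sc15", "sc22"],
}
print(reverse_map(beats)["sc15"])
```

Keeping both directions lets an editor jump from a scene to every beat it serves, which is the cross-referencing the feature automates.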

🎨 Style recipes and prompt packs you can reuse

A strong crop of reusable looks and token lists for illustrators and photographers; mostly prompt frameworks and consistency hacks. Excludes ElevenLabs items.

Midjourney V7 comic‑book template (3:2) lands with clean, punchy outputs

A reusable Midjourney V7 prompt packs bold outlines, motion lines, vivid primaries and --ar 3:2 to turn any subject into a dynamic “charging forward” panel Prompt template.

One‑ref “Nano Banana” template locks a glitched stripe aesthetic across scenes

Using a single reference image as [@]img1, this template forces Nano Banana to keep the same distorted stripes, palette and glitch across new sports shots and even objects—copy: “Create an editorial [sport‑type] [shot‑type] image that follows the same style and colour palette as [@]img1.” Template thread.

Grok Imagine fashion/portrait token pack: projection mapping, pink/cyan, Pro 400H

A compact token list steers Grok Imagine toward editorial neon and projection‑mapped looks—e.g., “Pink and cyan interplay,” “Facial light patterning,” “Fujifilm Pro 400H”—ready to mix into prompts Token list.

Neo‑realistic fantasy anime style ref (--sref 4054265217) for cinematic portraits

Drop --sref 4054265217 into MJ prompts to inherit a polished, studio‑grade fantasy anime look with warm tones, diffuse shadows and semi‑realistic rendering Style ref post.

New MJ V7 sref 3794279776 adds a clean collage aesthetic

A fresh MJ V7 recipe, --sref 3794279776 with chaos 10, stylize 500 and sw 200, yields crisp, poster‑ready collage panels Prompt recipe, following up on the Warm watercolor sref.
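Because these recipes are just parameter suffixes, they are easy to keep as reusable presets. A small sketch, assuming you paste the resulting string into Midjourney yourself; `--sref`, `--chaos`, `--stylize`, `--sw`, `--v` and `--ar` are Midjourney parameters, while `PRESETS` and `build_prompt` are hypothetical helpers.

```python
# Hypothetical presets mirroring the sref recipes in this section.
PRESETS = {
    "collage_v7": {"sref": "3794279776", "chaos": 10, "stylize": 500, "sw": 200, "v": 7},
    "fantasy_anime": {"sref": "4054265217"},
}


def build_prompt(subject: str, preset: str, **overrides) -> str:
    """Append Midjourney-style --flags from a named preset; overrides replace preset values."""
    params = {**PRESETS[preset], **overrides}
    flags = " ".join(f"--{key} {value}" for key, value in params.items())
    return f"{subject} {flags}"


print(build_prompt("vintage travel poster of a lighthouse", "collage_v7", ar="3:2"))
```

Storing the recipe once and overriding single values (say, a higher chaos) keeps a series visually consistent while you explore.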

Gemini Nano Banana JSON prompt for cinematic K‑pop/goth portraits

A full JSON spec pins camera (85mm, f/1.8), pose, lighting gels and palette for reproducible studio portraits; tweak fields like background_color or shot_type to iterate fast Prompt JSON.
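The same iterate-one-field idea in code: keep the spec as a dictionary and emit one JSON prompt per variation. The camera values come from the post, and `background_color` and `shot_type` are fields it names; the other keys and all sample values are hypothetical stand-ins, since the full JSON isn't reproduced here.

```python
import json

BASE_SPEC = {
    "shot_type": "medium close-up",
    "camera": {"focal_length_mm": 85, "aperture": "f/1.8"},
    "pose": "three-quarter turn, chin down",  # hypothetical value
    "lighting": "magenta and teal gels",  # hypothetical value
    "palette": ["black", "violet", "silver"],  # hypothetical value
    "background_color": "#111111",
}


def variants(field: str, values: list) -> list[str]:
    """Return one JSON prompt per value, changing only the given top-level field."""
    return [json.dumps({**BASE_SPEC, field: value}) for value in values]


for prompt in variants("background_color", ["#111111", "#2B0A3D"]):
    print(prompt)
```

Changing a single field per render makes it clear which knob caused a difference between outputs.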

Grok Imagine landscape pack: four ALT prompts for surreal worlds

Four reusable ALT prompts generate cohesive sci‑fantasy terrains—etched monoliths, ribbed tree‑towers, golden deserts, storm valleys—with lighting cues you can recycle across shots ALT prompts.

Nano Banana “scribble art” prompt for single‑line characters with pen reveal

A structured prompt produces continuous‑line portraits on white with a visible pen at the edge; swap [Character Name] to reuse the exact look and framing Prompt card.

📣 Ad kits + Black Friday perks for creators

Today’s best creator‑side deals and ad templates across platforms. Excludes the ElevenLabs launch to keep this focused on promos/workflows.

Higgsfield BF: 65% off + unlimited image models, 9‑hour 300‑credit drop

Higgsfield is running a Black Friday sale at 65% off annual plans with a year of unlimited image‑model usage, plus a 9‑hour social giveaway for 300 credits if you follow, repost, and comment. For teams making ads, the plan covers a wide model roster. Following up on the earlier 65% off announcement, the new hook is the timed 300‑credit boost.

See the terms in the launch reel Deal thread, and plan selection on the site Pricing page.

Kling AI BF deal: 50% off year one, up to 40% extra credits, Unlimited Generation

Kling AI’s Black Friday offer cuts your first‑year subscription by 50%, boosts top‑ups with up to 40% extra credits, and unlocks Unlimited Generation for Ultra and Premier tiers. This directly lowers cost per iteration for video teams.
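To see how these perks change cost per iteration, compare the effective price per credit. A back-of-envelope sketch with hypothetical prices and credit counts: the 50% discount applies to the first-year subscription and the up-to-40% bonus to top-ups, so they affect different purchases, and `cost_per_credit` is an illustrative helper, not a Kling tool.

```python
def cost_per_credit(price: float, credits: int, discount: float = 0.0, bonus: float = 0.0) -> float:
    """Effective price per credit after a price discount and a bonus-credit rate."""
    return price * (1 - discount) / (credits * (1 + bonus))


# Hypothetical list price: $100 for 1,000 credits.
base = cost_per_credit(100.0, 1000)
subscription = cost_per_credit(100.0, 1000, discount=0.50)  # 50% off year one
top_up = cost_per_credit(100.0, 1000, bonus=0.40)  # top-up at the maximum 40% bonus
print(f"base {base:.4f}, subscription {subscription:.4f}, top-up {top_up:.4f}")
```

Note that a 40% credit bonus lowers the per-credit price by about 29% (1 − 1/1.4), not 40%.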

Full slate in the official post Deal launch; creator round‑up flags it as a tempting pick for AI video work Creator note.

Pictory BFCM: 50% off annual + 2,400 credits, plus a Chrome web‑to‑video tool

Pictory’s Black Friday/Cyber Monday promo halves annual pricing and adds 2,400 AI credits, with a bonus coaching session noted in‑thread. For social teams, the new Chrome extension can turn any webpage into an edit‑ready video, which pairs well with the discounted credits.

Deal specifics and pricing are here Sale terms and on the site Pricing page. The browser add‑on workflow is shown step‑by‑step Extension demo.

Pollo 2.0: 30+ Black Friday Flash templates (3 free uses each) + $10K contest

Pollo AI shipped 30+ Black Friday Flash templates for product ads, giving 3 free uses per template through Dec 1 and 100 credits via follow + repost + reply “Flash.” There’s also a Pollo 2.0 community ranking contest with a $10,000 prize pool running Nov 17–30.

Template reel and free‑use details are here Templates post with the page linked Templates page. Contest steps and submission form are in the announcement Contest post and the form Contest form.

Hailuo Minimax 2.3 is currently unlimited at 768p on Freepik

Creators report Hailuo Minimax 2.3 is “currently UNLIMITED” on Freepik at 768p, making it a way to test longer runs and motion ideas right now without burning credits. This is a quiet but valuable perk for budget‑sensitive video experiments.

Note the community heads‑up here Freepik unlimited.

Hedra Character‑3 HD promo: 2,500 credits for follow/RT/reply

Hedra released Character‑3 HD for high‑realism characters and is granting 2,500 credits via DM if you follow, repost, and reply with “Character‑3 HD.” Useful for creators needing close‑up faces and consistent expressions without upfront spend.

See the offer and visual quality in the launch post Model and credits.

🎙️ Voices and music in minutes (non‑Eleven)

Rapid voice and music options for creators outside of the ElevenLabs feature: Firefly speech feels natural, Producer.ai adds memories, and a 12‑link resource thread covers lipsync workflows.

Firefly’s Generate Speech gets creator thumbs‑up for emotional reads

Adobe Firefly’s Generate Speech is earning real‑world praise for natural pacing and tone control that makes reads feel human, following up on Firefly speech tutorial coverage. A creator reports dropping in a script, picking a voice, and nudging tone to get an emotional delivery, with a direct link to try it now Creator review and the tool page Firefly page.

For quick dubs, character VO, or temp narration, this cuts setup time to minutes while keeping control over feel. The same creator also shares a direct “try it” link Try link.

Producer.ai adds “Memories” to learn your taste and speed up song creation

Producer rolled out Memories, an opt‑in profile that learns your preferences so the tool composes tracks that feel more like you. The team’s short demo shows the feature inside the app, with a promo code (MUSIC1) shared to get started today Feature thread and a follow‑up nudge with the same code Promo code.

This is useful when you need consistent sonic identity across shorts, tutorials, or ad edits and want to reduce prompt wrangling over time.

12 free links to ship AI cover vocals: lipsync, visuals, thumbnails

Techhalla compiled 12 free resources that walk through the whole AI cover pipeline—lipsync, stills, animations, transitions, and thumbnail craft—so you can move from idea to upload fast Guide thread. The thread calls out Infinite Talk on Higgsfield Speak as the go‑to for dependable lip‑sync when animating covers Lipsync pick.

If you’re piecing together tools for YouTube or Shorts, this gives a concrete starting set and a tested lip‑sync choice without touching ElevenLabs.

🏗️ Infra shifts that speed creator tools

Platform and runtime moves likely to improve latency/cost for creative workloads. Excludes ElevenLabs’ feature story.

Replicate joins Cloudflare to run models closer to users

Replicate is joining Cloudflare and will remain a distinct brand while gaining speed, more capacity, and deep integration with the Cloudflare Developer Platform Deal announcement. For creative teams, that points to lower latency at the edge and simpler deployments for image/video inference.

If Cloudflare Workers AI and caching back Replicate-hosted models, expect faster cold starts and steadier global throughput during launches.

GMI to build $500M Taiwan AI data center with ~7,000 Blackwell GPUs

GMI will invest $500M to stand up a high‑density AI data center in Taiwan powered by roughly 7,000 NVIDIA Blackwell GB300 GPUs Investment details. More training and inference capacity should ease peak‑time congestion and stabilize pricing for video and image generation backends used by creative tools.

LMSYS debuts SGLang Diffusion acceleration with Wan-series support

LMSYS introduced SGLang Diffusion, bringing runtime acceleration for diffusion models with support for Alibaba’s Wan-series, according to Alibaba Wan’s team Acceleration note. Faster inference loops cut render queues and can lower per-shot costs when platforms adopt it, which matters for batch style frames and storyboards.

📚 Thinking‑aware editing and agent orchestration (papers)

Fresh papers for creative AI: multimodal editing with reasoning, deep‑research evaluation, interleaved generation, and agent orchestration. Mostly method/benchmark releases today.

MMaDA‑Parallel adds thinking‑aware editing with 6.9% alignment gain on ParaBench

A new diffusion+language framework, MMaDA‑Parallel, keeps text and image reasoning in sync during denoising and adds Parallel RL (ParaRL) guided by semantic rewards. The authors report a 6.9% Output Alignment lift over the prior Bagel baseline on the new ParaBench, aimed squarely at thought‑consistent edits and generations. This matters if your edits keep drifting from what the prompt implies.

ResearchRubrics: 2,800+ hours and 2,500+ rubrics to grade deep research agents

Scale’s ResearchRubrics pairs domain prompts with 2,500+ expert rubrics (built with 2,800+ human hours) to score factual grounding, reasoning, and clarity in long‑form, evidence‑backed answers. Early runs show leading agents average under 68% rubric adherence—useful reality checks if you rely on agents for briefs, treatments, or research docs.
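Rubric adherence of this kind boils down to a pass rate over checklist items. A minimal sketch, assuming each rubric item is scored pass/fail and tasks are averaged with equal weight; ResearchRubrics' actual scoring (item weights, partial credit) may differ, and the sample results below are made up.

```python
def task_compliance(item_results: list[bool]) -> float:
    """Fraction of rubric items a single response satisfies."""
    return sum(item_results) / len(item_results)


def mean_compliance(tasks: list[list[bool]]) -> float:
    """Unweighted average of per-task compliance."""
    return sum(task_compliance(t) for t in tasks) / len(tasks)


# Made-up pass/fail results for three research tasks.
runs = [
    [True, True, False, True],
    [True, False, False, True, True],
    [True, True, True, False],
]
print(f"{mean_compliance(runs):.1%}")
```

Scoring your own briefs this way, even with a short hand-written rubric, gives a concrete number to compare agents against before trusting them with research docs.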

WEAVE debuts a suite for interleaved multimodal comprehension and generation

WEAVE introduces tasks and metrics where models must read images, follow text instructions, then alternate between understanding and generating across turns. It focuses on in‑context, multi‑turn workflows—useful for tools that refine shots or composites step‑by‑step—showing clearer wins over single‑shot editors in the provided comparisons.

UFO³ proposes cross‑device agent orchestration with a mutable “TaskConstellation” DAG

UFO³ models a user goal as a distributed DAG of atomic “TaskStars,” letting an orchestrator schedule, adapt, and recover tasks across heterogeneous devices. For creative teams, the idea maps well to agent pipelines that juggle render nodes, mobile capture, and cloud LLM tools under one evolving plan.
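The core pattern here is dependency-ordered scheduling over a DAG. A minimal sketch using Python's standard `graphlib.TopologicalSorter` to release tasks in waves once their prerequisites finish; the task names and `@device` labels are hypothetical, and this is not UFO³'s orchestrator, which also edits the graph at runtime as tasks succeed or fail.

```python
from graphlib import TopologicalSorter

# Hypothetical creative pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "capture_plates@phone": set(),
    "write_shotlist@cloud_llm": set(),
    "render_shots@gpu_node": {"capture_plates@phone", "write_shotlist@cloud_llm"},
    "generate_vo@cloud_tts": {"write_shotlist@cloud_llm"},
    "final_edit@workstation": {"render_shots@gpu_node", "generate_vo@cloud_tts"},
}


def run_in_waves(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into waves whose members can run in parallel."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        # A real orchestrator would call done() as each device reports completion.
        sorter.done(*ready)
    return waves


for i, wave in enumerate(run_in_waves(pipeline)):
    print(i, wave)
```

Because `done()` can be called per task, the same loop extends naturally to devices finishing at different times.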

✨ Showreels and shot specs to spark ideas

Inspiration corner: micro‑reels and prompt‑rich examples across Luma, Grok Imagine, and Veo. Excludes the ElevenLabs news to keep this a pure creative gallery.

Dark‑fantasy comic sting in Grok Imagine nails reveal + title beat

A short Grok Imagine clip lands a moody winged reveal then a clean title card, useful as a logo sting or cold open for horror‑fantasy pieces short clip. The glow control and deep blacks should grade well into teaser slates.

ImagineArt 1.5 montage showcases crisp portraits, paintings, and party scenes

A creator’s montage claims a convincing “real‑photo” feel across varied subjects—handy to mine for stills before you animate or comp model montage. Use it to spot strengths in skin, eyes, and mixed lighting.

Neo‑realistic fantasy anime sref 4054265217 locks cinematic studio finish

A new Midjourney style ref (--sref 4054265217) yields a warm, neo‑realistic fantasy look with polished studio lighting—useful for consistent series work across characters and scenes style refs. Keep it in your sref stack to maintain continuity.

Otherworldly landscape set comes with usable ALT prompts for quick lookbooks

Four Grok Imagine landscapes—glowing maze monolith, ribbed megaflora, carved desert, and wave‑stone valley—ship with detailed ALT prompts you can reuse to seed environment packs prompted set. Iterate time of day and haze to build a cohesive series.

Seedance 1.0 Pro Fast reels emphasize sharper faces and stable motion

Runware posted multiple quick reels showing face sharpness, landscape detail, and side‑by‑side comparisons under the “Pro Fast” mode, good for realism checks before you commit to a project reel set. Try mirroring one test for an apples‑to‑apples comparison.

Grok Imagine portrait tokens: a compact list for editorial lighting looks

A token pack—Projection mapping, Pink/cyan interplay, Facial light patterning, Fujifilm Pro 400H, and more—pairs with a collage of examples to steer Grok’s fashion/portrait looks token list. Save it as a reusable lighting menu for shoots.

MJ V7 collage recipe (sref 3794279776) delivers cohesive panel sets

A V7 recipe—--sref 3794279776 with chaos 10, stylize 500, sw 200—produces haloed, cohesive collage panels ideal for mood boards that feel unified settings post. Mix single‑subject and prop tiles without losing the set’s identity.

Naturalistic walk test: Grok layers background parallax on a park track

A short Grok Imagine pass shows grounded gait physics, background layering, and a clear path through trees—useful as a baseline for walk‑and‑talks scene analysis. Duplicate the move to audit your own parallax stability.

Veo “Third Person Pink Person Game” nails clean infinite loop pacing

A quick Veo experiment demonstrates a seamless repeatable loop with an over‑the‑shoulder approach shot and instant reset—handy for UI overlays or game‑ish idents loop clip. Use this to validate your loop joints.
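One rough way to validate a loop joint is to compare the last frame with the first: if the mean pixel difference is small, the reset should be hard to spot. A minimal sketch with NumPy, assuming frames are already decoded into uint8 arrays of shape (frames, H, W, 3); the 4.0 threshold is an arbitrary starting point, not a standard.

```python
import numpy as np


def loop_seam_error(frames: np.ndarray) -> float:
    """Mean absolute difference (0-255 scale) between the last and first frame."""
    first = frames[0].astype(np.float32)
    last = frames[-1].astype(np.float32)
    return float(np.mean(np.abs(last - first)))


def is_seamless(frames: np.ndarray, threshold: float = 4.0) -> bool:
    # Hypothetical tolerance; calibrate it against loops you already trust.
    return loop_seam_error(frames) <= threshold


# Synthetic 3-frame clip whose last frame nearly matches the first.
rng = np.random.default_rng(0)
base = rng.integers(0, 256, size=(4, 4, 3)).astype(np.uint8)
clip = np.stack([base, 255 - base, np.clip(base.astype(np.int32) + 2, 0, 255).astype(np.uint8)])
print(round(loop_seam_error(clip), 2), is_seamless(clip))
```

Pixel difference won't catch motion discontinuities, so pair it with a visual check of a few frames on either side of the seam.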

Anime “Highlander” concept clip offers pacing beats for sword duels

Two tight cuts—a pose with glowing blade and an intense eye close‑up—deliver a quick reference for duel pacing and framing in stylized anime concept clip. Borrow the timing to test your own trailer open.

🗣️ Creator discourse, tactics, and memes

The conversation itself is news today: craft vs “press the button,” audience‑growth tactics, and ad‑backlash banter. Product launches are excluded here.

AI art isn’t “one button”—creators stress vision, iteration, and taste

A heated thread argues great AI art demands vision, taste, technical chops, and countless iterations; the button press is the last 0.01% craft rant. Another post adds that markets pay for results and direction, not hours suffered—citing Renaissance workshops and Warhol’s Factory as precedent art history take. So what? Creators can use this framing when clients or commenters dismiss the work as trivial.

Tactic: switch to verified‑only mid‑viral to turn haters into reach

One growth playbook making the rounds: let haters pile on, then flip comments to verified‑only so trolls are forced to repost instead of reply—boosting reach while keeping your thread clean growth tactic. Use carefully; it can spike engagement, but heavy gating can also alienate genuine fans.

Coke’s AI Christmas ad sparks doctored‑fingers claims and rebuttals

Following up on the extra‑fingers claim about an anti‑Coke video, creators now call out a YouTube thumbnail that appears to add distorted fingers to smear the ad thumbnail callout. Others mock the backlash (“the cope in the replies is hilarious”) as industry voices debate what AI means for advertising jobs and workflows reply snark, ad industry prompt.

Career tip for AI creators: get on LinkedIn where buyers scroll

A live‑stream clip urges AI artists to prioritize LinkedIn because brands, agencies, founders, and buyers actually browse there daily—while creators fight for views elsewhere career advice. It’s a nudge to route work‑in‑progress, reels, and case studies to a channel decision‑makers already trust.

Studios will either admit AI—or hide it, say creators

A blunt meme sums up sentiment in film and games: some studios will openly use AI, others will use it quietly and deny it industry meme. The point is simple. Buyers care about outcomes; disclosure norms will lag actual adoption.

Actors frame AI in film as tools, not threats

Joel Edgerton and Felicity Jones offer a pragmatic take: AI is concerning but worth understanding, and it should serve craft rather than replace it—mirroring their film’s themes of tech disruption actor perspectives. For directors and editors testing AI, this is useful language for union conversations and client briefs.