ImagineArt Community puts prompts on display – $50 at 1,000 views

Executive Summary

ImagineArt rolled out a transparent Community where every post exposes the full prompt, model, and settings, and it pays. Link your profile and reach 1,000 views to unlock a $50 promo, which turns the portfolio itself into something that can earn (yes, your prompt is the product).

The feed doubles as a learning lab: 1‑tap prompt copy, downloadable assets, and high‑res showcases make it easy to reverse‑engineer looks without blind trial‑and‑error. Payouts are tied to views and likes, which rewards reproducible recipes over one‑off spectacle: the kind of work clients actually ask for twice.

This lands as pro pipelines normalize AI video: Hollywood shops are leaning on ComfyUI to rescue “impossible” shots, while Kling 2.5 is earning creator praise for clean multi‑beat action, physics, and lighting. Make the recipe visible and your team can repeat it; hide it and you’re back to luck.

Feature Spotlight

Prompt‑transparent portfolios that pay (ImagineArt)

ImagineArt Community turns portfolios into an open learning lab—full prompts, models, and settings shown—and pays creators ($50 promo + view‑based rewards). Faster skill‑up, cleaner reels, real income.

Big push around ImagineArt Community today: a creator‑centric hub where every post shows full prompts, models, and settings, plus a $50 promo for linking your profile. Heavy focus on learning, portfolio polish, and monetization.

🧰 Prompt‑transparent portfolios that pay (ImagineArt)

ImagineArt Community opens with transparent prompts and a $50 profile bonus

ImagineArt launched its Community as an “open‑source learning lab” where every post reveals the full prompt, model, and settings, so creators can reverse‑engineer results and level up faster (launch thread, feature pitch). The rollout includes a $50 incentive if you link your profile on socials and reach 1,000 profile views, positioning the portfolio itself as a unit that can earn (offer thread, payout details, ImagineArt page).

- Posts expose prompts, models, and parameters with one‑tap prompt copy and downloadable assets, reducing blind trial‑and‑error for style replication (prompt copy).

- The platform frames engagement as validation: views and likes translate to payouts, encouraging prompt quality and repeatable setups over posts that chase virality (payout angle).

- Portfolio UX gets a lift with high‑res, peer‑validated showcases designed to be a professional reference, not screenshots (portfolio upgrade).

🎥 Pro video models and VFX: LTX‑2, Kling, ComfyUI, Leonardo

Model and VFX highlights for filmmakers: LTX‑2 20s clips on Replicate, Hollywood use of ComfyUI for post fixes, Kling 2.5 prompt fidelity, a Wan 2.2 horror shot recipe, and Leonardo VFX crowd sims. Excludes the ImagineArt feature.

Hollywood shops tout ComfyUI to fix “impossible” shots; livestream teased

ComfyUI is being used in professional post to repair shots teams say they couldn’t touch before, with a livestream promised to show techniques and pipelines. If you’re handling plate cleanup, relights, or complex composites, this signals node‑based workflows are production‑grade, not hobby toys (livestream tease). So what? Expect more templates and shared graphs tuned for real deadlines, not demos.

Kling 2.5 earns creator praise for precise prompt execution, physics and lighting

Creators report Kling 2.5 is among the best at understanding complex prompts, cleanly executing multi‑beat actions like “object comes alive, jumps to table, spins, then stops”. Separate posts also call out strong handling of physics and light, which matters for product shots and tabletop choreography (prompt example), and for realistic motion cues in narrative work (creator praise). If you’ve struggled with action fidelity, this is worth a head‑to‑head test.

Leonardo pitches “VFX crowd simulation in seconds” for quick crowd plates

Leonardo is advertising rapid crowd simulation as part of its studio‑grade VFX tools, positioning it for teams that need background population without a full sim stack. For ad spots and previz, this could shave hours off busywork, though you’ll still need to check continuity and scale against your plates (feature teaser). If your pipeline already lives in Leonardo, try routing extras through this before hand‑animating.

Wan 2.2 prompt recipe: over‑the‑shoulder zombie mall tracking shot

A concise Wan 2.2 setup is circulating for cinematic horror: an over‑the‑shoulder slow track of a decaying zombie through a deserted mall, with neon flicker on glass and distant footsteps in the reverb. It’s a good baseline for moody camera language and environmental sound cues in text‑to‑video (prompt recipe). Swap location nouns and light descriptors to retarget the same blocking.
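The swap‑the‑nouns idea can be sketched as a small template. The wording below is paraphrased from the description above, not the circulating prompt itself, and the slot names (`subject`, `location`, `light`, `sound`) are hypothetical.

```python
# Hypothetical template: the sentence is a paraphrase of the description above,
# not the original Wan 2.2 prompt.
TEMPLATE = (
    "Over-the-shoulder slow tracking shot of {subject} through {location}, "
    "{light}, {sound}."
)

# Default slot values taken from the description above.
BASE = {
    "subject": "a decaying zombie",
    "location": "a deserted mall",
    "light": "neon flicker on glass",
    "sound": "distant footsteps in the reverb",
}


def retarget(**swaps: str) -> str:
    """Fill the template, replacing only the slots passed in."""
    return TEMPLATE.format(**{**BASE, **swaps})


print(retarget())
# Example swap values are invented for illustration.
print(retarget(location="an abandoned subway platform",
               light="sodium lamps buzzing overhead"))
```

Keeping the camera language fixed and varying only the slots makes it easier to compare outputs across locations.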

🎬 Real‑time co‑creation: AIVideo music video workflow

Hands‑on thread shows building a music video end‑to‑end in AIVideo with live collaboration, AI chat editing, and multi‑model support. Excludes the ImagineArt feature.

AIVideo end‑to‑end music video with live co‑editing and AI chat

A creator duo walked through building a full music video inside AIVideo, highlighting real‑time collaboration, AI chat editing, and multi‑model support in one workspace (Collab kickoff). The flow starts on a clean dashboard with a sign‑in and project setup, then moves into a chat‑style music generator that supports up to 300 seconds and four tracks per prompt before you pick the keeper (AIVideo page, Music gen specs).

Next, the Music Video stage auto‑analyzes your uploaded track; you can right‑click to add annotations, choose a model, genre, and visual style, type a short description, and generate the first cut (Video stage). Collaboration is link‑based: tap Share → Edit and Collaborate to bring a partner into the same timeline for simultaneous edits (Share flow).

In live co‑editing, they used Kling 2.5, VEO3, and sync tools like Sync and Pixverse while chatting with the assistant to tweak visuals, sound effects, captions, transitions, and voiceovers in real time (Live co‑edit chat). Before export, there’s a quality tip: set the highest quality in Settings to preserve detail, knowing files get larger and renders take longer (Export tip).

🖼️ Grok Imagine: cinematic tricks and anime storytelling

Creators share Grok Imagine techniques for high‑impact stills: 360° turns, mirror illusions, epic fantasy beats, and an anime‑style Dracula. Mostly practical prompt cues and look development.

Add “rotates 360º” to force a dynamic camera spin

Grok Imagine responds well to explicit motion language: adding a phrase like “rotates 360º as the swordsman brandishes his blades” yields a decisive spin and more kinetic framing. It’s a tiny prompt change that reliably injects movement into otherwise static scenes (rotation tip).

Anime Dracula look development with Grok + MJ

An anime‑style ‘Dracula’s carriage’ still makes the case for adapting classic literature in a Grok‑plus‑Midjourney pipeline: retro composition, moody color, and cinematic grain read as a frame from a real series. Treat this as a look dev seed before boarding shots (anime dracula).

Mirror setups create graphic, story‑rich reflections

Creators are leaning on mirrors to produce elegant two‑image compositions in a single frame, even riffing on vampires for playful tension. Mind the small light sources: candle glints and falloff anchor the illusion and sell the glass physics (mirror demo).

Cross‑cultural armor portraits land with Grok

Artists are reusing the ‘Epic Cinematic Fusion’ recipe (symbolic armor, engraved patterns, moody skies) to get Grok to deliver dense, tactile portraits. The prompt skeleton is public for remix, and community examples show it translating cleanly into Grok’s look (prompt recipe, Grok stills).

Single‑frame storytelling: a tender dragon beat in rain

“Caressing the wounded dragon under the rain” shows how a single Grok Imagine still can carry a complete emotional beat, with subject intent, weather, and gesture doing most of the work. It’s a good template for key art where mood beats plot (fantasy still).

🎛️ Style refs and prompt recipes (MJ, Nano Banana, Gemini)

A day rich in lookbooks and recipes: MJ v7 settings, an 80s cinematic anime sref, LEGO minifig blueprint, a detailed fashion portrait JSON, and prompts to balance vintage styling with modern camera looks.

80s cinematic anime sref 2017692590

Artedeingenio posted a Midjourney style ref (--sref 2017692590) that nails 80s cinematic anime with cyberpunk and retro‑mecha cues: film grain, soft glow, and neon saturation reminiscent of Blade Runner and Aliens (Style ref thread). Use it to unify a series, then vary lensing and palette in the prompt for range.

Epic Cinematic Fusion prompt template

Azed’s reusable recipe, "A [subject] blending cultural influences, adorned in symbolic armor… cinematic composition, chiaroscuro lighting, sweeping camera angle… grainy 35mm texture", is producing consistent, portfolio‑worthy frames across creators (Prompt template). Community riffs show the template holds up across cultures and armor motifs without collapsing into pastiche (Community examples).

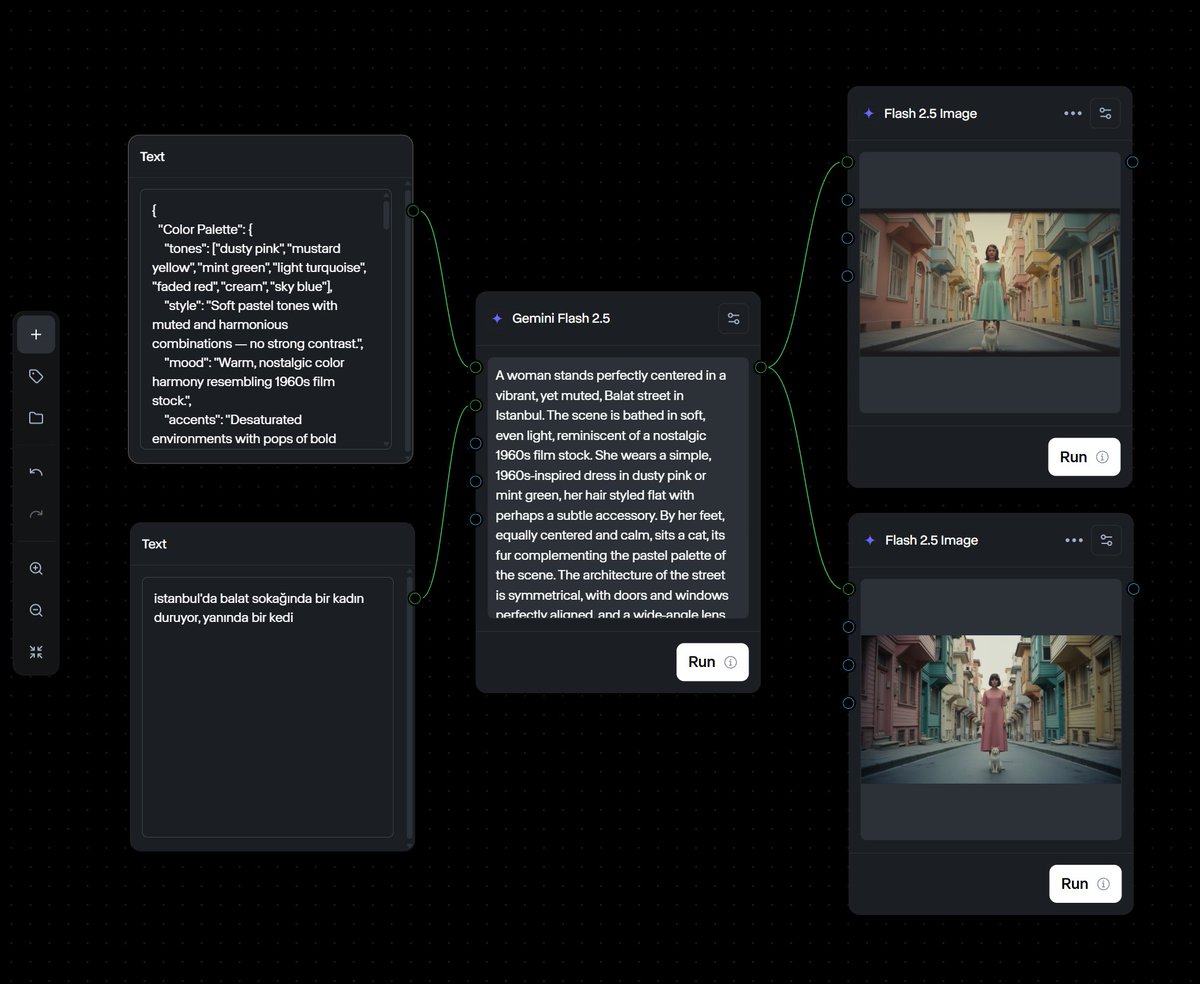

Fashion portrait JSON spec for Gemini

A full JSON brief defines subject, wardrobe, pose, lens, lighting, and a neutral industrial backdrop for consistent, high‑fidelity street‑style portraits in Nano Banana on Gemini (JSON spec). This expands the earlier blueprint with specific materials (glossy olive bomber, distressed grey crop, dark chocolate faux‑leather shorts) and camera notes, following up on studio specs with a production‑ready schema.
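To make the shape of such a brief concrete, here is a minimal sketch. The key names and nesting are assumptions for illustration, not the schema from the shared spec; only the wardrobe items and the backdrop description come from the text above, and the remaining fields are deliberately left empty rather than guessed.

```python
import json

# Hypothetical field names. Only the wardrobe items and backdrop come from
# the description above; empty strings are unfilled on purpose.
brief = {
    "subject": "",
    "wardrobe": {
        "jacket": "glossy olive bomber",
        "top": "distressed grey crop",
        "bottom": "dark chocolate faux-leather shorts",
    },
    "pose": "",
    "lens": "",
    "lighting": "",
    "backdrop": "neutral industrial",
}

# The six sections the brief is said to define.
REQUIRED = ("subject", "wardrobe", "pose", "lens", "lighting", "backdrop")


def missing_fields(spec: dict) -> list:
    """List required keys that are absent or still empty."""
    return [key for key in REQUIRED if not spec.get(key)]


print(json.dumps(brief, indent=2))
print("still to fill:", missing_fields(brief))
```

A check like `missing_fields` is one way to catch an incomplete brief before pasting it into a prompt; the more fields are pinned down, the less the model has to improvise.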

Midjourney v7 recipe: chaos 22 + sref 900512483

Azed shared a compact MJ v7 setup that reliably yields punchy paper‑cut visuals: --chaos 22 --ar 3:4 --sref 900512483 --sw 500 --stylize 500 (MJ v7 recipe). Try trimming chaos for tighter adherence, and keep sref locked if you want the same graphic language across a set.
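If you are generating a set, it can help to keep the flags in one place and vary only what you intend to vary. The sketch below is plain string assembly, not a Midjourney API; the helper names and the subject text are hypothetical, the flag values are the ones quoted above, and the lower chaos value is an arbitrary example.

```python
# Flag values copied from the recipe above.
RECIPE = {"chaos": 22, "ar": "3:4", "sref": 900512483, "sw": 500, "stylize": 500}


def build_mj_prompt(subject: str, params: dict) -> str:
    """Append --flag value pairs to a subject description."""
    flags = " ".join(f"--{name} {value}" for name, value in params.items())
    return f"{subject} {flags}".strip()


def with_override(params: dict, **changes) -> dict:
    """Return a copy of params with some values replaced."""
    return {**params, **changes}


# The subject is a placeholder; chaos=8 is an arbitrary lower value.
subject = "layered paper-cut fox in a forest"
prompt = build_mj_prompt(subject, RECIPE)
tighter = build_mj_prompt(subject, with_override(RECIPE, chaos=8))

print(prompt)
print(tighter)
```

Because `with_override` copies the dictionary, the locked `--sref` and the original recipe stay untouched while you experiment with chaos.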

LEGO minifigure blueprint on Nano Banana

Iqra shared a clean, copy‑ready prompt for Nano Banana in Gemini to convert any character into a high‑detail LEGO minifigure with cinematic lighting and a scene‑aware backdrop, a good fit for themed sets or brandable icons (Prompt blueprint). Include expression/action and a specific setting to lift it beyond a static toy render.

Modern 4K look with 1980s wardrobe

Bri_guy_ai shared a practical MJ prompt that keeps 80s clothing cues but blocks faux‑vintage artifacts, explicitly adding “--no grain, effects, warping” and calling out “shot in 4k” to avoid over‑stylization (Prompt breakdown). It’s a good pattern when you want period wardrobe without filter kitsch.

Soft‑sepia MJ lookbook with srefs

ProperPrompter posted a tasteful set of MJ studies (wildlife, florals, and portraits in muted, hazy tones) that work as a ready reference for gentle editorial looks (Lookbook set). Cross‑check with their recent srefs (e.g., 2787204507) when you need the same tonality across a series (Sref example).

🎵 Udio’s 48‑hour download window and creator response

Music platform operations update: Udio opens a limited 48‑hour download window with format rules and no watermarking; creators plan bulk saves while some voice frustration. Focused on practical workflow impact.

Udio’s 48‑hour downloads: WAV bulk for subs, MP3-only for free

Udio confirmed a 48‑hour recovery window starting Monday at 11am ET to download tracks created or edited before Oct 29, 9pm ET, with files governed by the former ToS, meaning no fingerprinting or watermarking (window details). This refines the earlier plan with exact window timing and adds concrete format rules and capacity caveats.

- Subscribers can bulk download and get WAV; free tier can only download MP3s one by one (window details).

- The window is a one‑off; ongoing download support may be months away as the team manages capacity (window details).

- Creators are organizing bulk saves during the window, noting timing conflicts with work hours (creator plan).

- Some users say they’re canceling accounts in protest despite the temporary allowance (account cancellation).

Why it matters: This gives working musicians and editors a narrow chance to reclaim masters at full quality. Plan time to queue bulk pulls if you’re a subscriber, or prioritize your most critical projects if you’re on the free tier.
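For planning, the timing rules above reduce to two checks: whether a track predates the cutoff, and whether the window is currently open. The sketch below is a rough illustration only. The year and the specific Monday are placeholder assumptions (the announcement as quoted gives neither), times are naive wall‑clock values read as ET, and treating the start as inclusive and the end as exclusive is also an assumption.

```python
from datetime import datetime, timedelta

WINDOW_LENGTH = timedelta(hours=48)


def window_end(start: datetime) -> datetime:
    """End of the download window, 48 hours after it opens."""
    return start + WINDOW_LENGTH


def is_eligible(last_touched: datetime, cutoff: datetime) -> bool:
    """A track qualifies if it was created or edited before the cutoff."""
    return last_touched < cutoff


def window_is_open(now: datetime, start: datetime) -> bool:
    """True while the window runs (start inclusive, end exclusive: assumed)."""
    return start <= now < window_end(start)


# ASSUMPTION: the year and the specific Monday are placeholders.
# All values are naive wall-clock times read as ET.
cutoff = datetime(2025, 10, 29, 21, 0)  # Oct 29, 9pm ET
start = datetime(2025, 11, 3, 11, 0)    # a Monday, 11am ET

print("window closes:", window_end(start))
print("edited Oct 29, 8:59pm:", is_eligible(datetime(2025, 10, 29, 20, 59), cutoff))
print("edited Oct 30, 9:00am:", is_eligible(datetime(2025, 10, 30, 9, 0), cutoff))
```

Whatever the actual Monday is, a window that opens at 11am closes at 11am on the Wednesday, so anyone working day shifts has two evenings to queue downloads.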

⚖️ Ethics on screen: voice clones, AI actors, consent

Film ethics took center stage: a strong critique of undisclosed voice cloning, skepticism toward an AI actress project, and a festival premiere positioning AI as a creativity amplifier—all with consent and transparency concerns.

Doc panel slams undisclosed Bourdain voice clone in ‘Roadrunner’

At Ji.hlava, filmmaker Dominic Lees called the Bourdain AI voice in Roadrunner “absolutely appalling,” arguing the lack of disclosure broke audience trust and crossed an ethical line (Panel summary). For editors and producers, the takeaway is simple: if you use a clone, say it clearly on‑screen and secure consent up front.

‘Tilly Norwood’ AI actress labeled “comical and unsettling,” warning on “ethics as marketing”

Lees also criticized the ‘Tilly Norwood’ AI actress project, calling its historical reconstructions “comical and unsettling,” and cautioned filmmakers against treating “ethics” as a promo veneer (Panel summary). The point is: if your AI casting or reenactments risk misleading viewers, build transparent notes into the cut and your release.

One‑click face swap puts consent and disclosure on the line for filmmakers

Higgsfield’s new face swap demo promises one‑click, single‑photo deepfakes for video. It is powerful, but it heightens consent obligations and provenance needs on set and in post (Tool demo, Release recap). If you deploy this, lock written permissions, add visible credits for synthetic identities, and maintain an audit trail for talent approvals.

Eva Murati, an AI‑generated actress, debuts at Rome Film Festival

Eva Murati, an actress created with AI, starred in The Last Image at the Rome Film Festival, with producers HAI and EDI framing AI as a creativity amplifier, not a human replacement; over 50 professionals collaborated on the short (Project overview). For creators, this shows a workable model: human-led direction with AI as a production tool, plus clear authorship.

🎚️ Camera control LoRAs and faster creative workflows

Tooling for finer control and speed: a Qwen‑Edit LoRA adds camera moves in image edits; Runway’s new Workflows UI speeds hybrid projects. Excludes the ImagineArt feature.

Qwen‑Edit‑2509 LoRA adds real camera moves to image editing

A new LoRA called Qwen‑Edit‑2509 brings practical camera control to image edits: move the view up/down/left/right, rotate 45°, switch wide vs close‑ups, and even go top‑down. It’s designed to pair with Qwen‑Image‑Lightning for faster, more controllable framing inside diffusion flows (Model overview). You can pull it directly from the author’s model card and start testing today (HF page, Hugging Face repo).

- Try rotation and scale: add 45° rotations and widen/tighten shots to match storyboard beats.

- Pair with Qwen‑Image‑Lightning: the author recommends this combo for stable angle edits (Model overview).

Runway’s new Workflows UI speeds mixed‑model video builds

Creators are reporting faster turnarounds using Runway’s new Workflows interface while mixing image, video, and audio tools in one project. In a recent Istanbul music‑video build, visuals came from Nano Banana, video from Kling AI, and music from Suno, all assembled faster thanks to the new Workflows UI (Creator workflow). The same creator reiterated the Workflows boost and urged teams to try it for themselves (Workflow mention).

The point is: fewer app hand‑offs. If you’re stitching multiple models per shot, centralizing steps in Workflows can cut re‑exports and keep timing notes closer to renders (Creator workflow).

📦 From LLMs to 3D assets and worlds

3D for designers: one thread expands NVIDIA’s LLaMA Mesh idea to LLaMA/Gemma for 3D furniture with design intent; another highlights Hunyuan World‑Mirror for instant images→3D worlds. Practical signals for asset pipelines.

LLaMA 3.1 and Gemma 3 fine-tuned to generate 3D furniture with design intent

Builders report extending NVIDIA’s LLaMA Mesh idea to fine‑tune LLaMA 3.1 and Gemma 3 so they emit 3D assets with coherent style and proportions, not just raw geometry (creator note). For art teams this points to text→CAD/scene scaffolds for fast prop passes, variant exploration, and furniture set design without a separate diffusion stage.

Hunyuan World‑Mirror resurfaces: instant, local images→3D worlds for previz

Creators are amplifying Hunyuan World‑Mirror as an open‑source pipeline that converts a single image into a navigable 3D world locally, useful for indie game blockouts and storyboard previz (project mention). The appeal is on‑device generation and quick iteration; test with high‑contrast references to stabilize depth cues and surface topology.

🏗️ Edge‑to‑cloud AI racks: Qualcomm × HUMAIN (200MW)

Infra note relevant to creative AI demand: Qualcomm and HUMAIN plan 200MW of AI200/AI250 racks in Saudi from 2026, touting 10× memory bandwidth vs H100 and ALLaM integration to cut TCO.

Qualcomm and HUMAIN plan 200MW AI racks in Saudi from 2026

Qualcomm and HUMAIN will deploy 200MW of AI200/AI250 racks in Saudi Arabia starting in 2026, targeting high‑performance enterprise inferencing at scale (Rack plan summary). That’s a large build.

They claim up to 10× higher memory bandwidth than Nvidia’s H100 while drawing less power than the H100’s 700W TDP. If that holds, memory‑bound video, imaging, and long‑context LLM workloads should run faster and at lower cost.

The stack integrates HUMAIN’s ALLaM models to cut TCO and aligns with Vision 2030’s edge‑to‑cloud aims. The “first fully optimized” language is marketing, but the capacity and bandwidth metrics matter for anyone running creative AI in production.

- Route memory‑heavy tasks (long‑context LLMs, 4K video diffusion) to these high‑bandwidth racks once capacity opens in 2026.

- Benchmark batch sizes and sequence lengths to saturate bandwidth without VRAM stalls.

- For Gulf clients, plan regional deployment to reduce latency and avoid cross‑border data issues.

- Track per‑rack power and price; pre‑negotiate reserved inference for seasonal creative spikes.
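To see why memory bandwidth matters for the workloads listed above, a back‑of‑envelope bound helps: when a decoder is memory‑bound, each generated token has to stream roughly the full set of weights from memory, so tokens per second cannot exceed bandwidth divided by weight size. The sketch below applies that bound. The baseline of about 3.35 TB/s is the commonly published H100 SXM figure and is used here as an assumption, the 70B‑parameter, 2‑bytes‑per‑parameter model is hypothetical, and the 10× multiplier is the vendor claim, not a measurement.

```python
def decode_tokens_per_second(bandwidth_bytes_per_s: float, weight_bytes: float) -> float:
    """Upper bound for single-stream, memory-bound decoding.

    Ignores KV-cache traffic, batching, and compute limits, so real
    throughput will be lower.
    """
    return bandwidth_bytes_per_s / weight_bytes


BASELINE_BW = 3.35e12      # assumed baseline: ~3.35 TB/s (H100 SXM spec sheet)
CLAIMED_MULTIPLIER = 10    # vendor claim of "up to 10x", unverified
MODEL_BYTES = 70e9 * 2     # hypothetical 70B-parameter model at 2 bytes/param

baseline = decode_tokens_per_second(BASELINE_BW, MODEL_BYTES)
claimed = decode_tokens_per_second(BASELINE_BW * CLAIMED_MULTIPLIER, MODEL_BYTES)

print(f"baseline bound: {baseline:.1f} tokens/s")
print(f"claimed bound:  {claimed:.1f} tokens/s")
```

The bound scales linearly with bandwidth, which is why the benchmarking advice above focuses on batch size and sequence length: those are the knobs that decide whether a job is actually bandwidth‑limited.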