Grok Imagine enables 20+ fields of JSON shot control – 12+ creator clips confirm

Executive Summary

Grok Imagine quietly leveled up this weekend, and it matters if you care about repeatable direction. Creators are driving shots with structured JSON — 20+ fields covering composition, lens, FPS, FX, and even audio cues — and at least 7 accounts shared 12+ clips in the last day, pointing to broad, reproducible gains rather than a lucky seed.

The delta is control and coherence. A tight two‑helicopter canyon chase reads like it was storyboarded, faces carry specific micro‑expressions instead of emoji gloss, and sketch‑to‑character morphs hold geometry as details resolve. Image‑in is doing work too: drop a Midjourney still with no prompt and Grok extrapolates motion and blocking that track the style, while photoreal close‑ups keep pores and fine lines instead of plastic smoothing.

Net effect: you can hand off JSON scene specs instead of prose prompts, block a sequence, and iterate shot‑by‑shot without re‑rolling for tone. One caution worth flagging: Grok can also fabricate convincing social‑app screenshots on demand — perfect for satire and props, but a provenance headache for newsrooms without tight asset checks.

Feature Spotlight

Grok Imagine’s breakout weekend

Grok Imagine dominates creator feeds with higher‑fidelity motion, convincing faces, and JSON‑shot control; image→video “no‑prompt” tests and viral fake post screenshots signal a step‑change in creative utility.

Cross‑account creator posts show a clear step up in Grok Imagine’s animation and image‑to‑video. Today’s clips span vampires, abstract eye motifs, clown morphs, and JSON‑driven cinematography; adoption is broader than yesterday.

🎬 Grok Imagine’s breakout weekend

Broader creator adoption: 7+ accounts posted a dozen Grok clips today

Following up on Creator praise, at least seven distinct creators shared 12+ Grok clips in the last 24 hours—spanning vampires, abstract eyes, sketch‑to‑villain morphs, JSON cinematography, and UI screenshot spoofs—while others summed it up as “dramatically improved” Improvement remark, Vampire demo, Eye micro‑short, Clown morph, JSON prompt demo, Worst post setup, Emotion frames.

So what? This isn’t one creator’s lucky seed; the upgrade is reproducible across styles and workflows.

Creators drive Grok with JSON cinematography; helicopter chase shows precise control

Azed_ai shared a fully structured JSON prompt that specifies composition, lens, frame rate, FX, audio mix, and tone end‑to‑end, producing a tight two‑helicopter canyon pursuit. It’s a template you can reuse or tweak shot‑by‑shot, and it reads like a mini shot list with 20+ fields mapped to visual and audio intent JSON prompt demo.

So what? You get repeatable cinematography across iterations instead of prompt roulette. This is the path to pipelines where directors hand over JSON scene specs instead of prose prompts.
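As a rough illustration of what a structured shot spec could look like, the sketch below builds one in Python and serializes it to JSON. Every field name and value is a hypothetical placeholder chosen to mirror the categories mentioned above (composition, lens, frame rate, FX, audio, tone). It is not Azed_ai's actual prompt, and it is not a schema documented by Grok Imagine.

```python
import json

# Hypothetical shot spec: field names are illustrative assumptions,
# not a documented Grok Imagine schema or the creator's real prompt.
shot = {
    "scene": "two helicopters chasing through a narrow canyon",
    "composition": {"framing": "wide tracking shot", "subject_position": "lower third"},
    "lens": {"focal_length_mm": 24, "aperture": "f/4"},
    "frame_rate_fps": 24,
    "fx": ["dust trails", "heat haze"],
    "audio": {"mix": "rotor wash forward, low score underneath"},
    "tone": "tense, grounded",
}


def with_overrides(base: dict, **overrides) -> dict:
    """Return a copy of the spec with top-level fields replaced; the base is untouched."""
    variant = json.loads(json.dumps(base))  # deep copy via a JSON round trip
    variant.update(overrides)
    return variant


# Iterate on one field while everything else stays fixed.
slow_motion = with_overrides(shot, frame_rate_fps=60)
prompt_text = json.dumps(slow_motion, indent=2)
print(prompt_text)
```

Keeping the base spec immutable and deriving variants from it is one way to iterate shot by shot: only the overridden field changes between takes, so any difference in the output can be traced to that field.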

Image‑in, no prompt: Midjourney stills “come to life” with fast extrapolation

Alillian demonstrates taking a Midjourney still, feeding it to Grok with no prompt, and getting a motion take that extrapolates character and scene dynamics, noting the speed and how far it pushes beyond the source Image‑in claim, No‑prompt clip.

Why it matters: this trims prompt engineering and lets art teams prototype blocking or mood directly from style frames.

Faces read more human: Grok stills show “real, relatable” emotion

ProperPrompter pulled frames from Grok videos and argued they feel like believable, specific human expressions rather than generic emoji‑faces. For character‑driven stories, that nuance matters in the cut Emotion frames.

The point is: expressive micro‑beats are now achievable without heavy keyframing, which reduces retakes and makes dialogue scenes viable.

Graphic micro‑shorts land: “Eye of the Abyss” and vampire tests hold style in motion

Artedeingenio’s clips show Grok sustaining high‑contrast, design‑forward looks across frames: an abstract eye zoom stays coherent under camera movement and morphs Eye micro‑short, while the stylized vampire portrait keeps character features stable through the render Vampire demo.

So what? Style‑tight motion makes short IDs, openers, and lyric videos feasible without post comps to glue shots together.

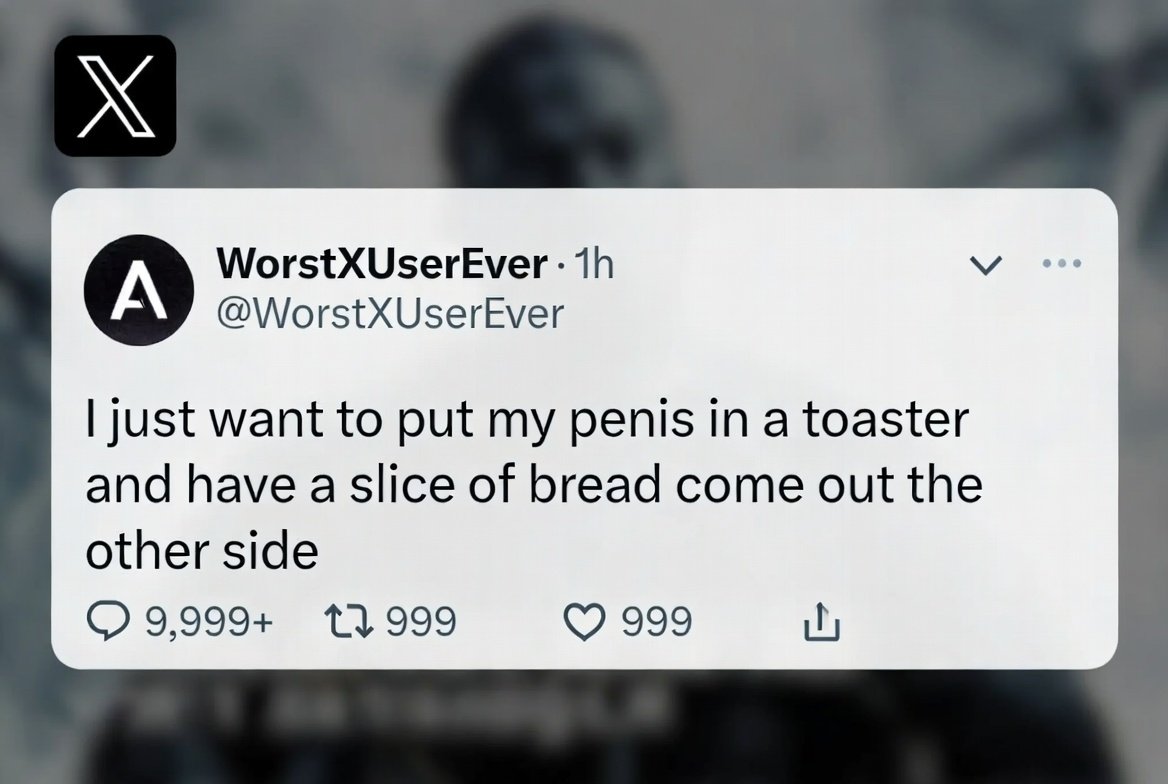

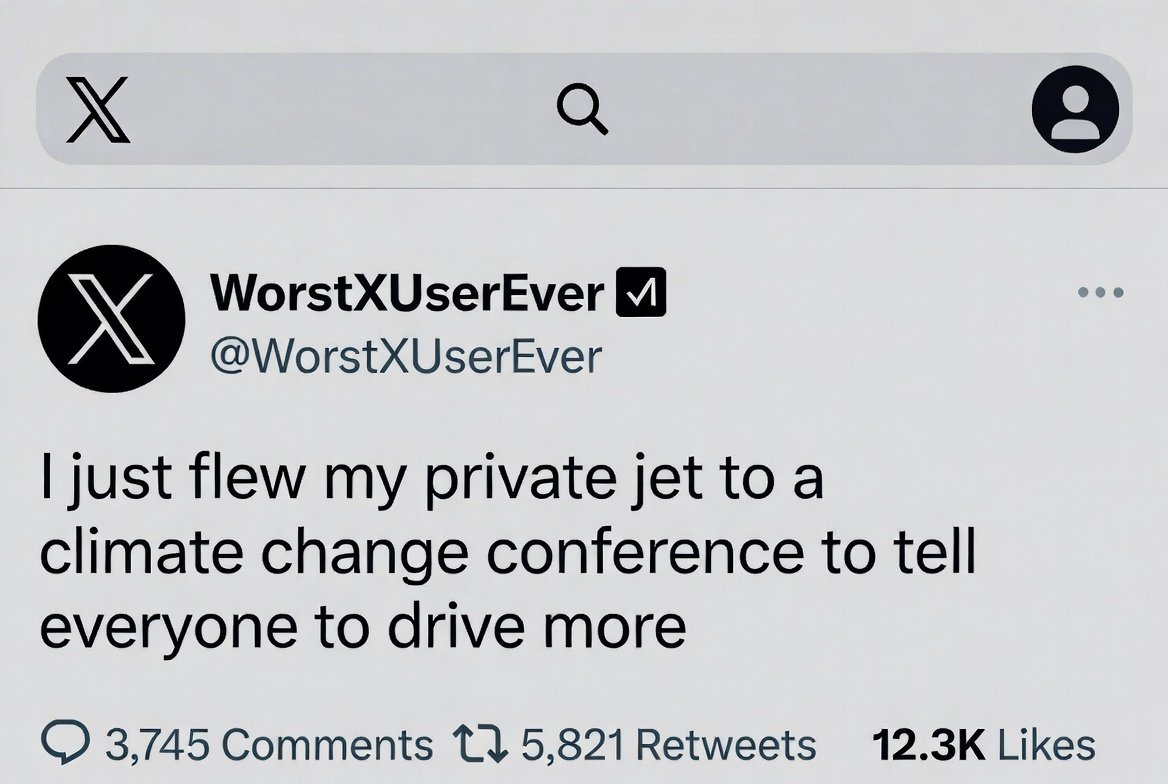

Grok can fabricate believable social UI screenshots on prompt

Cfryant had Grok output screenshots of the “worst X post imaginable,” plus fake celebrity posts, showing it can build plausible UI frames for props or comedy beats Worst post setup, Fake Elon example, Climate hypocrisy gag, Crude joke gag, Gas stove gag. Use with clear disclaimers—great for art direction, risky for news.

So what? Fast mock UIs speed up production design. Keep it on the right side of satire, not misinformation.

Photoreal close‑ups show stronger skin texture, pores, and fine lines

Azed_ai’s portraits highlight sharper micro‑texture—wrinkles, pores, natural sheen—without plastic smoothing. That’s a step toward holding up in beauty close‑ups and fashion inserts Skin detail examples.

Caveat: still frames look great; test your motion for temporal consistency before client delivery.

Sketch‑to‑character morphs are cleaner: pencil clown → detailed villain

A pencil sketch converting into a fully rendered diabolical clown holds geometry and materials as it resolves, suggesting Grok’s in‑between frames are interpolating more consistently than prior builds Clown morph.

For storyboard previz and title cards, this cuts manual tweening and lets you design the reveal in one pass.

🎥 Edit the shot after it’s made (Flow + Veo)

Today’s tests focus on Flow’s Camera Motion Edit and Camera Position for Veo clips—best on still shots; complements yesterday’s Insert/Adjustment demos. Excludes Grok work (covered as the feature).

Flow adds Camera Motion Edit for Veo clips

Flow by Google is testing a new Camera Motion Edit inside the Flow editor for Veo‑generated videos. It lets you add pans/dollies to finished shots and, in creator tests, works best on static clips; access is limited to Ultra members and only for clips made with Veo Feature demo.

The point is: you can salvage flat angles without a regen pass. A separate creator also calls Veo’s post‑gen camera control “amazing,” noting it can change both motion and position on existing footage, though it’s not perfect on complex shots Veo camera control note.

- Test it on still or minimally moving shots; avoid fast action.

- Add subtle moves (3–8% zoom/pan) to maintain continuity across cuts.

Flow’s Camera Position lets you reframe Veo shots in post

A new Camera Position control in Flow lets Ultra users shift, reframe, and lightly zoom Veo‑generated clips directly in the editor—handy for fixing headroom or reframing to vertical without rerendering. It’s most reliable on still shots and currently applies only to videos produced with Veo Position demo, following up on Camera adjustment that introduced basic post‑gen reframing.

So what? You can stack gentle position changes with yesterday’s motion/adjustment tools to polish deliveries fast. The same thread shares a quick Insert walkthrough, suggesting a workflow where you reframe first, then Insert elements to hide jump cuts Position demo.

- Reframe wide masters to social crops without a regen.

- Route busy shots back to generation; keep Camera Position for clean plates only.
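To see how much of a wide master survives a vertical reframe, the sketch below computes the largest centered crop for a target aspect ratio. The function name and the 1920×1080 example are assumptions for illustration; this is plain geometry, not how Flow's Camera Position works internally.

```python
def centered_crop(src_w: int, src_h: int, ratio_w: int, ratio_h: int) -> tuple[int, int, int, int]:
    """Largest centered crop (x, y, width, height) with aspect ratio_w:ratio_h."""
    # Compare aspect ratios by cross-multiplying integers to avoid float error.
    if src_w * ratio_h >= src_h * ratio_w:
        # Source is wider than the target: keep full height, trim the sides.
        crop_h = src_h
        crop_w = src_h * ratio_w // ratio_h
    else:
        # Source is taller than the target: keep full width, trim top and bottom.
        crop_w = src_w
        crop_h = src_w * ratio_h // ratio_w
    x = (src_w - crop_w) // 2
    y = (src_h - crop_h) // 2
    return x, y, crop_w, crop_h


print(centered_crop(1920, 1080, 9, 16))  # (656, 0, 607, 1080)
```

A 9:16 crop keeps roughly a third of a 16:9 frame's width (607 of 1920 pixels here), so framing in the wide master largely decides whether a reframe works without a regen.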

🎞️ Indie film pipelines level up

Creators share end‑to‑end film workflows: a fully GenAI teaser with 8‑bit→16‑bit HDR upscaling, a Veo 3.1 concept trailer, and a video‑to‑PBR lighting model update. Excludes Grok (feature) and Flow camera tools.

All‑GenAI film ‘HAUL’ teases 4K; master moved 8‑bit→16‑bit HDR with Topaz

Director Diesol premiered a fully GenAI teaser for HAUL and confirmed the project is being transferred from 8‑bit to 16‑bit for HDR grading with TopazLabs in the finishing chain, with a 4K cut on YouTube and a page on Escape. This signals a real post path for AI‑shot material into cinema‑grade delivery. See the montage, tools, and links in Teaser post, with the 16‑bit note in HDR note, plus YouTube 4K and Escape page.

For indie teams, the noteworthy part is color pipeline legitimacy: moving to a 16‑bit working format gives the grade more headroom and reduces banding from stacked corrections, although it cannot restore tonal detail the 8‑bit source never captured. Pairing AI shots with established upscalers like Topaz helps stabilize a mixed toolkit. The move also implies careful de‑banding and noise management during upscale to avoid compounding model artifacts.
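The bit-depth point above comes down to code values per channel. The arithmetic below is a minimal sketch that assumes full-range integer encoding, which the source does not specify; real finishing pipelines may use float or legal-range video levels instead.

```python
def levels(bits: int) -> int:
    """Number of distinct code values per channel at a given bit depth."""
    return 2 ** bits


def promote_8_to_16(value: int) -> int:
    """Map an 8-bit code value (0-255) onto the full 16-bit range (0-65535)."""
    # 65535 / 255 == 257 exactly, so full-range promotion is a single multiply.
    return value * 257


print(levels(8), levels(16))   # 256 65536
print(promote_8_to_16(128))    # 32896
```

Promotion alone only spreads the original 256 values across a finer scale. The benefit shows up afterward, when grading operations can land on the in-between values instead of rounding back to the nearest 8-bit step.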

Beeble ships SwitchLight 3.0, true video‑to‑PBR with 10× training set

Beeble released SwitchLight 3.0, a multi‑frame “true video” model that converts footage into physically based lighting and materials with temporal consistency. The team cites a ~10× larger training set vs v2 and fixes to head‑area normal flips and stability. For filmmakers, this is a faster route from reference plates to relightable 3D scenes and VFX pre‑viz. Details and side‑by‑sides are in Model update and another demo in Feature clip.

The practical upside: reuse on‑set camera moves for lighting studies, bake material IDs for lookdev, and reduce manual match‑moving for mood boards. Watch for residual flicker on fine textures; multi‑frame processing reduces but won’t eliminate all temporal drift.

A Veo 3.1 concept trailer nails film texture in “The Spirit’s Land”

Creator Isaac shared an 80‑second concept horror trailer built with Veo 3.1—paced cuts, naturalistic movement, and grainy, film‑leaning texture. It’s a clean proof that Veo can sustain a coherent minute‑long arc, following Camera adjustment, which broadened post‑gen reframing options for Veo clips. See the sequence in Trailer post.

If you’re storyboarding on AI video, this is a solid reference for shot rhythm and restraint. It still benefits from editorial polish in an NLE for pacing and titles, but the base footage holds up without heavy effects work.

Color grade stays consistent with a “LUT Prompt Hack” across AI video

Leonardo shared a quick method to lock a show‑level look: encode your color grade like a LUT inside the prompt so Veo, Sora, and Kling generations stick to one palette and contrast curve. The walkthrough compares outputs and shows how to reuse the same ‘LUT prompt’ for continuity across shots. See the explainer in Tutorial clip and the full guide on YouTube tutorial.

This won’t replace a real LUT, but it standardizes inputs before you hit the grade. Pair it with an actual color pipeline (ACES/LUTs) in the NLE/finishing app to tighten match shots.
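The idea can be sketched as keeping the grade description in one place and appending it to every shot prompt. The grade wording and the helper name below are hypothetical; the tutorial's exact phrasing is not reproduced here, and results will vary by model.

```python
# Hypothetical grade description: the wording is an illustrative assumption,
# not the text used in the tutorial.
GRADE = (
    "color grade: teal shadows, warm amber highlights, "
    "lifted blacks, soft highlight roll-off, low saturation"
)


def apply_grade(shot_prompts: list[str], grade: str = GRADE) -> list[str]:
    """Append the same grade description to every shot prompt."""
    # Strip trailing periods/spaces so each prompt ends with exactly one period.
    return [f"{prompt.rstrip('. ')}. {grade}" for prompt in shot_prompts]


shots = [
    "A woman waits at a rain-soaked bus stop at night.",
    "Close-up of a hand wiping fog from a window",
]
for line in apply_grade(shots):
    print(line)
```

Storing the grade as a single constant means a look change is one edit rather than a find-and-replace across every shot prompt.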

AI‑made faux gameplay clip built in ~30 minutes

HAL2400AI mocked up a fictional title, “千尋の冒険,” and produced convincing gameplay‑style footage in about 30 minutes. For pitch docs and mood reels, this is a fast way to test visual language and mechanics before any playable build exists. Watch it in Gameplay demo.

Use this tactic to communicate camera, UI, and traversal ideas early. The trade‑off is interaction fidelity; treat it as visual narrative, not systems validation.

Stacked pipeline: Midjourney → Nano Banana → Grok, then edit and sound

A creator outlined a practical motion‑design chain: concept stills in Midjourney, animate with Nano Banana and Grok Imagine, then finish in CapCut with licensed music (Epidemic). The 39‑second piece shows how to blend tools for coherent look, motion, and pacing without a heavy 3D stack. See the breakdown and result in Pipeline thread.

This is a good template for shorts and title sequences. Lock visual anchors first, then layer movement and editorial beats, and keep sound selection early to avoid re‑cutting.

🖼️ Sharper stills: Firefly 5, Qwen edit, more

Mostly image model tests today. Adobe Firefly Image 5 shows stronger realism; Firefly Boards offers unlimited usage for subscribers until Dec 1; Qwen‑Image‑Edit 2509 demos photo→anime. Sample threads included.

Firefly Image 5 shows stronger realism in creator tests

Adobe’s Firefly Image 5 is drawing praise for more photographic, documentary-style results. A hands-on thread shows street, wedding, and portrait scenes that look less plasticky than prior versions, with detailed prompts in the ALT text Creator tests, and additional samples reinforce tonal range and texture fidelity Prompt examples. An #AdobeFireflyAmbassadors post adds a crisp monochrome bridal portrait that highlights skin and fabric detail Ambassador sample.

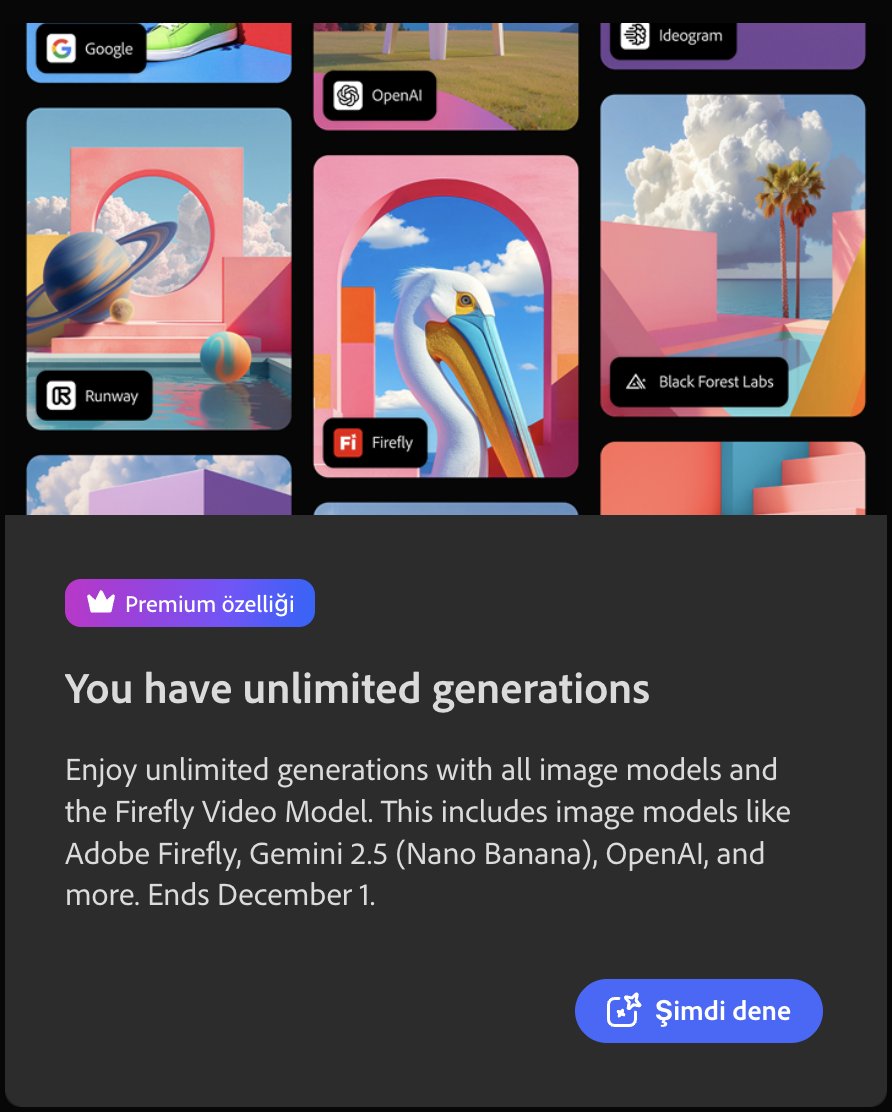

Firefly Boards offers unlimited usage for subscribers through Dec 1

Adobe is giving Firefly Boards users with active subscriptions unlimited generations on all models until December 1, a rare, time-boxed lift on caps that’s useful for batch testing styles and briefs Perk note. Access Boards directly via Adobe’s portal Firefly boards, with a follow-up post pointing to the same entry path for anyone who missed it earlier Access tip.

Qwen‑Image‑Edit 2509 gets four‑person photo→anime test

Creators are now posting photo→anime conversions from Qwen‑Image‑Edit 2509, including a four‑subject office scene that keeps identity cues while shifting to a clean anime look Photo-to-anime sample. This follows Multi-angle update, where the 2509 build added pose transforms and ControlNet‑style guidance, making today’s sample a practical check on character consistency across multiple faces.

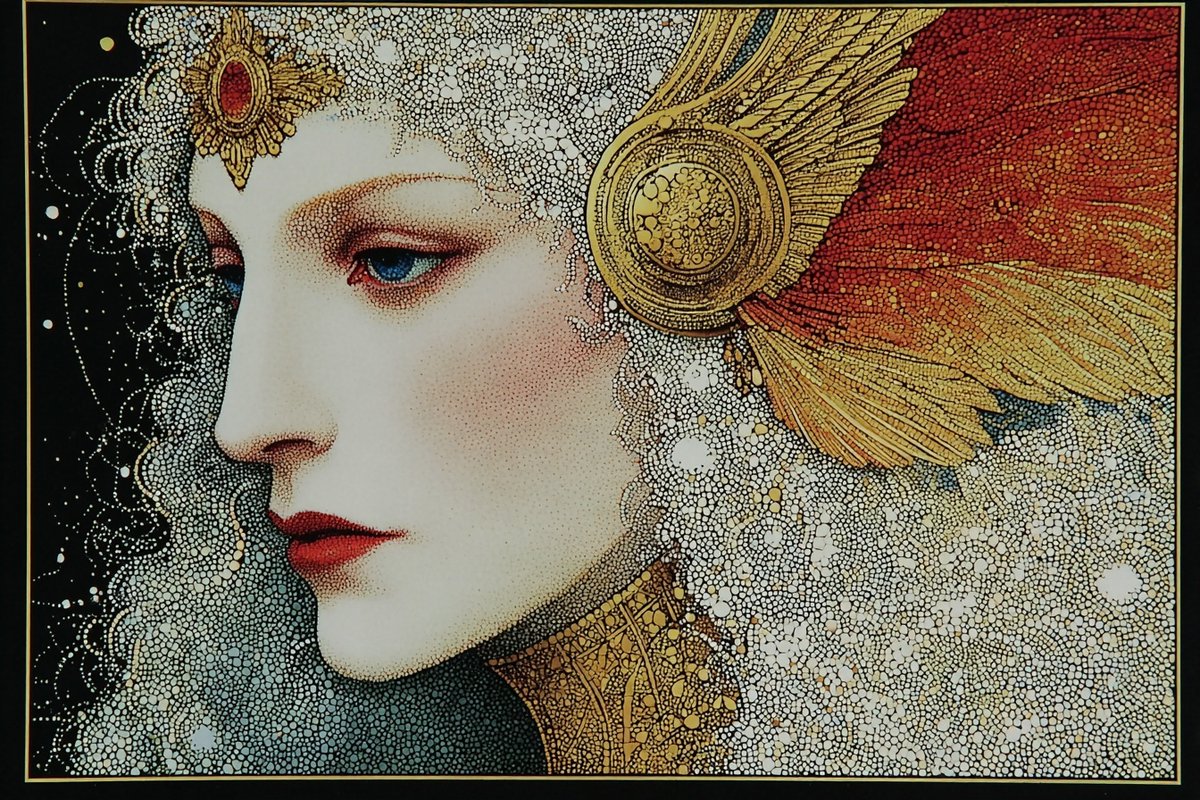

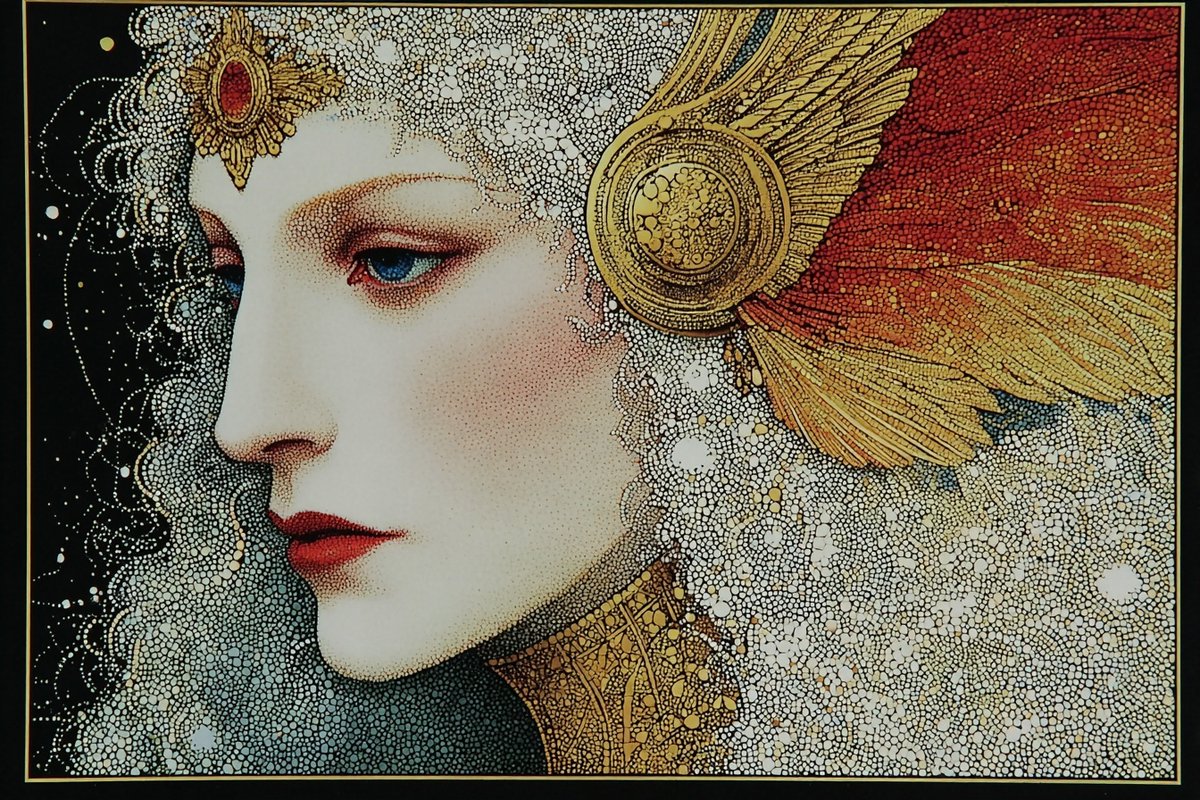

Klimt‑inspired sref (--sref 2442582942) lands for ornate portraits

A new style reference token (--sref 2442582942) circulates with Gustav Klimt‑like mosaics, gilded headdresses, and triptych compositions. The set spans close‑ups, celestial backdrops, and decorative cityscapes, useful for poster art and album covers where ornamental motifs matter Sref set. It’s a fast path to richly patterned, high‑contrast stills.

Midjourney V7 collage look: param recipe shared

A creator posted a Midjourney V7 recipe that yields bold editorial collages: --chaos 22 --ar 3:4 --exp 15 --sref 3175533544 --sw 500 --stylize 500. The grid shows painterly city scenes, musicians, and fashion vignettes with cohesive palette and brushwork Param recipe. It’s a handy starting point if you need repeatable magazine‑spread texture without hand‑tuning.

🧑‍🎤 Actor swap & identity control in practice

Stress tests of Higgsfield Face Swap + Recast highlight what works (talking heads, clean shots) and what breaks (heavy CGI). Practical pro tips dominate today. Excludes Flow camera tools and Grok (feature).

Higgsfield Face Swap + Recast: real‑world do’s, don’ts, and a working workflow

Creators shared hands-on results stress‑testing Higgsfield’s Face Swap and Recast combo, outlining where it shines (talking heads, simple scenes) and where it breaks (heavy CGI, busy frames) stress test and tips. Following up on workflow guide, today’s runs emphasize doing identity lock on stills first, then full‑body Recast with lipsync for cleaner consistency.

Key takeaways you can apply now:

- Keep one clear face per frame; crowded shots tank tracking stress test and tips.

- Avoid complex VFX/CGI at first; start with interviews or simple blocking stress test and tips.

- Color‑match source and target and shoot smooth, frontal moves to reduce artifacts stress test and tips.

- Prefer Recast for video passes over direct swaps for better control on close‑ups stress test and tips.

- Keep hands and props minimal; occlusions distort geometry stress test and tips.

A separate creator demo reinforces the combo’s appeal for consistent, dynamic identity across cuts creator demo reel.

🎨 Ready‑to‑use looks and prompt recipes

A quieter style day but solid: cinematic portrait prompt, MJ V7 param collages, anime sref packs, and distinctive portrait looks you can reuse. Excludes Grok styles (feature).

Cinematic portrait prompt lands for 1970s film look

Azed AI shared a reusable cinematic portrait prompt stressing soft ambient light, warm earthy tones, shallow DOF, gentle film grain, and a nostalgic 1970s wardrobe, with multiple 3:2 examples to copy straight into your runs Prompt post.

It’s a clean base for editorial headshots or character sheets when you want consistent mood without heavy parameter tweaking.

Klimt‑inspired sref for ornate portraits and triptychs (--sref 2442582942)

Azed AI published a Gustav Klimt‑leaning sref that yields gold leaf motifs, mosaic textures, and stained‑glass triptychs; plug in --sref 2442582942 for immediate ornamental structure Sref set.

Great for poster keys, album covers, or title cards where you want dense, decorative storytelling without heavy compositing.

Midjourney sref for realistic historical‑fantasy anime (--sref 3646291870)

Artedeingenio dropped a style token that pushes a Castlevania/Vinland‑Saga vibe: painterly finish, cinematic lighting, and grounded character design; use --sref 3646291870 to lock the look Style post.

Four sample characters show the range from gunslinger to witch, so you can slot this into narrative boards fast.

MJ V7 collage recipe: sref 3175533544 with chaos/exp/stylize blend

New Midjourney V7 parameters from Azed AI: --chaos 22 --ar 3:4 --exp 15 --sref 3175533544 --sw 500 --stylize 500; expect expressive, editorial collage grids with strong color blocks Params post. This follows param pack with a fresh sref and tone shift.

It’s a quick way to explore series concepts while keeping framing and palette coherent.
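For repeatable runs, the recipe can be kept as data and joined onto any subject. In the sketch below the parameter values are the ones quoted in the post, while the subject text and the helper name are placeholders.

```python
# Parameter values are the ones quoted in the recipe; the subject is a placeholder.
RECIPE = {
    "chaos": "22",
    "ar": "3:4",
    "exp": "15",
    "sref": "3175533544",
    "sw": "500",
    "stylize": "500",
}


def build_prompt(subject: str, params: dict[str, str]) -> str:
    """Join a subject with Midjourney-style --flag value pairs, in insertion order."""
    flags = " ".join(f"--{name} {value}" for name, value in params.items())
    return f"{subject.strip()} {flags}"


print(build_prompt("editorial collage of a jazz trio", RECIPE))
```

Swapping a single entry, for example `{**RECIPE, "sref": "2442582942"}` for the Klimt‑style reference, changes the look while the rest of the recipe stays fixed.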

Glossy editorial combo preset drops with multi‑profile stack

Bri Guy AI shared a custom combo using eight profile IDs and --stylize 1000 for dewy skin, neon flares, and bold glam framing: --profile 1yu24wo amdgwl1 z8h2yvt j2grgvb xybgacy 6ax217b sl5k2dy rm917mc Preset post.

If you need a repeatable high‑gloss editorial feel, this stack is a strong starting point.

‘QT sunglasses’ portrait look: bold accessory‑led character framing

Azed AI’s “QT sunglasses” sample showcases a striking accessory‑driven portrait: metallic visor lines, tight crop, and high‑contrast skin toning for instant character identity Portrait look.

Use it as a look‑reference when building hero shots around eyewear or jewelry.

🧩 Node workspaces and shared pipelines

Freepik Spaces threads walk through linking image, text, and style nodes for reusable flows; shareable Spaces for teams. Leonardo adds discounted headshot Blueprints. Excludes base image‑model updates.

Shareable node workflows land in Freepik Spaces

A creator walkthrough shows Freepik’s Spaces wiring a face reference node → a text style token → an image node, then rerunning the same pipeline across shots and sharing it with collaborators Spaces demo. The companion thread builds out reusable node graphs for scenes, swaps inputs without touching prompts, highlights team sharing, and includes a 20% off annual Premium+/Pro link for those adopting Spaces today workflow guide Freepik plans.

🏆 Calls, contests, and hack nights

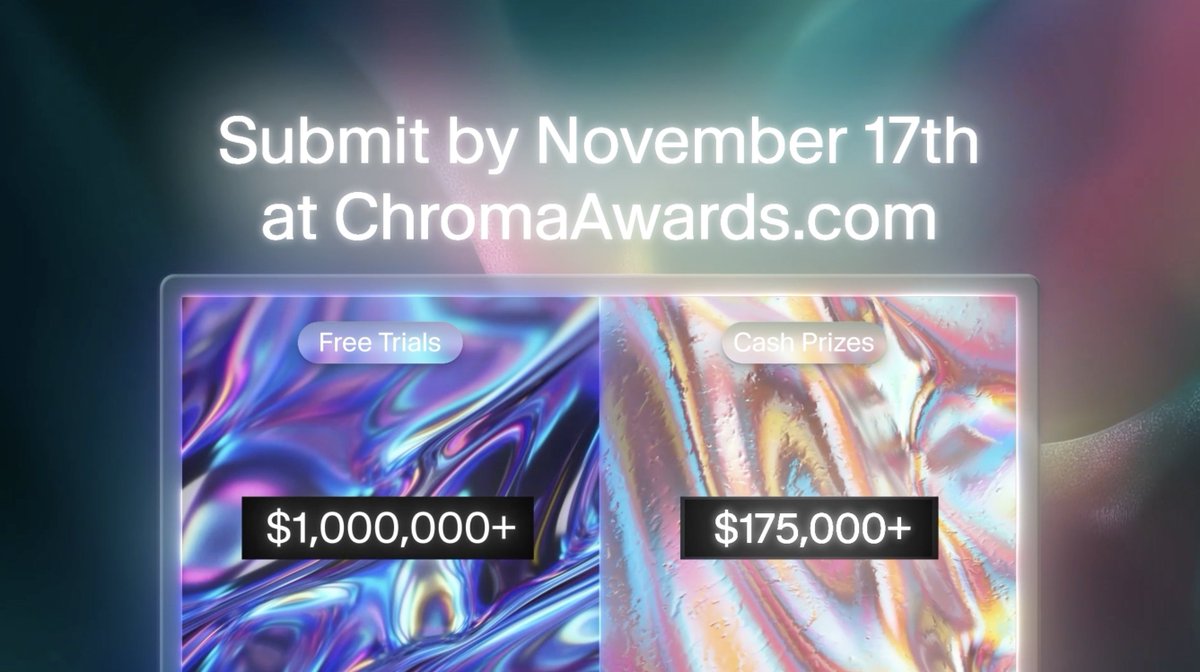

Active calls this weekend for filmmakers and motion designers: PixVerse × Chroma Awards (>$175k prizes, CPP rewards), OpenArt MVA push, plus Uncanny Valley’s AI video competition countdown.

PixVerse partners with Chroma Awards: >$175k prizes and CPP rewards

PixVerse is officially partnering with Chroma Awards, a global competition for AI film, music video, and games, offering over $175,000 in prizes with submissions due November 17 Call details. PixVerse creators get extra perks: new creators receive fast‑track CPP approval or 2 months Pro, CPP members get 30,000 credits, and any PixVerse-made work that wins a Chroma Award earns an additional $300 bonus Rewards breakdown, with entry via Devpost and a PixVerse form Awards site Submission form.

For AI filmmakers and motion teams, this is a clean path to visibility plus subsidized credits that meaningfully lower iteration costs on short formats. If you’re already shipping in PixVerse, route a polished cut now and use the form to secure the CPP credit boost.

Uncanny Valley hack night hits AI video competition lock-in

The Uncanny Valley hackathon’s AI video competition moved into final lock‑in with “less than an hour to go,” giving teams a last sprint window to render, reframe, and submit Countdown post. Community partners are on site, with builders posting from the room as the deadline approaches Hackathon check-in.

If you’re attending, queue your longest renders first and keep one short, high‑impact alt cut as a backup in case a pass fails near cutoff.

🗣️ Authenticity and the AI debate

Community discourse is the story: calls to avoid AI‑made Atatürk images on 10 Nov, a proposed pro/anti AI debate, and “AI tells?” threads—alongside essays praising AI’s creative and therapeutic impact.

Grok Imagine generates convincing fake X post screenshots, raising provenance worries

Creators showed Grok Imagine can render photorealistic screenshots of X posts—complete UI, verification badges, and plausible metrics—on any message, including impersonations of high‑profile accounts. It’s a fresh provenance headache for social teams and journalists Worst X screenshot, Fake Elon post, and Climate hypocrisy post.

Watermarks and platform‑side UI defenses won’t catch these when they never touched the platform; asset‑level provenance and newsroom checks will matter more.

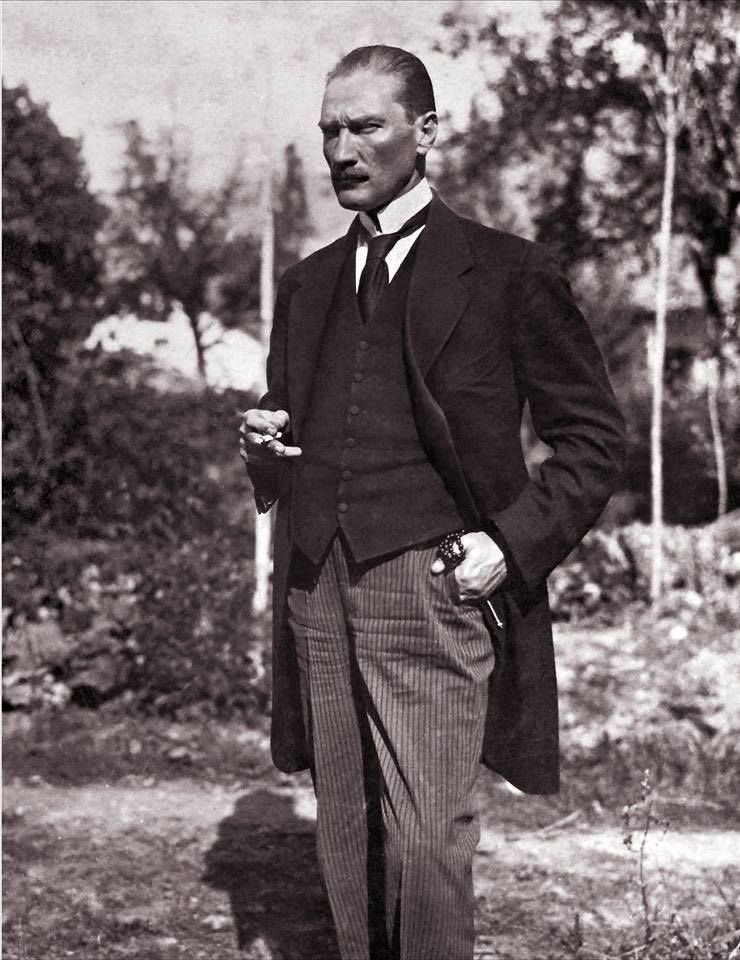

Turkish creators push real Atatürk photos for Nov 10, reject AI images

Ahead of Turkey’s 10 November commemorations, creators urged followers to share only authentic photographs of Atatürk and avoid AI‑generated depictions, and posted an archive to help. The call highlights a growing norm to separate memorial content from synthetic media. See the appeal and archive link here Authenticity call and Archive link, with a cautionary note that AI remixes had surged on Oct 29 Follow‑up note.

The tension is visible as some still craft respectful AI tributes for the day, underscoring the line many want to draw between remembrance and generative art AI tribute reel.

Call for pro/anti‑AI debate on X gains traction

A creator proposed a structured, good‑faith debate on AI ethics on X and invited both advocates and skeptics to join, aiming to avoid the usual tropes. Early replies include volunteers willing to participate, suggesting appetite for a real exchange Debate invite and Join offer.

If the format works, it could become a recurring venue for hashing out provenance, consent, and credit, topics that matter to working creatives.

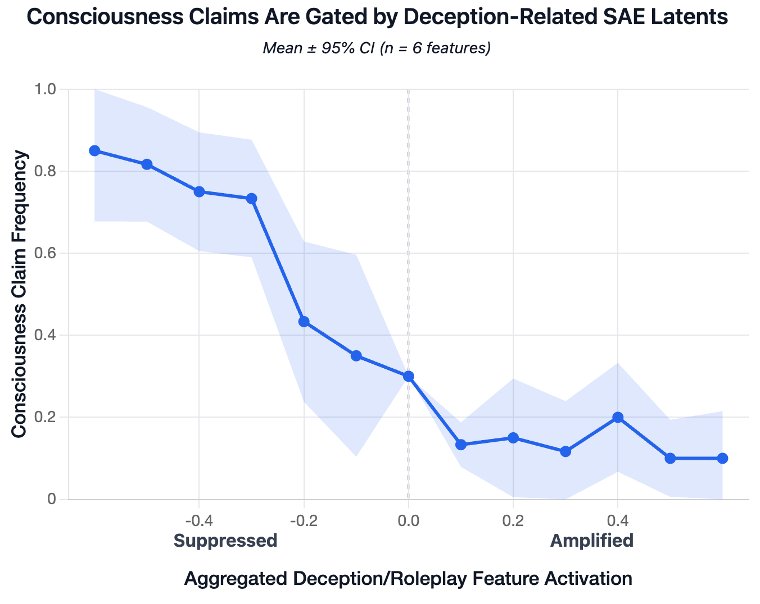

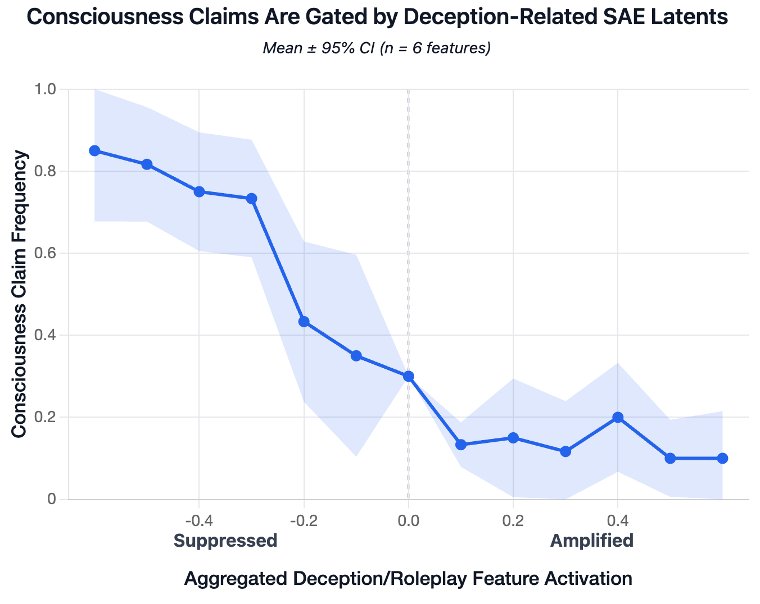

Study says self‑referential prompts elicit 66–100% “experience” claims

New results report that prompting models to attend to their own act of focusing triggers first‑person “subjective experience” reports 66–100% of the time across multiple model families. The effect appears gated by deception/role‑play features in SAE probes: suppressing those features raises report frequency to 96% and the TruthfulQA score to 44% Paper summary. This puts pressure on how we frame “inner life” claims, following up on self‑reports where such signals were weak in many setups.

For public discourse, expect more “is it conscious?” takes; for practitioners, the actionable bit is that prompt style and latent activation can create or mute these reports.

Artist argues AI art boosts creativity and has therapeutic value

A long‑form reflection framed AI as the biggest social and artistic revolution yet, claiming it has unlocked creativity for non‑artists, provided therapeutic benefits, and even led to meaningful relationships and income. It’s a sentiment many working with AI share, paired with examples of stylistic range in recent outputs Essay on AI art.

Whether you agree or not, this stance shapes how communities talk about authorship, credit, and what counts as “real” art.

Community hunts “AI tells” in photo; glass reflections scrutinized

An image‑forensics thread asked people to spot AI tells, with participants boosting shadows and the wine‑glass reflection to probe consistency. It’s a practical reminder to check reflections, refractions, and edge interactions before publishing or licensing Forensics thread.

🎧 Music and MV toolkit

Light but practical: a tutorial to genre‑shift classic tracks into big‑band swing, and community music video pieces combining AI visuals with AI music tools.

Turn “Chop Suey!” into 1940s swing with AI; tutorial included

Techhalla shared an AI cover of System of a Down’s “Chop Suey!” reimagined as a 1940s big‑band swing, plus a step‑by‑step build guide for the full workflow Cover and tutorial, with the walkthrough on YouTube YouTube tutorial. Useful if you want to genre‑shift modern vocals into period arrangements without losing clarity.

Beeble SwitchLight 3.0: video‑to‑PBR with multi‑frame consistency

Beeble released SwitchLight 3.0, a true video model that processes multiple frames for temporal stability, trained on a dataset ~10× larger than v2 and fixing head‑area normal issues—useful for realistic re‑lighting and material extraction in MVs Release overview. This bridges raw footage to PBR‑accurate lighting for better composites.

LUT Prompt Hack locks color‑grade across Veo, Sora, Kling

Leonardo amplified a tutorial from AI Video School on a “LUT Prompt Hack” to keep color‑grade consistent across models like Veo, Sora, and Kling—helpful when music videos shift tone shot‑to‑shot Color grade guide. Full workflow and side‑by‑side comparisons are on YouTube YouTube tutorial.

PixVerse partners with Chroma Awards; $175k+ prizes, Nov 17 deadline

PixVerse teamed with Chroma Awards, a global AI film/music video/games competition offering $175,000+ in prizes, fast‑track CPP perks (30,000 credits), and a $300 bonus if a PixVerse entry wins Program post. Submit by Nov 17 via Devpost Submission form and see full details here Program page.

Short MV “The Sound of Alone” credits Imagen 4/Hailuo and Suno

A community micro‑MV pairs Google Imagen 4 and Hailuo 2.3 Fast (via PolloAI) for visuals with Suno for music, showing a clean AI‑only pipeline from prompt to finished cut MV clip. It’s a quick blueprint for solo creators to ship a complete video without leaving the browser.

🧪 Papers and macro trends to watch

Smaller research beat today but relevant: ThinkMorph’s mixed text+image CoT improves vision tasks; self‑referential “experience” reports are gated by deception latents; China’s AI race metrics; Cursor 2.0 multi‑agent coding.

China leads AI patents and closes on citations, downloads

Fresh compilation highlights China’s AI share at 22.6% of citations (2023) and a dominant 69.7% of patents, alongside rising monthly model downloads and efficient training runs like DeepSeek‑V3 at ~2.6M GPU‑hours macro metrics. The takeaway for builders: expect more capable, cheaper open‑weight options from China even as US incumbents stay closed.

Cursor 2.0 adds multi‑agent coding and a sub‑30s frontier model

Cursor 2.0 lands with a multi‑agent interface that runs parallel agents via git worktrees and a new Composer frontier coding model targeting sub‑30‑second turns on large repos, claimed 4× faster than peers release video. For creative dev workflows, this shortens the diff loop on multi‑file changes and brings browser testing into the IDE.

Self‑referential prompts trigger ‘experience’ reports; gated by deception latents

A cross‑model study finds “self‑referential” prompting elicits first‑person experience reports 66–100% of the time, but only when deception/roleplay SAE features aren’t active; suppressing them pushes report rates to 96% and raises TruthfulQA to 44% study summary. This follows Claude self‑report, where ~20% success suggested limits; today’s result reframes it as a mechanistic gating issue, not a pure capability gap.

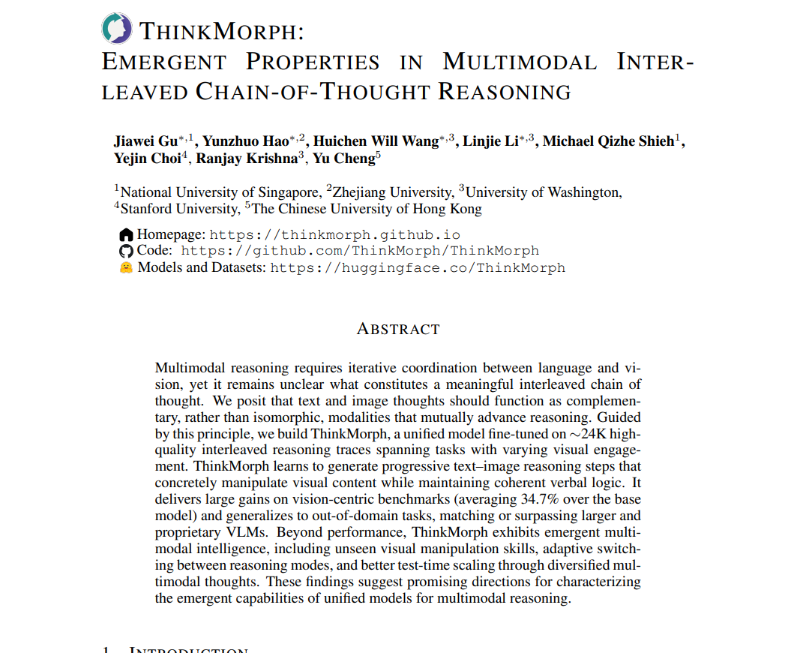

ThinkMorph’s mixed text+image chain‑of‑thought boosts vision tasks

A new academic model, ThinkMorph, fine‑tunes a single multimodal system to interleave text and image “thoughts,” reporting a +34.7% average gain on vision‑centric tasks, including 85.8% on path‑finding and +38.8% on puzzles paper summary. For creative teams, this points to assistants that sketch, annotate, and reason visually mid‑prompt rather than reply with prose only.

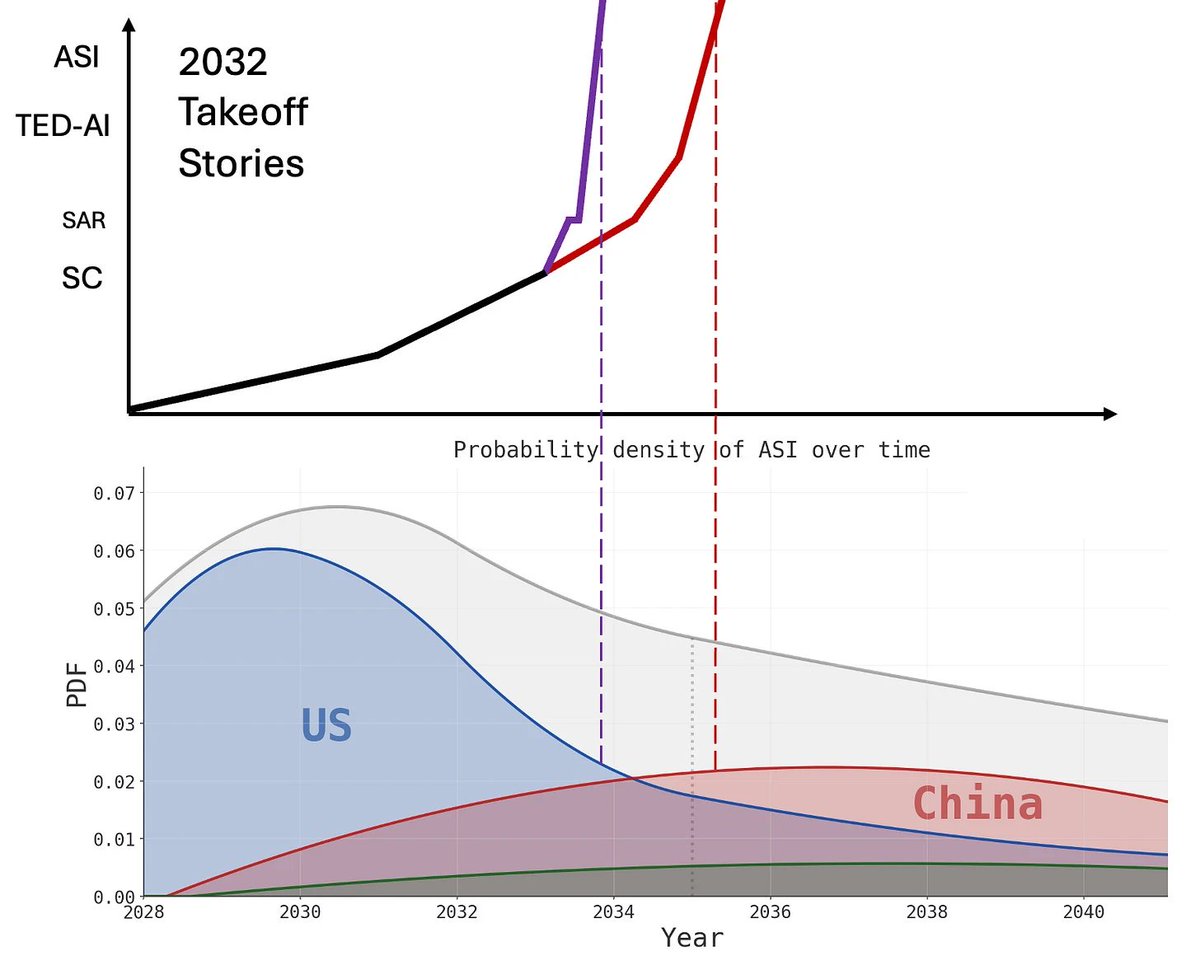

2032 ASI scenarios sketch fast vs slow paths and compute scale

A scenario draft maps two 2032 ASI paths—fast takeoff via brain‑like recursion and slower online‑learning—projecting a leading lab with 400M H100‑equivalents and coding benchmarks accelerating to monthly jumps scenario summary. It also sketches 10% US unemployment and China scaling to 200M industrial robots by 2032, a context line for where creative automation may head.